Scientist impact factor (SIF): a new metric for improving scientists’ evaluation?

Introduction

The publication of scientific research is the mainstay of broad knowledge dissemination, but it is also an essential criterion in the evaluation of scientists for obtaining funds and for career progression (1). In many academic systems around the world, individual scientific output is conventionally evaluated using objective science metrics, which are intended to allow reliable benchmarking and fair comparison. The most widespread approach combines the so-called H-index, the total impact factor (IF) and the overall number of citations (2).

The H-index is a combined measure of the productivity and citation impact of a scientist’s publications, and is conventionally defined as the largest number of publications (i.e., “np”) that have each received at least the same number of citations (i.e., “n”) (3). As an example, an H-index of 20 means that at least 20 scientific publications have received 20 or more citations each, whereas an H-index of 100 implies that at least 100 scientific publications have received 100 or more citations each. Put simply, the higher the H-index of a scientist, the larger the presumable impact of his/her publications on the scientific community. Although this measure has some valuable advantages (4), it also has clear drawbacks: the H-index varies widely according to the scientific database used for its calculation (5); it does not reflect the real contribution of the scientist to each publication (i.e., the scientist’s position in the list of authors is not considered); and, last but not least, it depends on the age of the publications. In fact, the overall H-index is a measure of long-term scientific output, and it is only marginally influenced by recent publications. The use of the total IF of a scientist’s publications is also questionable. As with the H-index, the total IF does not truly mirror his/her active contribution to the published research, and it largely depends on the IF of the journal, which may increase or decrease independently of the quality of the article published by the individual scientist (6). Another important aspect is that the IF of the journal in which an article has been published does not really mirror the success of that article, since the IF is calculated from the overall number of citations to all the articles published in that journal.
The last widely used metric, i.e., the total number of citations, also has an inherent drawback since, like the H-index, it is a whole-career measure, does not reflect recent scientific productivity and may depend more on the overall number than on the quality of the publications.
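
As an illustration of the H-index definition given above, the calculation can be sketched in a few lines of Python. This sketch is not part of the original analysis; the function name `h_index` and the citation counts are hypothetical and serve only to make the definition concrete.

```python
from typing import Iterable


def h_index(citations: Iterable[int]) -> int:
    """Return the largest h such that h articles have at least h citations each."""
    # Rank the articles from the most cited to the least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the first `rank` articles all have >= `rank` citations
        else:
            break
    return h


# Hypothetical citation counts for five articles of one scientist.
print(h_index([10, 8, 5, 4, 3]))  # 4: four articles have at least 4 citations each
```

Because only the ranked citation counts enter the calculation, neither the publication year nor the position of the scientist in the list of authors has any influence on the result, which is the limitation discussed above.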

Due to the clear limitations of conventional science metrics, the process of evaluating scientists remains quite challenging (7). This is particularly evident when applying for competitive funding, since none of these metrics truly reflects recent scientific output. Therefore, we propose here a new science metric, which we have tentatively called “scientist impact factor” (SIF), and which aims to overcome some of the limitations of, and thereby complement, the currently used science indices.

Methods

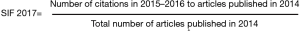

The principle for calculating the SIF is essentially the same as that used for estimating the journal IF, which was originally proposed by Garfield decades ago (8). Briefly, the SIF is calculated as the number of citations received, in the two years following the publication year, by the articles published in that year, divided by the overall number of articles published in that year. As an example, the SIF for the year 2017 is obtained by dividing all citations in the years 2015–2016 to articles published in the year 2014 by the overall number of articles published in the year 2014 (Figure 1). This calculation should hence overcome at least two major drawbacks of the H-index and of the overall number of citations, since (I) the total number of recent citations is normalized to the number of recently published articles, thus limiting the bias that arises from publishing a large number of scarcely cited articles; and (II) the output measure reflects the recent scientific impact of the scientist, thus complementing an overall career indicator such as the H-index.
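
The calculation described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the procedure actually used to extract data from Scopus: the function name, the data layout (one publication year plus a per-year citation count for each article) and the example figures are all hypothetical, and returning `None` for a scientist with no articles in the reference year is a choice made here, since the text does not define the SIF in that case.

```python
from typing import Dict, List, Optional, Tuple

# One article: (publication year, {citing year: citations received in that year}).
Article = Tuple[int, Dict[int, int]]


def scientist_impact_factor(articles: List[Article], sif_year: int) -> Optional[float]:
    """SIF for `sif_year`, following the 2017 example in the text.

    Articles published in `sif_year - 3` are considered, and their citations in
    the two following years (`sif_year - 2` and `sif_year - 1`) are counted.
    """
    publication_year = sif_year - 3
    citation_window = (sif_year - 2, sif_year - 1)

    cohort = [cites for year, cites in articles if year == publication_year]
    if not cohort:
        return None  # assumption: SIF left undefined without articles that year

    total_citations = sum(cites.get(y, 0) for cites in cohort for y in citation_window)
    return total_citations / len(cohort)


# Hypothetical record: three articles from 2014 and one from 2013.
example = [
    (2014, {2014: 1, 2015: 4, 2016: 6, 2017: 9}),  # only 2015-2016 count: 10
    (2014, {2015: 0, 2016: 2}),                    # 2
    (2014, {2015: 3, 2016: 3}),                    # 6
    (2013, {2015: 20, 2016: 30}),                  # ignored for the 2017 SIF
]
print(scientist_impact_factor(example, 2017))  # 18 citations / 3 articles = 6.0
```

In this hypothetical record the highly cited 2013 article does not contribute to the 2017 SIF, which illustrates how the metric isolates recent output from earlier achievements.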

The SIF was then tested by analyzing the data of the top 40 scientists of the University of Verona, Italy (ranked according to their individual Scopus H-index). Briefly, the individual H-index, the number of articles published in 2014 and the overall citations to these articles in the following two years (i.e., 2015–2016) were retrieved from Scopus (Scopus, Elsevier BV, Amsterdam, Netherlands), which is the largest validated scientific database (9). The relationship between the total H-index, the 2 years H-index (i.e., for the years 2015–2016) and the SIF was then analyzed with Pearson’s correlation, using Analyse-it (Analyse-it Software Ltd., Leeds, UK).
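
For readers who wish to repeat this type of analysis without dedicated statistical software, Pearson’s correlation coefficient can be computed as in the following Python sketch. It is only an illustration: the function name is hypothetical, the values are invented and do not correspond to any scientist in the study, and the original analysis was performed with Analyse-it.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson's product-moment correlation coefficient between x and y."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")

    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of cross-products and of squared deviations from the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return sxy / math.sqrt(sxx * syy)


# Invented H-index and SIF values for five hypothetical scientists.
h_values = [62, 55, 48, 41, 37]
sif_values = [3.1, 7.4, 2.2, 5.9, 4.0]
print(round(pearson_r(h_values, sif_values), 2))
```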

Results

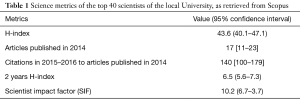

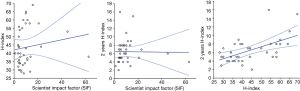

The main science metrics of the top 40 scientists of the local University, as retrieved from Scopus, are shown in Table 1. The results of Pearson’s correlation are shown in Figure 2. Briefly, no correlation was found between SIF and H-index (r=0.15; 95% CI, −0.17 to 0.44; P=0.367) or 2 years H-index (r=−0.01; 95% CI, −0.32 to 0.30; P=0.933), whereas the H-index and 2 years H-index values were found to be significantly correlated (r=0.57; 95% CI, 0.32 to 0.75; P<0.001), which suggests that these two measures provide a rather similar indication of scientific impact. Notably, a highly significant correlation was also observed between the number of articles published in 2014 and the total number of citations to these articles in 2015–2016 (r=0.62; 95% CI, 0.38 to 0.78; P<0.001).
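
The confidence intervals reported above are consistent with those obtained from a Fisher z-transformation of r with n=40. The following Python sketch shows this derivation; it is an assumption about how such intervals can be reproduced, not a description of the procedure implemented in Analyse-it, and the function name is hypothetical.

```python
import math
from statistics import NormalDist
from typing import Tuple


def pearson_confidence_interval(r: float, n: int, level: float = 0.95) -> Tuple[float, float]:
    """Approximate confidence interval for Pearson's r via Fisher's z-transformation."""
    if n <= 3:
        raise ValueError("at least 4 observations are required")
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")

    z = math.atanh(r)                  # Fisher transformation of r
    se = 1.0 / math.sqrt(n - 3)        # approximate standard error of z
    crit = NormalDist().inv_cdf(0.5 + level / 2.0)  # about 1.96 for a 95% interval
    # Back-transform the limits from the z scale to the correlation scale.
    return math.tanh(z - crit * se), math.tanh(z + crit * se)


# SIF versus H-index as reported in the text: r=0.15 with 40 scientists.
low, high = pearson_confidence_interval(0.15, 40)
print(round(low, 2), round(high, 2))  # -0.17 0.44, matching the reported interval
```

Since the published coefficients are rounded to two decimals, intervals recomputed in this way may differ from the reported ones in the last digit.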

Discussion

Due to the unprecedented financial crisis which has plagued many Governments worldwide, access to academic positions and research grants is becoming much more challenging than before (10). Therefore, the use of reliable science metrics by competitive funding systems and Universities will gain ever-increasing importance for allowing a fair and objective evaluation of scientists.

Our analysis of the possible use of the new SIF science metric highlights some important aspects. Quite surprisingly, we first observed a highly significant correlation between the individual values of the H-index and the 2 years H-index (Figure 2). This means that the latter science metric is largely redundant when the overall H-index is already applied for evaluating scientists. This probably reflects the fact that the scientists of the local University tended to be quite constant in publishing highly-cited articles, so that the introduction of an identically calculated index aimed at reflecting more recent scientific impact may be seen as unnecessary. The complete lack of correlation between the SIF and the 2 years H-index is instead the most intriguing finding (Figure 2). Inherently, this means that the number of highly-cited recent articles does not predict the normalized number of recent citations over the same period. Therefore, this lack of correlation would suggest that the two science metrics are not redundant, so that the use of the SIF may provide added value in the process of evaluating scientists. A similar consideration can be made about the lack of correlation observed between SIF and overall H-index (Figure 2), which also means that the recent scientific impact normalized to the number of published documents is not predicted by the number of highly-cited articles published by the same scientist throughout his/her scientific career.

Taken together, the results of our analysis suggest that the use of the SIF may have some significant implications. Like the more conventional journal IF, the SIF does not simply reflect the number of citations to recent articles, but also introduces the important concept of normalizing these citations to the total number of articles published in the reference year. This aspect is especially important considering that a highly significant correlation was found between the total number of articles published in 2014 and the total number of citations to these articles in the ensuing two years, which essentially means that the number of recently published articles is a significant predictor of the number of subsequent citations. The use of the SIF may hence be useful for reducing the possible bias due to obtaining a high number of citations only because the scientist has published a large number of relatively low-impact articles. The specific formula used for calculating the SIF, which is rather similar to that used for estimating the journal IF, also allows reliable information to be obtained about the “real” scientific impact of a single scientist, since it only reflects recent scientific impact and is not influenced by past achievements throughout a long academic career. Therefore, the use of the SIF would be especially helpful for young scientists, since this metric reflects scientific output over a recent two-year period, thus giving them more chances to apply for and obtain competitive funding or to progress in their academic career.

Conclusions

Although the H-index, the total IF and the overall number of citations are expected to remain the cornerstone of scientists’ evaluation, we believe that the implementation of the SIF may be seen as a valuable opportunity for enabling a fairer, more objective and more reliable analysis of recent scientific impact.

Acknowledgements

None.

Footnote

Conflicts of Interest: The authors have no conflicts of interest to declare.

References

1. Abbott A, Cyranoski D, Jones N, et al. Metrics: Do metrics matter? Nature 2010;465:860-2.

2. Van Noorden R. Metrics: A profusion of measures. Nature 2010;465:864-6.

3. Hirsch JE. An index to quantify an individual's scientific research output. Proc Natl Acad Sci U S A 2005;102:16569-72.

4. Lippi G, Borghi L. A short story on how the H-index may change the fate of scientists and scientific publishing. Clin Chem Lab Med 2014;52:e1-3.

5. Lippi G, Mattiuzzi C. The challenges of evaluating scientists by H-index and citations in different biomedical research platforms. Clin Chim Acta 2013;421:57-8.

6. Lippi G. The impact factor for evaluating scientists: the good, the bad and the ugly. Clin Chem Lab Med 2009;47:1585-6.

7. Lane J. Let's make science metrics more scientific. Nature 2010;464:488-9.

8. Garfield E. Citation indexes to science: a new dimension in documentation through association of ideas. Science 1955;122:108-11.

9. Lippi G, Favaloro EJ, Simundic AM. Biomedical research platforms and their influence on article submissions and journal rankings: an update. Biochem Med (Zagreb) 2012;22:7-14.

10. Escolar-Pujolar A, Bacigalupe A, San Sebastian M. European economic crisis and health inequities: research challenges in an uncertain scenario. Int J Equity Health 2014;13:59.