A deep learning, image based approach for automated diagnosis for inflammatory skin diseases

Introduction

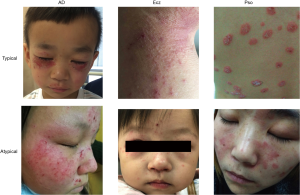

Inflammatory skin diseases are skin disorders characterized by inflammatory cell infiltration and dramatically elevated levels of inflammatory cytokines. They affect more than one fifth of the world's population and include psoriasis (Pso), eczema (Ecz), and atopic dermatitis (AD), among others. Dermatologists usually diagnose these diseases from a “first impression” and then use pathological analysis and laboratory tests to confirm it. However, less experienced and younger dermatologists are particularly susceptible to errors, since Pso, Ecz and AD are easily mistaken for one another (Figure 1). To address this problem and assist dermatologists, we developed an end-to-end deep learning model, based on clinical skin images, for the automated diagnosis of Pso, Ecz and AD.

Convolutional neural networks (CNNs) have shown great power in the analysis of clinical images, and an increasing number of studies have reported promising results for CNNs in a variety of diseases. For example, CNNs have been applied to assist in the early diagnosis and detection of Alzheimer's disease from brain electroencephalogram (EEG) spectral images and MRIs (1,2), to predict the risk of osteoarthritis from knee cartilage MRIs (3), to segment multiple sclerosis lesions in multi-channel 3D MRIs (4), to diagnose breast nodules and lesions from ultrasound images (5), and to detect diabetic retinopathy in retinal fundus photographs (6).

The development of CNNs in dermatology stems from pioneering technologies for assisting in melanoma diagnosis (7,8). There are now multiple examples of AI tools facilitating cancer diagnosis based on data input from dermoscopes and from histological images of skin biopsy tissues (9-11). However, to the best of our knowledge, AI tools have not yet been applied to assist in diagnosing skin diseases other than cancers. Here, we report the development of a smartphone-based platform to assist in the diagnosis of Pso, Ecz and AD. In our study, Ecz and AD were assigned to one group because these two diseases cannot be distinguished by appearance alone, but only in combination with laboratory test results and disease history (12).

Methods

Dataset

Our dataset consists entirely of clinical images from the Department of Dermatology, The Second Xiangya Hospital, Central South University, China.

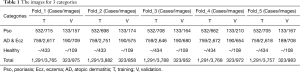

Data preparation

Our dataset contains sets of images of the same lesion taken from multiple viewpoints, as well as multiple images of similar lesions on the same person. While this is useful for training, great care must be taken to ensure that such sets are not split between the training and validation sets. Given the size of our dataset, we used five-fold cross-validation to validate the effectiveness of our algorithm. In each fold, the validation set is a randomly chosen subset containing around 20% of the cases. All images used in training and validation were double-checked and confirmed by experienced dermatologists (Table 1).


All images were processed as follows before being stored in the database and used in further experiments:

Step 1: data cleaning

First, the originally collected images were organized into cases for future use. After this step, all review and examination work (for example, deleting duplicate cases and removing blurred or distant images) was performed per case rather than per individual image.

Step 2: data structuring and standardization

Second, the information for these cases had to be managed within an established structure and labeled with a uniform descriptive language. We defined a series of standard descriptions for both images and cases along several dimensions.

For each case, three groups of information describe the case in detail.

- Upload information: who uploaded the case, when and how it was uploaded, which organization it belongs to, etc.

- Case information: case number, patient ID, patient name, gender, original diagnosis, etc.

- Review information: review status and the expert who gave the final diagnosis.

For each individual image, in addition to the case information, two further groups of information describe the image in detail.

- Disease information: description of the lesion appearing in each image, remarks recorded by physicians, etc.

- Picture information: picture type, Secure Hash Algorithm 1 (SHA-1) digest of each image, shooting time, picture size, etc.

Implementation details

We implemented our model with PyTorch 1.1. Training and validation were conducted on an 18-core Intel® Xeon® E5-2697 CPU and four NVIDIA RTX 2080 Ti GPUs.

Training algorithm

We used Google’s EfficientNet-b4 with weights pre-trained on ImageNet, which achieved 96.3% top-5 accuracy on the 2015 ImageNet dataset of about 1.28 million images in 1,000 object categories (13). The final fully connected classification layer was replaced with a layer of 3 output neurons. We also added 7 auxiliary classifiers, one at the end of each intermediate layer, so that the model learns classification information from features at different levels. The modified model was retrained on our own dataset of 4,740 dermatoscope images categorized into 3 classes.

Our modified EfficientNet-b4 architecture is shown in Figure 2. Data flow is from left to right: a skin image is fed into the network and transformed into a probability distribution over the clinical classes of skin disease.

Our network is implemented in PyTorch. The global learning rate is initially 0.01 and decays by a factor of 0.1 every 20 epochs. We use mini-batch gradient descent with momentum 0.9 as the optimizer. During training, each image is resized to 380×380 pixels in RGB channels, which is the input size for which EfficientNet-b4 is optimized. In each epoch, each image is randomly rotated by an angle between −30 and 30 degrees and is flipped vertically and horizontally, each with 50% probability.
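The learning rate schedule described above can be written as a small function. This is a sketch under the assumption that epochs are zero-indexed and that the decay is applied at epochs 20, 40, and so on; the function and parameter names are ours. In PyTorch, a schedule of this shape is typically obtained with `torch.optim.SGD(..., lr=0.01, momentum=0.9)` together with `torch.optim.lr_scheduler.StepLR(optimizer, step_size=20, gamma=0.1)`, although the text does not state which classes were used.

```python
def step_decay_lr(epoch: int, base_lr: float = 0.01,
                  gamma: float = 0.1, step_size: int = 20) -> float:
    """Learning rate at a zero-indexed epoch under step decay.

    The rate starts at base_lr and is multiplied by gamma once every
    step_size epochs (assumed to happen at epochs 20, 40, ...).
    """
    return base_lr * gamma ** (epoch // step_size)


if __name__ == "__main__":
    for epoch in (0, 19, 20, 40):
        print(epoch, step_decay_lr(epoch))
```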

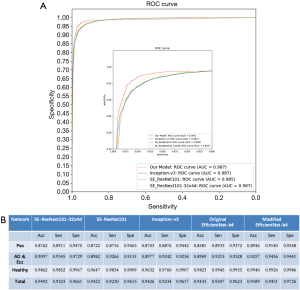

ROC curve

We draw ROC curves with the false positive rate (1 − specificity) on the x-axis and the true positive rate (sensitivity) on the y-axis, as shown in Figure 3A, which shows the average performance of the five-fold models on the entire dataset. ROC curves represent the relation between the true positive rate (TPR) and the false positive rate (FPR). The area under the ROC curve (AUC) reflects the overall accuracy of each model. Although the AUCs of the different models are similar, our model has an advantage in the high-sensitivity range.

TPR = true positive/(true positive + false negative) = sensitivity

FPR = false positive/(false positive + true negative) = 1 − specificity
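To make the TPR and FPR definitions concrete, the following sketch computes ROC points and the AUC for a single binary (one-vs-rest) problem. It is illustrative only: the function names are ours, the AUC is obtained with the trapezoidal rule, and both classes are assumed to be present in the labels. It is not the evaluation code used in this study.

```python
def roc_curve_points(scores, labels):
    """Return (FPR, TPR) points, one per distinct score threshold.

    scores: predicted probability of the positive class.
    labels: 1 for positive, 0 for negative (both must occur).
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    # Visit samples from the highest to the lowest score.
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    for i, (score, label) in enumerate(ranked):
        if label == 1:
            tp += 1
        else:
            fp += 1
        # Emit a point only after the last sample sharing this score.
        is_last = i == len(ranked) - 1
        if is_last or ranked[i + 1][0] != score:
            points.append((fp / negatives, tp / positives))
    return points


def auc(points):
    """Area under a piecewise-linear curve by the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area
```

For a 3-class model, one such curve can be drawn per class by treating that class as positive and the other two as negative.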

Inference algorithm

The raw outputs of our classifiers (7 auxiliary classifiers plus 1 final classifier) are summed over the 3 classification neurons, and argmax is then used to find the most likely class of the input image. The formula is as follows:

Prediction = argmax(output_1 + output_2 + … + output_8)

where output_i is the 3-dimensional classification vector of the i-th classifier, and the sum of the 8 classification vectors is the element-wise sum.
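The sum-then-argmax rule described above can be sketched in a few lines. The function name and the example values are hypothetical, and the mapping of indices to classes (here 0 = Pso, 1 = AD & Ecz, 2 = healthy) is an assumption made for the example.

```python
def predict_class(outputs):
    """Sum classifier outputs element-wise and return (argmax index, summed vector).

    outputs: list of per-classifier vectors, e.g. 8 vectors of length 3.
    """
    num_classes = len(outputs[0])
    # Element-wise sum across all classifiers.
    summed = [sum(vec[k] for vec in outputs) for k in range(num_classes)]
    # Index of the largest summed score.
    best = max(range(num_classes), key=lambda k: summed[k])
    return best, summed


if __name__ == "__main__":
    # Hypothetical outputs: 7 auxiliary classifiers plus 1 final classifier.
    auxiliary = [[0.2, 0.5, 0.3]] * 7
    final = [[0.9, 0.05, 0.05]]
    print(predict_class(auxiliary + final))
```

In this made-up example the final classifier alone would choose class 0, but the summed vote of all 8 classifiers selects class 1, which shows how the auxiliary classifiers can influence the prediction.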

Formulas for sensitivity, specificity and accuracy

Sensitivity = true positive/positive

Specificity = true negative/negative

Accuracy = (true positive + true negative)/(positive + negative)

In the multi-class setting, sensitivity and accuracy are the more important metrics to report.
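For a 3-class problem, these quantities can be computed per class in a one-vs-rest fashion from a confusion matrix. The sketch below assumes that rows are true classes and columns are predicted classes; the function name and the example counts are hypothetical and are not results from this study.

```python
def one_vs_rest_metrics(confusion, k):
    """Sensitivity, specificity and accuracy for class k.

    confusion[i][j] counts images of true class i predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    tp = confusion[k][k]
    fn = sum(confusion[k]) - tp                        # class k, predicted otherwise
    fp = sum(confusion[i][k] for i in range(n)) - tp   # other classes, predicted k
    tn = total - tp - fn - fp
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / total
    return sensitivity, specificity, accuracy


if __name__ == "__main__":
    # Hypothetical counts for (Pso, AD & Ecz, healthy).
    confusion = [[8, 2, 0],
                 [1, 9, 0],
                 [0, 0, 10]]
    print(one_vs_rest_metrics(confusion, 0))
```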

Confusion matrices

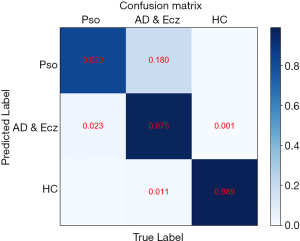

The confusion matrix of our method over the 3 classes under our validation strategy. The confusion matrices for the CNN on the test task reveal that the CNN confuses AD & Ecz with Pso.

Use of human subjects

All human studies were approved by the ethics committee of the Second Xiangya Hospital of Central South University (No. GF2017040177). All human subjects participated with informed consent. Written informed consent was obtained from the patients for publication of this study and any accompanying images. A copy of the written consent is available for review by the Editor-in-Chief of this journal.

Results

The preparation of artificial intelligence dermatology diagnosis assistant (AIDDA)

This platform, AIDDA (Figure 2A), provides image-based diagnostic functionality built on the CNN model EfficientNet (https://github.com/lukemelas/EfficientNet-PyTorch.git). EfficientNets are a family of image classification models developed using AutoML and compound scaling: a simple yet highly effective compound scaling method scales up a mobile-size baseline network for better performance while maintaining its efficiency. EfficientNets achieve state-of-the-art accuracy on ImageNet and five commonly used transfer learning datasets (13,14), while having an order of magnitude fewer parameters and FLOPS than previous networks. Considering the speed-accuracy trade-off, we chose EfficientNet-b4 as the backbone for this skin disease classification task. As our experiments show, it outperforms other ConvNets, including SE-ResNeXt101-32x4d, SE-ResNet101 (15) and Inception-v3 (16).

In this study, we collected skin images from healthy people and from patients with Pso, AD and Ecz (Figure 1, Table 1) at the Second Xiangya Hospital, Central South University, China. The great majority of images were of typically exposed skin areas such as the face and hands. Our dataset comprised 4,740 dermatologist-labelled images in 3 categories, namely Pso, AD & Ecz, and healthy. We validated the effectiveness of the algorithm using five-fold cross-validation. To this end, we split our dataset into five parts by case, each part being a disjoint subset of the whole dataset. We used stratified sampling to ensure that the proportions of samples in each category were similar to those in the original dataset.
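A case-level stratified split of this kind can be sketched as follows. This is a simplified illustration rather than our production code: the function names are hypothetical, each case is assumed to carry a single class label, and stratification is done on case counts rather than image counts.

```python
import random
from collections import defaultdict


def stratified_case_folds(case_labels, n_folds=5, seed=0):
    """Assign each case to one of n_folds folds, stratified by label.

    case_labels: dict mapping case ID -> class label.
    Returns a dict mapping case ID -> fold index.
    """
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for case_id, label in sorted(case_labels.items()):
        by_label[label].append(case_id)
    fold_of = {}
    for label in sorted(by_label):
        cases = by_label[label]
        rng.shuffle(cases)
        # Deal cases round-robin so every fold gets a similar share per class.
        for position, case_id in enumerate(cases):
            fold_of[case_id] = position % n_folds
    return fold_of


def split_images(image_cases, fold_of, val_fold):
    """Split image indices into training and validation by their case's fold.

    image_cases[i] is the case ID of image i, so all images of a case
    always land on the same side of the split.
    """
    train = [i for i, c in enumerate(image_cases) if fold_of[c] != val_fold]
    val = [i for i, c in enumerate(image_cases) if fold_of[c] == val_fold]
    return train, val
```

Because folds are assigned to cases rather than to images, multiple views of the same lesion can never appear in both the training and the validation set of a fold.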

The selection of CNN models based on ROC analysis

The ROC curves of our model, Inception V3, SE_ResNet101, and SE_ResNeXt101-32x4d are shown in Figure 3A, which shows the average performance of the five-fold models on the entire dataset. ROC curves represent the relation between TPR and FPR, and the AUC reflects the overall accuracy of each model. The AUCs for our model, Inception V3, SE_ResNet101, and SE_ResNeXt101-32x4d were 0.987, 0.987, 0.985 and 0.987, respectively. The corresponding sensitivity and specificity were 97.26% and 95.89%, 96.17% and 94.26%, 96.15% and 94.22%, and 96.61% and 94.92%. Although the AUCs of the different models are similar, our model has an advantage in both sensitivity and specificity.

The performance of CNN on inflammatory disease diagnosis

The developed CNN model was used to classify each image into one of the 3 given categories. Our model had an overall accuracy of 95.80%±0.09%, with a sensitivity of 94.40%±0.12% and a specificity of 97.20%±0.06% (mean ± SD). As shown in Figure 3B, for Pso the CNN achieved an accuracy of 89.46%, with a sensitivity of 91.4% and a specificity of 95.48%; for AD & Ecz, it achieved an accuracy of 92.57%, with a sensitivity of 94.56% and a specificity of 94.41%. For healthy skin (HC), the accuracy was even higher: 99.40%, with a sensitivity of 99.26% and a specificity of 99.86%.

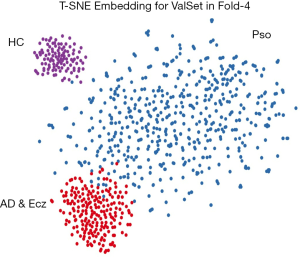

t-SNE analysis and confusion matrix

We also examined the internal features learned by the CNN using t-distributed stochastic neighbor embedding (t-SNE). In these visualizations, each point represents a skin lesion image from the validation set, and each cluster groups images with similar clinical features that belong to one of the 3 categories (Figure 4). The 3 easily distinguishable clusters clearly correspond to the 3 dermatologist-determined categories. We also present a confusion matrix in Figure 5, which shows that the developed model occasionally confused AD & Ecz images with Pso (18% probability).

Conclusions

An AI-based smartphone medical application was reported in 2017 by Bain and colleagues (17), who used a smartphone’s camera and a neural network computer vision algorithm to visually identify the patient with schizophrenia and the drug, and to confirm ingestion. Another example comes from the field of diabetes, in which Shao and colleagues used a smartphone to control engineered cells that maintain semiautomatic glucose homeostasis in diabetic mice (18), shedding light on the application of smartphone platforms in the management of chronic diseases. Sharing the same convenience, the smartphone platform in our study speeds up the diagnostic process and puts it within easy reach.

In this study, we demonstrate that deep learning can be effectively applied in dermatology beyond melanoma diagnosis. In particular, our work highlights that CNNs are able to differentiate among multiple inflammatory skin conditions such as Pso and AD & Ecz. Our AIDDA smartphone app for this CNN is publicly available to all doctors in China, and more than 7,000 doctors have already registered for it. User data suggest that more than 100,000 doctor-taken images have been submitted to the app to help in the diagnostic process, so AIDDA is already having an impact in the health system. This tool helps with diagnosis and can help prevent missed diagnoses, and it should be especially helpful for inexperienced younger doctors and doctors in under-developed areas. Its ongoing use will continue to test the performance of this CNN in real-world practice, and we anticipate future improvements to its diagnostic accuracy, sensitivity, and specificity. Furthermore, combining AIDDA with results from biopsy and laboratory tests may make it a more accurate diagnostic tool, an approach that has proven effective in a pediatric clinical study (19). Given that our system achieves performance comparable or apparently superior to that of dermatologists in diagnosing inflammatory skin diseases, it seems clear that smartphones equipped with deep learning-based apps will continue to benefit doctors in real-world clinical practice in dermatology and likely in many other types of human disorders.

Acknowledgments

Funding: This work was supported by the National Natural Science Foundation of China (No. 81830097, No. 81972943, No. 81861138016), the Key Research and Development Program of Hunan province (2018XK2304) and Hunan Talent Young Investigator (No. 2019RS2012).

Footnote

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at http://dx.doi.org/10.21037/atm.2020.04.39). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. All human studies were approved by the ethics committee of the Second Xiangya Hospital of Central South University (No. GF2017040177). Written informed consent was obtained from the patients for publication of this study and any accompanying images.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

1. Bi X, Wang H. Early Alzheimer's disease diagnosis based on EEG spectral images using deep learning. Neural Netw 2019;114:119-35.

2. Brosch T, Tam R; Alzheimer's Disease Neuroimaging Initiative. Manifold learning of brain MRIs by deep learning. Med Image Comput Comput Assist Interv 2013;16:633-40.

3. Prasoon A, Petersen K, Igel C, Lauze F, Dam E, Nielsen M. Deep feature learning for knee cartilage segmentation using a triplanar convolutional neural network. Med Image Comput Comput Assist Interv 2013;16:246-53.

4. Brosch T, Tang LY. Deep 3D Convolutional Encoder Networks With Shortcuts for Multiscale Feature Integration Applied to Multiple Sclerosis Lesion Segmentation. IEEE Trans Med Imaging 2016;35:1229-39.

5. Cheng JZ, Ni D, Chou YH, et al. Computer-Aided Diagnosis with Deep Learning Architecture: Applications to Breast Lesions in US Images and Pulmonary Nodules in CT Scans. Sci Rep 2016;6:24454.

6. Gulshan V, Peng L, Coram M, et al. Development and Validation of a Deep Learning Algorithm for Detection of Diabetic Retinopathy in Retinal Fundus Photographs. JAMA 2016;316:2402-10.

7. Esteva A, Kuprel B, Novoa RA, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017;542:115-8.

8. Haenssle HA, Fink C, Schneiderbauer R, et al. Man against machine: diagnostic performance of a deep learning convolutional neural network for dermoscopic melanoma recognition in comparison to 58 dermatologists. Ann Oncol 2018;29:1836-42.

9. Masood A, Al-Jumaily AA. Computer aided diagnostic support system for skin cancer: a review of techniques and algorithms. Int J Biomed Imaging 2013;2013:323268.

10. Burroni M, Corona R, Dell'Eva G, et al. Melanoma computer-aided diagnosis: reliability and feasibility study. Clin Cancer Res 2004;10:1881-6.

11. Erickson BJ. Machine Learning: Discovering the Future of Medical Imaging. J Digit Imaging 2017;30:391.

12. Fishbein AB, Silverberg JI, Wilson EJ, et al. Update on Atopic Dermatitis: Diagnosis, Severity Assessment, and Treatment Selection. J Allergy Clin Immunol Pract 2020;8:91-101.

13. Tan M, Le QV. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. ICML, 2019.

14. Russakovsky O, Deng J, Su H, et al. Imagenet large scale visual recognition challenge. Int J Comput Vis 2015;115:211-52.

15. Hu J, Shen L, Albanie S, et al. Squeeze-and-Excitation Networks. Proceedings of the IEEE conference on computer vision and pattern recognition 2018:7132-41.

16. Szegedy C, Vanhoucke V, Ioffe S, et al. Rethinking the inception architecture for computer vision. Proceedings of the IEEE conference on computer vision and pattern recognition 2016:2818-26.

17. Bain EE, Shafner L, Walling DP, et al. Use of a Novel Artificial Intelligence Platform on Mobile Devices to Assess Dosing Compliance in a Phase 2 Clinical Trial in Subjects With Schizophrenia. JMIR Mhealth Uhealth 2017;5:e18.

18. Shao J, Xue S, Yu G, et al. Smartphone-controlled optogenetically engineered cells enable semiautomatic glucose homeostasis in diabetic mice. Sci Transl Med 2017.

19. Liang H, Tsui BY, Ni H, et al. Evaluation and accurate diagnoses of pediatric diseases using artificial intelligence. Nat Med 2019;25:433-8.