Automatic classification of heterogeneous slit-illumination images using an ensemble of cost-sensitive convolutional neural networks

Introduction

Optical imaging technologies play a vital role in the clinical diagnosis and treatment of ophthalmic diseases (1,2). Computer vision approaches for the automatic diagnosis of lens opacity have greatly improved the efficiency of ophthalmologists and of the entire treatment chain, providing real benefits for patients (3-6). In our previous studies, we applied artificial intelligence methods to detect cataracts and to grade lens opacity based on diffuse-light ocular images (7-9). However, grading lens opacity solely from diffuse-light images may be imprecise because the lens is a three-dimensional object (10-12). The commonly used slit-illumination image offers another effective diagnostic medium and provides an essential supplement to diffuse-light images (13,14). Therefore, developing computer vision techniques for slit-illumination images will move the automatic diagnosis of ophthalmic diseases towards a more comprehensive and intelligent strategy.

Existing computer-aided diagnosis methods based on slit-illumination images generally focus on senile cataracts (3-5,15). Thresholding-based localization and support vector regression were used to grade nuclear cataracts (16), and recursive convolutional neural networks (CNNs) combined with support vector regression were used to learn features automatically for evaluating the severity of nuclear cataracts (17). However, the phenotypes of senile cataracts are relatively simple and fairly homogeneous, so studying them alone is not sufficient for developing a computer-aided diagnosis system for lens opacity in complex clinical scenarios. Practical clinical applications require the ability to diagnose heterogeneous lens opacities with high recognition rates (18-20). It is therefore essential to develop an efficient, feasible, and automatic diagnostic system for heterogeneous slit-illumination images.

Pediatric cataract is a typical lens opacity disease characterized by marked heterogeneity and complex phenotypes (21-23). Large-scale slit-illumination images of pediatric cataracts, covering a wide variety of lens opacities, were collected through the long-term Childhood Cataract Program of the Chinese Ministry of Health (CCPMOH) project (24). In addition, class imbalance is an inevitable problem in pediatric cataract diagnosis (21,25): positive samples are far fewer than negative samples, which biases classifiers towards the majority class and raises the false-negative rate. These datasets therefore represent an ideal medium for exploring computer vision methods suited to complex clinical application scenarios.

Recently, CNNs (26-28) and CNN-based ensemble learning methods (29-32) have shown great promise in the automatic diagnosis of a wide range of diseases from medical images; voting, averaging, and batch random selection are common ensemble techniques. To develop an effective and efficient computer vision method for these heterogeneous slit-illumination images, we integrated three deep CNNs with different structures (AlexNet, GoogLeNet, and ResNet50) (26-28) and a cost-sensitive algorithm (33,34) into an ensemble learning framework, creating the CCNN-Ensemble model (ensemble of cost-sensitive CNNs). The three structurally different CNNs were used to improve both the overall recognition rate and the stability of the model, and the cost-sensitive algorithm was used to address the imbalanced dataset problem and thus significantly reduce the model's false-negative rate. We performed detailed experiments comparing the CCNN-Ensemble method with conventional methods on three grading indices of lens opacity. We also used two external datasets (an independent testing dataset and an Internet-based dataset) to validate the method's versatility and stability. Finally, computer-aided diagnostic software was developed and deployed for use by ophthalmologists and their patients in clinical applications.

We present the following article in accordance with the STARD reporting checklist (available at http://dx.doi.org/10.21037/atm-20-6635).

Methods

Dataset

The slit-illumination data consist of three parts: the training and validation dataset, the independent testing dataset, and the Internet-based dataset. A total of 470 training and validation images were derived from routine examinations performed between June 2015 and February 2020 at Zhongshan Ophthalmic Center of Sun Yat-sen University (Figure 1A) (24). A total of 132 independent testing images were selected randomly in advance from Zhongshan Ophthalmic Center, and 79 Internet-based images were collected through keyword searches (using terms such as congenital cataract, infant, and pediatric) on the Baidu and Google search engines. In total, there were 470 individuals in the training and validation dataset and 132 individuals in the independent testing dataset. All individuals underwent slit lamp-adapted anterior segment photography (BX900; Haag-Streit AG, Koniz, Switzerland), with the slit-beam width set within a narrow range (1 to 2 mm). The age of the subjects in the training, validation, and independent testing datasets was 18.96±10.61 months (mean ± SD). The study was approved by the Ethics Committee of Zhongshan Ophthalmic Center of Sun Yat-sen University (NO.: 2017KYPJ096) and adhered to the tenets of the Declaration of Helsinki (as revised in 2013). Written informed consent was obtained from all the study participants’ parents or legal guardians.

There is no specific resolution requirement for the enrolled images, provided that the lens area is retained. To ensure labeling accuracy, three senior ophthalmologists jointly determined the grade of each image by evaluating its severity in terms of three lens lesion indices (opacity area, density, and location) (7,9). An opacity that covers more than half of the pupil is defined as extensive; otherwise, it is defined as limited. An opacity that completely blocks the light is defined as dense; otherwise, it is defined as transparent. An opacity that fully covers the visual axis of the pupil is defined as central; otherwise, it is defined as peripheral. The collected datasets covered a variety of pediatric cataracts, which were accordingly divided into limited and extensive categories for area, dense and transparent categories for density, and central and peripheral categories for location, as shown in Table 1.
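The three binary grading rules above can be restated as a small decision function. This is only an illustrative sketch: in the study the grades were assigned jointly by three senior ophthalmologists through visual assessment, and the input fields (`pupil_fraction_covered`, `blocks_light_completely`, `covers_visual_axis`) are hypothetical quantities standing in for their judgments, not measurements produced by the authors' pipeline.

```python
from dataclasses import dataclass


@dataclass
class OpacityObservation:
    """Hypothetical per-image judgments (illustrative names, not from the paper)."""
    pupil_fraction_covered: float  # fraction of the pupil covered by opacity, 0 to 1
    blocks_light_completely: bool  # True if the opacity completely blocks the light
    covers_visual_axis: bool       # True if the opacity fully covers the visual axis


def grade(obs: OpacityObservation) -> dict:
    """Apply the three binary definitions of area, density, and location."""
    return {
        # "more than half of the pupil" -> extensive; exactly half stays limited
        "area": "extensive" if obs.pupil_fraction_covered > 0.5 else "limited",
        "density": "dense" if obs.blocks_light_completely else "transparent",
        "location": "central" if obs.covers_visual_axis else "peripheral",
    }
```

For example, `grade(OpacityObservation(0.7, True, False))` labels the image as extensive, dense, and peripheral.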


Preprocessing and model evaluation

We preprocessed all labeled images by applying Canny edge detection and the Hough transform twice (35,36) to locate the lens region of interest and eliminate surrounding noise zones such as the eyelids and the sclera (Figure 1A). The detailed procedures of automatic lens cropping are consistent with our previous research (7,9). The localized images were then resized to 256×256 pixels and input into the computer vision models. Using the training and validation datasets, we performed five-fold cross-validation to compare and evaluate the performance of the different models (Figure 1B). Four representative handcrafted features (WT: wavelet transformation; LBP: local binary pattern; SIFT: scale-invariant feature transform; and COTE: color and texture features) (8,9,37-39) were combined with support vector machine (SVM) and adaptive boosting (Adaboost) classifiers for comparison. In addition, three single-classifier CNNs (AlexNet, GoogLeNet, and ResNet50) and two common CNN-based ensemble learning methods (Ave-Ensemble and Ave-BRS-3ResNet) were compared with the CCNN-Ensemble. Ave-Ensemble is an averaging ensemble that averages the class probabilities output by AlexNet, GoogLeNet, and ResNet50 to obtain the final classification result. Ave-BRS-3ResNet is an ensemble of three ResNet50 networks that uses batch random selection and averaging. After selecting the optimal CCNN-Ensemble model, we further verified its effectiveness and stability on the two external datasets (the independent testing dataset and the Internet-based dataset).
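The averaging rule used by the Ave-Ensemble baseline can be sketched in a few lines of NumPy. The sketch assumes that each CNN already outputs an (images × classes) array of softmax probabilities; the function name `ave_ensemble` is hypothetical, and this is not the authors' Caffe implementation.

```python
import numpy as np


def ave_ensemble(prob_alexnet, prob_googlenet, prob_resnet50):
    """Average the class probabilities of three CNNs and take the arg-max.

    Each input is an (n_images, n_classes) array of softmax outputs.
    Returns the averaged probabilities and the predicted class index per image.
    """
    stacked = np.stack([prob_alexnet, prob_googlenet, prob_resnet50])  # (3, n, k)
    mean_prob = stacked.mean(axis=0)                                   # (n, k)
    return mean_prob, mean_prob.argmax(axis=1)
```

Because every network contributes equally, one confident but wrong network can be outvoted by the other two, which is the behavior the CCNN-Ensemble's learned fusion layer is compared against.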

Statistical analysis

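To assess the CCNN-Ensemble method comprehensively against the conventional methods, we calculated several evaluation metrics, including accuracy, sensitivity, specificity, F1-measure, and G-mean, as follows:

$$\text{Accuracy} = \frac{TP + TN}{TP + FP + TN + FN}$$

$$\text{Sensitivity} = \frac{TP}{TP + FN}$$

$$\text{Specificity} = \frac{TN}{TN + FP}$$

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{F1-measure} = \frac{2 \times \text{Precision} \times \text{Sensitivity}}{\text{Precision} + \text{Sensitivity}}$$

$$\text{G-mean} = \sqrt{\text{Sensitivity} \times \text{Specificity}}$$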

where TP, FP, TN, and FN denote the numbers of true positives, false positives, true negatives, and false negatives, respectively. Accuracy, sensitivity, and specificity are the most commonly used evaluation measures. The G-mean (40) combines sensitivity and specificity, and the F1-measure combines precision and sensitivity; both are therefore less dominated by the majority class than accuracy and better reflect the recognition ability of a model on an imbalanced dataset. In addition, the receiver operating characteristic (ROC) curve, the area under the ROC curve (AUC), and the precision-recall (PR) curve were used for visual comparison and analysis. Five-fold cross-validation was used to calculate the mean and standard deviation of each metric. All statistical analyses were conducted using Python 3.7.8.
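A minimal sketch of these computations, assuming one confusion matrix per cross-validation fold. The helper names are hypothetical, and the use of the sample standard deviation (`statistics.stdev`) is an assumption, since the text does not say which estimator was used. The functions raise `ZeroDivisionError` if a fold has no positive samples or no positive predictions.

```python
import math
from statistics import mean, stdev


def binary_metrics(tp, fp, tn, fn):
    """Compute the five evaluation metrics from one confusion matrix."""
    sen = tp / (tp + fn)                    # sensitivity (recall of positive class)
    spe = tn / (tn + fp)                    # specificity
    acc = (tp + tn) / (tp + fp + tn + fn)   # accuracy
    precision = tp / (tp + fp)
    f1 = 2 * precision * sen / (precision + sen)
    g_mean = math.sqrt(sen * spe)
    return {"ACC": acc, "SEN": sen, "SPE": spe, "F1": f1, "G-mean": g_mean}


def summarize_folds(per_fold):
    """Return (mean, sample SD) of each metric across cross-validation folds."""
    return {
        key: (mean([fold[key] for fold in per_fold]),
              stdev([fold[key] for fold in per_fold]))
        for key in per_fold[0]
    }
```

For instance, `binary_metrics(tp=45, fp=5, tn=40, fn=10)` gives ACC 0.85, SEN ≈ 0.818, SPE ≈ 0.889, F1 ≈ 0.857, and G-mean ≈ 0.853; passing five such dictionaries to `summarize_folds` yields the mean ± SD format used in the tables.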

Overall framework of CCNN-Ensemble

As shown in Figure 2, the overall diagnosis framework of the CCNN-Ensemble consists primarily of three deep CNN models (GoogLeNet, AlexNet, and ResNet50), a cost-sensitive adjustment layer, ensemble learning, dataset augmentation technology, and transfer learning. The three heterogeneous CNN models, as classifiers, were employed to construct the ensemble learning framework to enhance the recognition rates of the algorithms. The cost-sensitive adjustment layer was used to manage the imbalanced dataset problem, and the dataset augmentation and transfer learning processes were adopted to overcome the overfitting problem and accelerate model convergence. The technical details are described below.

Ensemble learning of multiple heterogeneous CNNs

We used three heterogeneous CNNs (AlexNet, GoogLeNet, and ResNet50) to form the ensemble learning framework (Figure 2). AlexNet, proposed by Krizhevsky et al. (26), won first place in image classification at the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) in 2012; it is an eight-layer CNN built mainly from convolutional layers, overlapping pooling, nonsaturating rectified linear units (ReLUs), and three fully connected layers. Many variant CNNs were subsequently proposed that improved its recognition rate by incorporating emerging techniques. In particular, Google researchers developed a 22-layer Inception network (GoogLeNet) (27) based on the Hebbian principle, the intuition of multi-scale processing, filter aggregation, average pooling, and auxiliary classifiers. Kaiming He et al. then used residual connections, batch normalization, and scale operations to build a 50-layer ultra-deep residual CNN (ResNet50) (28). Because these CNNs rely on different principles and techniques, their network structures are distinctly heterogeneous, which can effectively improve the recognition rate of the ensemble learning model.

To fully exploit the advantages of the three CNNs, we implemented a two-stage ensemble learning scheme. In the first stage, starting from parameters pre-trained on the ImageNet dataset, each of the three CNNs was trained separately using transfer learning to obtain its optimal parameters. In the second stage, the Softmax layers of the three CNNs were removed, and their high-level features were merged into a single cost-sensitive Softmax classification function to construct a unified ensemble CNN. The learning rate of the feature extraction layers was set to one-tenth of that of the ensemble learning layer, so that transfer learning fully trained the ensemble learning layer while fine-tuning the preceding feature extraction layers. Through this two-stage scheme, the three types of CNNs can compensate for one another's shortcomings, which helps improve the overall performance of intelligent diagnosis of pediatric cataract.
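The second stage can be pictured as one linear layer applied to the concatenated high-level features of the three backbones, whose output then feeds the cost-sensitive Softmax. The NumPy sketch below assumes feature sizes of 4096 (AlexNet fc7), 1024 (GoogLeNet global pooling), and 2048 (ResNet50 global pooling); these are the standard sizes of those layers but are not stated in the paper, and the function names are hypothetical. The learning-rate constants only record the one-tenth ratio; in Caffe such a ratio would typically be expressed through per-layer `lr_mult` settings.

```python
import numpy as np

# Assumed feature sizes of the layer taken from each backbone (not from the paper).
FEATURE_DIMS = {"alexnet": 4096, "googlenet": 1024, "resnet50": 2048}

BASE_LR = 0.001              # learning rate of the ensemble (fusion) layer
BACKBONE_LR = BASE_LR / 10   # feature-extraction layers: one-tenth of it


def init_fusion_layer(num_classes=2, seed=0):
    """Randomly initialize the weights of the single fusion layer."""
    rng = np.random.default_rng(seed)
    total_dim = sum(FEATURE_DIMS.values())
    W = rng.normal(0.0, 0.01, size=(total_dim, num_classes))
    b = np.zeros(num_classes)
    return W, b


def fused_logits(features, W, b):
    """Concatenate per-CNN features (in FEATURE_DIMS order) and apply one linear layer.

    features: list of (batch, dim) arrays, one per backbone.
    Returns (batch, num_classes) logits for the cost-sensitive Softmax.
    """
    for feat, (name, dim) in zip(features, FEATURE_DIMS.items()):
        if feat.shape[1] != dim:
            raise ValueError(f"{name} features must have {dim} columns")
    z = np.concatenate(features, axis=1)   # (batch, 4096 + 1024 + 2048)
    return z @ W + b
```

A single shared classifier lets the training signal decide how much each backbone's features contribute, rather than fixing equal weights as plain averaging does.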

Transfer learning

Because the number of available medical images is small, a deep network trained from scratch cannot adequately optimize its millions of trainable parameters and easily overfits. Transfer learning (41,42) is a critical technique for such small datasets: it allows the model to start training from a better initial point and to reuse the color, texture, and shape characteristics already learned from natural images. Fine-tuning allowed the final CNN model to learn the features specific to ophthalmic images while mitigating overfitting. Additionally, data augmentation, including image translations and horizontal reflections (26,43), was adopted to accelerate model convergence.
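Translation and horizontal-reflection augmentation in the AlexNet style (random crops plus mirroring) can be sketched with NumPy as follows. The 224×224 crop size and the 0.5 flip probability are assumptions; the text specifies only the 256×256 input size and the two kinds of augmentation, and the function name `augment` is hypothetical.

```python
import numpy as np


def augment(image, crop=224, rng=None):
    """Random translation (via a random crop) plus random horizontal reflection.

    image: (H, W, C) array, e.g. a 256x256 lens crop.
    crop:  side length of the square patch (assumed value, not from the paper).
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = image.shape[:2]
    if crop > h or crop > w:
        raise ValueError("crop size exceeds image size")
    top = rng.integers(0, h - crop + 1)     # random vertical offset
    left = rng.integers(0, w - crop + 1)    # random horizontal offset
    patch = image[top:top + crop, left:left + crop]
    if rng.random() < 0.5:
        patch = patch[:, ::-1]              # mirror left-right
    return patch
```

With a 256×256 input and a 224×224 crop, each image yields 33 × 33 offsets times 2 reflections, i.e. 2,178 distinct views.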

Cost-sensitive method and optimization process

To address the imbalanced dataset problem of the slit-illumination images effectively, a cost-sensitive approach (33,34,44) was adopted to adjust the weight of the positive samples in the loss function (Figure 2). Specifically, we assigned different misclassification costs to the different classes, with a larger cost-sensitive weight for the positive class. In each training iteration, n samples were randomly selected to form a training set {[x(1), y(1)], [x(2), y(2)], ..., [x(n), y(n)]}, where $x^{(i)} \in \mathbb{R}^{m}$ and $y^{(i)} \in \{1, 2, \ldots, k\}$. Here, x(i) denotes the features of the i-th sample and y(i) is its category label. The cost-sensitive loss function can be expressed as shown in Eq. [7]:

$$F(\theta) = -\frac{1}{n}\sum_{i=1}^{n}\sum_{j=1}^{k} CS\{y^{(i)} = \text{positive class}\}\, I\{y^{(i)} = j\} \log\frac{e^{\theta_j^{T}x^{(i)}}}{\sum_{l=1}^{k} e^{\theta_l^{T}x^{(i)}}} + \frac{\lambda}{2}\sum_{j=1}^{k}\sum_{p=1}^{m}\theta_{jp}^{2} \qquad [7]$$

where n, m, k, and θ denote the number of training samples, the number of input neurons, the number of classes, and the trainable parameters, respectively. I{·} is the indicator function (I{y(i) = j} = 1 if y(i) equals j, and 0 otherwise), and CS{·} is the cost-sensitive weight function (CS{y(i) = positive class} = C if y(i) is the positive class label, and 1 if it is the negative class label). Using a grid search, we found that effective values of the cost-sensitive weight C lay in the interval [4, 6].
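A NumPy sketch of the cost-sensitive softmax loss of Eq. [7], including its weight decay term, together with one mini-batch gradient descent update. Labels are zero-based here (0 to k-1) rather than 1 to k, the bias term is omitted to match the equation, and the function names are hypothetical; this is not the authors' Caffe layer.

```python
import numpy as np


def cost_sensitive_softmax_loss(theta, X, y, positive_class, C, weight_decay):
    """Return F(theta) and its gradient for a cost-sensitive softmax classifier.

    theta: (k, m) weights; X: (n, m) inputs; y: (n,) integer labels in [0, k).
    Samples of `positive_class` are weighted by C, all others by 1.
    `weight_decay` is lambda in the (lambda / 2) * sum(theta ** 2) term.
    """
    n, k = X.shape[0], theta.shape[0]
    logits = X @ theta.T                                  # theta_j^T x^(i), shape (n, k)
    logits = logits - logits.max(axis=1, keepdims=True)   # stabilize exp()
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    probs = np.exp(log_probs)

    cs = np.where(y == positive_class, C, 1.0)            # CS{y(i) = positive class}
    onehot = np.eye(k)[y]                                 # I{y(i) = j}

    data_term = -np.sum(cs[:, None] * onehot * log_probs) / n
    decay_term = 0.5 * weight_decay * np.sum(theta ** 2)
    grad = -((cs[:, None] * (onehot - probs)).T @ X) / n + weight_decay * theta
    return data_term + decay_term, grad


def minibatch_gd_step(theta, X_batch, y_batch, lr, positive_class, C, weight_decay):
    """One mini-batch gradient descent update: theta <- theta - lr * grad F(theta)."""
    _, grad = cost_sensitive_softmax_loss(
        theta, X_batch, y_batch, positive_class, C, weight_decay)
    return theta - lr * grad
```

Setting `C=1` recovers the ordinary softmax cross-entropy; a `C` between 4 and 6 makes each misclassified positive sample cost four to six times as much as a negative one, pushing the decision boundary towards fewer false negatives.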

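In Eq. [7], the term $\frac{\lambda}{2}\sum_{j=1}^{k}\sum_{p=1}^{m}\theta_{jp}^{2}$ is a weight decay term that penalizes large trainable weights. To obtain the optimal trainable weights θ* (see Eq. [8]), we minimized F(θ) using mini-batch gradient descent (Mini-batch-GD) (45), as shown in Eq. [9]:

$$\theta^{*} = \arg\min_{\theta} F(\theta) \qquad [8]$$

$$\theta \leftarrow \theta - \alpha \nabla_{\theta} F(\theta) \qquad [9]$$

where α is the learning rate.

Visualization heatmaps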

To verify the reasonableness and effectiveness of the CCNN-Ensemble, the Gradient-weighted Class Activation Mapping (Grad-CAM) (46) visualization technique was used to generate heatmaps highlighting the disease-related regions on which the diagnosis model focused most. Grad-CAM is an explanation technique for CNN-based models that uses the gradients of a target concept flowing into the last convolutional layer to produce a coarse localization map highlighting the image regions that are important for predicting that concept.
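The core Grad-CAM computation (46) can be sketched in NumPy once the last convolutional layer's activations and the gradients of the target class score with respect to them are available. Obtaining those gradients from a trained network and upsampling the map to the 256×256 input are framework-specific steps omitted here; the function name `grad_cam` is hypothetical.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Grad-CAM map for one convolutional layer.

    activations: (K, H, W) feature maps A^k of the last convolutional layer.
    gradients:   (K, H, W) gradients dy^c / dA^k of the target class score y^c.
    Returns an (H, W) map scaled to [0, 1].
    """
    alphas = gradients.mean(axis=(1, 2))              # global-average-pooled gradients
    cam = np.tensordot(alphas, activations, axes=1)   # sum_k alpha_k * A^k
    cam = np.maximum(cam, 0.0)                        # ReLU keeps positive evidence only
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

Applying this separately to the last convolutional layers of AlexNet, GoogLeNet, and ResNet50 would give one heatmap per branch, matching the three heatmaps per image described in the Results.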

Experimental environment

In this study, we implemented dataset preprocessing, automatic lens region of interest (ROI) localization, conventional feature extraction, the SVM and Adaboost classifiers, and uniform dataset partitioning for cross-validation using MATLAB R2014a (8,9). The training, validation, and testing of the three single-classifier CNNs (AlexNet, GoogLeNet, and ResNet50) and the three ensemble learning methods were all performed in parallel on eight Nvidia Titan X graphics processing units (GPUs) using the Caffe toolbox (47) on Ubuntu 16.04. For a fair comparison, after automatic cropping of the lens region, all images were resized to 256×256 pixels before being input into the three single-classifier CNNs and the ensemble learning methods. The initial learning rate was set to 0.001 and reduced to one-tenth of its current value every 500 iterations, for a total of 2,000 iterations. With a mini-batch size of 32 on each of the eight GPUs, each iteration processed 256 samples in total, and the values computed over these samples were averaged to update the trainable parameters. Appropriate settings for these parameters help ensure good performance and rapid convergence of the CCNN-Ensemble method.
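The learning-rate schedule and batch arithmetic described above can be written out as follows. The formula mirrors Caffe's "step" learning-rate policy (base_lr × gamma^floor(iter / stepsize)), which we assume is how the schedule was configured; the constant and function names are hypothetical.

```python
BASE_LR = 0.001      # initial learning rate
GAMMA = 0.1          # multiply the rate by 0.1 ...
STEP_SIZE = 500      # ... every 500 iterations
MAX_ITER = 2000      # total number of iterations
PER_GPU_BATCH = 32   # mini-batch size on each GPU
NUM_GPUS = 8


def step_lr(iteration, base_lr=BASE_LR, gamma=GAMMA, step_size=STEP_SIZE):
    """Step policy: base_lr * gamma ** floor(iteration / step_size)."""
    return base_lr * gamma ** (iteration // step_size)


# Samples contributing to each parameter update across all GPUs.
EFFECTIVE_BATCH = PER_GPU_BATCH * NUM_GPUS
# Total samples processed over the whole run.
TOTAL_SAMPLES = EFFECTIVE_BATCH * MAX_ITER
```

Under these settings the rate is 0.001 for iterations 0 to 499, 0.0001 for 500 to 999, 0.00001 for 1,000 to 1,499, and 0.000001 for 1,500 to 1,999, and the run processes 2,000 × 256 = 512,000 samples in total.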

Results

To develop an effective solution for assisting the diagnosis of pediatric cataracts from slit-illumination images, we explored five categories of models: four conventional feature methods, four Adaboost ensemble methods, three single-classifier CNNs, two conventional CNN-based ensemble learning methods, and the CCNN-Ensemble method. First, we trained and compared these methods on the training and validation datasets to obtain the optimal CCNN-Ensemble model. Then, we used two external datasets to further evaluate the robustness and clinical effectiveness of the CCNN-Ensemble. Finally, we developed and deployed cloud-based software to serve patients located in remote areas.

Performance comparison of CCNN-Ensemble with conventional features and Adaboost ensemble methods

After applying five-fold cross-validation (48), we compared the performance of the nine intelligent algorithms for lens opacity on the three grading indices (opacity area, density, and location). We calculated three main indicators, namely accuracy (ACC), specificity (SPE), and sensitivity (SEN) (Figure 3), along with more detailed results reported as means and standard deviations (Table 2 and Tables S1,S2). First, the conventional feature methods yielded low ACC and SEN values; for example, the SEN of the LBP method was below 70% for all grading indices. Second, the Adaboost ensemble learning methods greatly improved SEN but reduced SPE, so their ACC was almost equal to that of the conventional feature methods (Figure 3). For example, for opacity area grading, the SEN of the SIFT method increased from 76.41% to 84.62%, whereas its SPE decreased from 76.73% to 65.45% (Figure 3 and Table 2); for opacity location grading, the SEN of the LBP method increased from 68.88% to 81.10%, whereas its SPE decreased from 80.27% to 73.34% (Figure 3 and Table S2). The other feature methods and their Adaboost counterparts showed similar results. Third, the CCNN-Ensemble method provided significantly improved recognition rates for all grading indices (Figure 3). All average ACCs were 92% or higher, and both SPE and SEN were satisfactory for grading opacity area (92.00% and 92.31%), opacity density (93.85% and 91.43%), and opacity location (95.25% and 89.29%). Similarly, the F1-measure, G-mean, and AUC indicators all exceeded 90% (Table 2 and Tables S1,S2).


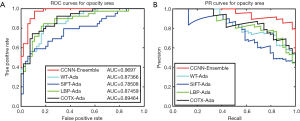

Additionally, we used ROC and PR curves to compare the performance of the above methods (Figure 4, Figures S1,S2). The ROC curve of the CCNN-Ensemble lies close to the upper-left corner of the graph, and its PR curve shows similarly strong performance. The AUC exceeded 0.969 for all three grading indices. These results indicate that the CCNN-Ensemble method is superior to the conventional feature and Adaboost ensemble learning methods.

Performance comparison of CCNN-Ensemble with single-classifier CNNs and conventional ensemble learning based on CNNs

To further verify the superiority of the CCNN-Ensemble method, we conducted comparative experiments with three ensemble learning methods (Ave-Ensemble, Ave-BRS-3ResNet, and CCNN-Ensemble) and three single-classifier CNNs (AlexNet, GoogLeNet, and ResNet50). Detailed results for the three grading indices of lens opacity (opacity area, density, and location) are shown in Table 3 and Supplementary Tables S3,S4. Three conclusions can be drawn from the opacity area grading results. First, all three ensemble learning methods outperformed the three single-classifier CNNs; compared with the best single classifier, ResNet50, the CCNN-Ensemble improved accuracy, specificity, and sensitivity by 3.45%, 2.54%, and 4.62%, respectively. Second, the Ave-Ensemble and the Ave-BRS-3ResNet performed comparably. Third, the CCNN-Ensemble outperformed both the Ave-Ensemble and the Ave-BRS-3ResNet; notably, its sensitivity was 3.08% higher than that of the Ave-Ensemble. Similar conclusions were obtained for the grading of opacity density and location (Tables S3,S4).


Performance in independent testing dataset

To further investigate the generalizability and effectiveness of the CCNN-Ensemble method, we validated it on an independent testing dataset of 132 slit-illumination images selected randomly in advance from Zhongshan Ophthalmic Center (details are given in the Methods section). Using the expert group's decisions as the reference standard, we present detailed quantitative results in Table 4 and a performance comparison, together with the ROC and PR curves for the three grading indices (opacity area, density, and location), in Figure 5A. The performance of the CCNN-Ensemble method on the independent testing dataset was nearly equal to that on the validation dataset: ACC and SPE remained above 93% and 94%, respectively, and SEN values were 90.24%, 89.29%, and 90.63% for grading opacity area, density, and location, respectively.


Performance in Internet-based dataset

In addition, we collected 79 slit-illumination images from the Internet (details are given in the Methods section). Although the quality of these images varied considerably, the CCNN-Ensemble still identified the cases with a high recognition rate. In the same manner, we obtained detailed prediction results (Table 4), comparison graphs for the main indicators (ACC, SPE, and SEN), and the ROC and PR curves (Figure 5B). Specifically, the CCNN-Ensemble method achieved satisfactory accuracy, specificity, and sensitivity for opacity area (89.87%, 89.47%, and 90.00%), opacity density (88.61%, 88.89%, and 88.52%), and opacity location (87.34%, 87.50%, and 87.30%), respectively.

Interpretability analysis of CCNN-Ensemble for opacity area grading

Using the Grad-CAM technique, three heatmaps were obtained simultaneously from the CCNN-Ensemble, associated with AlexNet, GoogLeNet, and ResNet50, respectively. Four representative slit-illumination images from the independent testing dataset for opacity area grading, together with their heatmaps, are displayed in Figure 6. The highlighted regions in each heatmap indicate the opacity areas on which the network based its decision.

Web-based software

To serve both patients and ophthalmologists located in remote areas, we developed and deployed cloud-based automatic diagnosis software (http://www.cc-cruiser.com:5007/SignIn), which includes user registration, image upload, prediction, regular re-examination, sample download, and instruction modules. For evaluation and trial, we provide a test account (user name: ws; password: ws). Before using the website for diagnosis, users must submit personal information, including age, gender, and telephone number, to complete registration. Registration allows doctors to contact patients diagnosed with serious conditions and also prevents illegal use of the software. After registration, either the patient or the ophthalmologist can upload slit-illumination images for diagnosis; the software then performs image preprocessing, makes the three grading predictions, and provides a final treatment recommendation. The software can diagnose multiple images simultaneously. A total of 30 sample images are available for download, and our e-mail address and telephone number are provided to all registered patients.

Discussion

The inferior performance of conventional feature methods when applied to diagnosis using the slit-illumination images is mainly attributed to the following two causes. First, the conventional feature methods use handcrafted descriptors to represent the original images, which are completely reliant on the designer’s experience and operator techniques, and which cannot learn statistical features from the existing large dataset. Second, the conventional feature methods and the SVM classifier do not take the problem of the imbalanced dataset into account, and this results in the final predictions being biased towards the majority class and ignoring the minority class (i.e., the positive samples). Therefore, these methods lead to inferior overall accuracy and lower sensitivity.

The Adaboost ensemble learning methods moderately improved recognition rates compared with the conventional feature methods because they train multiple classifiers and combine them to determine the final grading results. In addition, an under-sampling method is incorporated into Adaboost to address the imbalanced dataset. As a result, sensitivity is greatly enhanced, but at the cost of reduced specificity, so the overall accuracy is almost equal to that of the conventional feature methods alone.

The CCNN-Ensemble method is significantly superior to the above methods on all grading indices, which we attribute to four improvements. First, it requires no manually designed feature descriptors because it learns high-level statistical features directly from the original images. Second, we use three different CNNs for ensemble learning, so that they learn different characteristics from three perspectives and jointly determine the final prediction; this combination of multiple CNNs enhances overall performance. Third, the cost-sensitive approach integrated into the CCNN-Ensemble gives greater weight to the minority class, improving sensitivity on the imbalanced dataset. Fourth, transfer learning allows the trainable parameters to be fine-tuned from a better starting point, making it easier to avoid poor local minima. As a result, high accuracy and specificity are maintained while sensitivity is also greatly enhanced.

All three ensemble learning methods outperformed the three single-classifier CNNs because the multiple classifiers in an ensemble complement one another, so their combined strengths exceed those of any single classifier. Compared with the conventional ensemble learning methods, the CCNN-Ensemble method combines three heterogeneous CNNs and performs two-stage transfer learning to fully optimize the parameters of the three networks, further enhancing ensemble performance. In addition, the heatmaps in Figure 6 show that all three classifiers capture the location of lens opacity, although the highlighted areas differ slightly. The Grad-CAM analysis thus further corroborates the effectiveness of the proposed diagnosis system, and this interpretability supports the acceptance of our CCNN-Ensemble in ophthalmic clinics.

The CCNN-Ensemble method also performed well on the two external datasets, with recognition rates almost equal to those on the validation dataset. This suggests that the proposed approach is insensitive to different data sources and that its generalizability and robustness are better than those of the conventional methods. These experimental results support the application of the CCNN-Ensemble method in complex clinical scenarios.

Based on our proposed method, automated diagnostic software was developed and deployed as a cloud service to serve patients and ophthalmologists remotely, which offers important clinical value for pediatric cataract diagnosis. By accessing the software remotely, any patient can upload slit-illumination images and quickly obtain prediction results and an appropriate treatment recommendation. This remote-aided diagnosis therefore spares doctors tedious examinations and helps patients located in remote areas. In addition, the software can serve as a teaching aid for junior doctors.

However, this study has several limitations. First, multiple CNNs with different structures are integrated into the architecture; although ensemble learning significantly improves accuracy, it requires more computing resources than a single CNN model and is therefore somewhat less cost-effective. Second, our model is based solely on slit-illumination images, which may be insufficient to identify lens opacity in some situations; combining electronic medical records and other optical images may provide valuable supplements for a comprehensive assessment of lens opacity. Third, the robustness and stability of our method need to be verified before it is generalized to other medical situations. Despite these limitations, this study provides a practical strategy for heterogeneous lens opacity diagnosis, with promising performance validated on multi-source datasets. Further studies integrating electronic medical records and more optical images will pave the way for the wide clinical application of our work.

Conclusions

In this paper, we proposed a feasible, automated CCNN-Ensemble method for the effective diagnosis of pediatric cataracts from heterogeneous slit-illumination images. We integrated three deep CNNs and a cost-sensitive technique into an ensemble learning method that identifies the severity of lens opacity on three grading indices. The experimental results and comparative analyses verified that the proposed method is superior to conventional methods, and its performance on two external datasets indicated good robustness and generalizability. Finally, cloud-based automatic diagnostic software was produced for use by both patients and ophthalmologists. This research could provide a helpful reference for the analysis of other medical images and help promote the use of artificial intelligence techniques in clinical applications.

Acknowledgments

Funding: This study was funded by the National Key R&D Program of China (2018YFC0116500), the National Natural Science Foundation of China (81770967), the National Natural Science Fund for Distinguished Young Scholars (81822010), the Science and Technology Planning Projects of Guangdong Province (2018B010109008, 2019B030316012), and the Fundamental Research Funds for the Central Universities (JBX180704). The sponsor or funding organization had no role in the design or conduct of this research.

Footnote

Reporting Checklist: The authors have completed the STARD reporting checklist. Available at http://dx.doi.org/10.21037/atm-20-6635

Data Sharing Statement: Available at http://dx.doi.org/10.21037/atm-20-6635

Peer Review File: Available at http://dx.doi.org/10.21037/atm-20-6635

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at http://dx.doi.org/10.21037/atm-20-6635). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). The study was approved by the Institutional Review Board of Zhongshan Ophthalmic Center of Sun Yat-sen University (No.: 2017KYPJ096) and written informed consent was obtained from all the study participants’ parents or legal guardians.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Bernardes R, Serranho P, Lobo C. Digital ocular fundus imaging: a review. Ophthalmologica 2011;226:161-81.

- Ng EY, Acharya UR, Suri JS, et al. Image Analysis and Modeling in Ophthalmology. CRC Press, 2014.

- Zhang Z, Srivastava R, Liu H, et al. A survey on computer aided diagnosis for ocular diseases. BMC Med Inform Decis Mak 2014;14:80.

- Ting DSW, Pasquale LR, Peng L, et al. Artificial intelligence and deep learning in ophthalmology. Br J Ophthalmol 2019;103:167-75.

- Armstrong GW, Lorch AC. A(eye): A Review of Current Applications of Artificial Intelligence and Machine Learning in Ophthalmology. Int Ophthalmol Clin 2020;60:57-71.

- Hogarty DT, Mackey DA, Hewitt AW. Current state and future prospects of artificial intelligence in ophthalmology: a review. Clin Exp Ophthalmol 2019;47:128-39.

- Long E, Lin H, Liu Z, et al. An artificial intelligence platform for the multihospital collaborative management of congenital cataracts. Nat Biomed Eng 2017;1:0024.

- Wang L, Zhang K, Liu X, et al. Comparative analysis of image classification methods for automatic diagnosis of ophthalmic images. Sci Rep 2017;7:41545.

- Liu X, Jiang J, Zhang K, et al. Localization and diagnosis framework for pediatric cataracts based on slit-lamp images using deep features of a convolutional neural network. PLoS One 2017;12:e0168606.

- Klein BEK, Klein R, Linton KLP, et al. Assessment of cataracts from photographs in the Beaver Dam Eye Study. Ophthalmology 1990;97:1428-33.

- Reid JE, Eaton E. Artificial intelligence for pediatric ophthalmology. Curr Opin Ophthalmol 2019;30:337-46.

- Lin H, Li R, Liu Z, et al. Diagnostic efficacy and therapeutic decision-making capacity of an artificial intelligence platform for childhood cataracts in eye clinics: a multicentre randomized controlled trial. EClinicalMedicine 2019;9:52-9.

- Chylack LT Jr, Wolfe JK, Singer DM, et al. The Lens Opacities Classification System III. The Longitudinal Study of Cataract Study Group. Arch Ophthalmol 1993;111:831-6.

- Kumar S, Yogesan K, Constable I. Telemedical diagnosis of anterior segment eye diseases: validation of digital slit-lamp still images. Eye 2009;23:652-60.

- Kolhe S, Guru MSK. Cataract Classification and Grading: A Survey. Int J Innov Res Comput Commun Eng 2015;3:10749-55.

- Li H, Lim JH, Liu J, et al. A Computer-Aided Diagnosis System of Nuclear Cataract. IEEE Trans Biomed Eng 2010;57:1690-8.

- Gao X, Lin S, Wong TY. Automatic feature learning to grade nuclear cataracts based on deep learning. IEEE Trans Biomed Eng 2015;62:2693-701.

- Amaya L, Taylor D, Russell-Eggitt I, et al. The morphology and natural history of childhood cataracts. Surv Ophthalmol 2003;48:125-44.

- Wu X, Long E, Lin H, et al. Prevalence and epidemiological characteristics of congenital cataract: a systematic review and meta-analysis. Sci Rep 2016;6:28564.

- Medsinge A, Nischal KK. Pediatric cataract: challenges and future directions. Clin Ophthalmol 2015;9:77.

- Lenhart PD, Courtright P, Wilson ME, et al. Global challenges in the management of congenital cataract: proceedings of the 4th International Congenital Cataract Symposium held on March 7, 2014, New York, New York. J AAPOS 2015;19:e1-8.

- Ellis FJ. Management of pediatric cataract and lens opacities. Curr Opin Ophthalmol 2002;13:33-7.

- Wilson ME, Trivedi RH, Pandey SK. Pediatric cataract surgery: techniques, complications, and management. Lippincott Williams & Wilkins, 2005.

- Lin H, Long E, Chen W, et al. Documenting rare disease data in China. Science 2015;349:1064.

- Chen W, Long E, Chen J, et al. Timing and approaches in congenital cataract surgery: a randomised controlled trial. Lancet 2016;388:S52.

- Krizhevsky A, Sutskever I, Hinton GE. ImageNet Classification with Deep Convolutional Neural Networks. Adv Neural Inf Process Syst 2012;25:1097-105.

- Szegedy C, Liu W, Jia Y, et al. Going deeper with convolutions. 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA: 2015;1-9.

- He K, Zhang X, Ren S, et al. Deep residual learning for image recognition. arXiv preprint arXiv:1512.03385 2015.

- Kumar A, Kim J, Lyndon D, et al. An ensemble of fine-tuned convolutional neural networks for medical image classification. IEEE J Biomed Health Inform 2017;21:31-40.

- Rasti R, Teshnehlab M, Phung SL. Breast cancer diagnosis in DCE-MRI using mixture ensemble of convolutional neural networks. Pattern Recognition 2017;72:381-90.

- Bermejo-Peláez D, Ash SY, Washko GR, et al. Classification of interstitial lung abnormality patterns with an ensemble of deep convolutional neural networks. Sci Rep 2020;10:338.

- Chen H, Dou Q, Wang X, et al., editors. Mitosis detection in breast cancer histology images via deep cascaded networks. Thirtieth AAAI conference on artificial intelligence; 2016.

- Ali S, Majid A, Javed SG, et al. Can-CSC-GBE: Developing cost-sensitive classifier with gentleboost ensemble for breast cancer classification using protein amino acids and imbalanced data. Comput Biol Med 2016;73:38-46.

- Zhou ZH, Liu XY. Training cost-sensitive neural networks with methods addressing the class imbalance problem. IEEE Trans Knowl Data Eng 2006;18:63-77.

- Daugman J. New methods in iris recognition. IEEE Trans Syst Man Cybern B Cybern 2007;37:1167-75.

- Masek L. Recognition of human iris patterns for biometric identification. The University of Western Australia, 2003.

- Yang JJ, Li J, Shen R, et al. Exploiting ensemble learning for automatic cataract detection and grading. Comput Methods Programs Biomed 2016;124:45-57.

- Guo L, Yang J-J, Peng L, et al. A computer-aided healthcare system for cataract classification and grading based on fundus image analysis. Comput Ind 2015;69:72-80.

- Huang W, Chan KL, Li H, et al. A Computer Assisted Method for Nuclear Cataract Grading From Slit-Lamp Images Using Ranking. IEEE Trans Med Imaging 2011;30:94-107.

- Tang Y, Zhang YQ, Chawla NV, et al. SVMs modeling for highly imbalanced classification. IEEE Trans Syst Man Cybern B Cybern 2009;39:281-8.

- Shin HC, Roth HR, Gao M, et al. Deep Convolutional Neural Networks for Computer-Aided Detection: CNN Architectures, Dataset Characteristics and Transfer Learning. IEEE Trans Med Imaging 2016;35:1285-98.

- Ravishankar H, Sudhakar P, Venkataramani R, et al. Understanding the Mechanisms of Deep Transfer Learning for Medical Images. arXiv preprint arXiv:1704.06040.

- Cireşan D, Meier U, Masci J, et al. Multi-column deep neural network for traffic sign classification. Neural Netw 2012;32:333-8.

- Krawczyk B, Schaefer G, Woźniak M. A hybrid cost-sensitive ensemble for imbalanced breast thermogram classification. Artif Intell Med 2015;65:219-27.

- Bottou L. Large-scale machine learning with stochastic gradient descent. In: Lechevallier Y, Saporta G, editors. Proceedings of COMPSTAT'2010. Physica-Verlag HD. doi: 10.1007/978-3-7908-2604-3_16.

- Selvaraju RR, Cogswell M, Das A, et al. editors. Grad-CAM: Visual explanations from deep networks via gradient-based localization. Proceedings of the IEEE international conference on computer vision, 2017.

- Jia Y, Shelhamer E, Donahue J, et al. Caffe: convolutional architecture for fast feature embedding. arXiv preprint arXiv:1408.5093 2014.

- Kohavi R. A study of cross-validation and bootstrap for accuracy estimation and model selection. International Joint Conference on Artificial Intelligence 1995;14:1137-45.