Application of artificial intelligence in gastrointestinal disease: a narrative review

Introduction

In the 1950s, the concept of artificial intelligence (AI) was first proposed at the Dartmouth Conference, with the aim of creating complex machines that simulate the cognitive traits of the working human brain (1). AI refers to the use of artificial methods and technologies to imitate, extend, and expand human intelligence and so achieve a form of “machine thinking”. After nearly 70 years of development, AI is now widely used in many fields, such as health care, finance, and education, where it has made certain operations more convenient and rational; this is especially true in the medical industry.

In gastroenterology services, reviewing large numbers of endoscopic images can overwork physicians, which indirectly reduces diagnostic accuracy and the efficiency of decision making. To offload tedious work so that physicians can focus on more complex tasks, the demand for AI-assisted tools in clinical practice is rising. Researchers have developed AI methods that automatically segment lesions of interest in endoscopic images, which are of value for the diagnosis, treatment, and prognosis of gastrointestinal diseases. At present, the application of AI in gastrointestinal diseases is still at an early stage, and the acquisition, cleaning, and standardization of data remain major obstacles to its development. Moreover, how quickly AI can be applied to gastrointestinal diseases depends both on the performance of intelligent systems in clinical practice and on how well clinical staff understand and accept AI.

In this review, we introduce the classification of AI techniques and then review AI applications in gastroenterology from two perspectives: the application of AI to different types of endoscopes, and the application of AI to various gastrointestinal diseases. Finally, we discuss the challenges and future directions of AI applications in gastrointestinal diseases.

We present the following article in accordance with the Narrative Review reporting checklist (available at https://dx.doi.org/10.21037/atm-21-3001).

Methods

We searched the PubMed electronic database for English-language literature published from 2000 to 2020. Search keywords included “AI”, “machine learning (ML)”, “deep learning (DL)”, “convolutional neural network (CNN)”, “endoscopy”, “white light endoscopy (WLE)”, “narrow band imaging (NBI) endoscopy”, “magnifying endoscopy with narrow band imaging (ME-NBI)”, “chromoendoscopy”, “endocytoscopy (EC)”, and “capsule endoscopy (CE)”. The search results were manually reviewed to identify studies involving AI applications in the gastrointestinal field.

AI

With improvements in computing and contributions from other disciplines, AI has advanced remarkably and has emerged as a field in its own right. ML, one of the core topics in AI, became a principal way of implementing AI from the 1980s onward. Through continued exploration and refinement, DL has grown out of ML as a new subbranch. Compared with conventional ML, DL uses a more complex, multilayer feature extraction process.

ML

Over the last 40 years, ML has developed into an interdisciplinary field of study that draws on statistics, probability theory, and other disciplines. ML is a form of automated analysis that learns from data: over many iterations, it refines its existing model to improve performance on the task at hand. According to the learning method, ML can be roughly divided into three types: supervised learning, unsupervised learning, and reinforcement learning. Supervised learning trains algorithms on labeled data; unsupervised learning discovers new patterns in unlabeled data; and reinforcement learning lets the machine complete the target task gradually through autonomous, continuous self-optimization. Unlike supervised and unsupervised learning, reinforcement learning does not require a dataset to be provided in advance; instead, it gathers experience by interacting with its environment and must balance exploration (trying new actions) against exploitation (repeating actions known to work well) (2).
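To make the exploration-exploitation trade-off concrete, the following minimal Python sketch implements an epsilon-greedy agent for a multi-armed bandit, one of the simplest reinforcement learning settings. It is an illustration under stated assumptions, not a method from the reviewed studies: the reward probabilities, the exploration rate `epsilon`, and the function name `epsilon_greedy_bandit` are hypothetical. The agent starts with no data and learns only from the rewards its own actions produce.

```python
import random


def epsilon_greedy_bandit(true_probs, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent for a Bernoulli multi-armed bandit (illustrative).

    true_probs: hypothetical reward probability of each action; the agent
    never sees these values, only the 0/1 rewards it samples.
    """
    rng = random.Random(seed)
    n_actions = len(true_probs)
    counts = [0] * n_actions    # how often each action has been tried
    values = [0.0] * n_actions  # running mean reward of each action
    total_reward = 0
    for _ in range(steps):
        if rng.random() < epsilon:
            # Exploration: try a random action to gather new information.
            action = rng.randrange(n_actions)
        else:
            # Exploitation: choose the action with the best current estimate.
            action = max(range(n_actions), key=lambda a: values[a])
        reward = 1 if rng.random() < true_probs[action] else 0
        counts[action] += 1
        # Incremental sample-mean update for the chosen action.
        values[action] += (reward - values[action]) / counts[action]
        total_reward += reward
    return values, counts, total_reward


values, counts, total = epsilon_greedy_bandit([0.2, 0.5, 0.8])
best_action = max(range(3), key=lambda a: values[a])
print("best action:", best_action, "counts:", counts)
```

With these settings the agent should end up favoring the action with the highest true reward probability, while the roughly 10% of exploratory steps keep sampling the other actions.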

Various ML algorithms, including decision trees, support vector machines, and regression, have been used in medical research. A decision tree is a flowchart-like structure that is usually built to aid decision making; for example, a decision tree-based guide to preventive measures has proven considerably valuable for protecting the safety of health care workers (3). The support vector machine algorithm is well suited to binary classification. Mori et al. used it to build a computer-aided diagnosis (CAD) system for the real-time identification of diminutive polyps as either neoplastic or non-neoplastic (4). Regression is generally used to model the relationship between variables and has been used to construct a model for predicting preoperative lymph node metastasis in colorectal cancer (5).
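As a minimal sketch of how a regression model can serve as a binary prediction model (for example, lymph node metastasis present or absent), the following Python code fits a logistic regression by batch gradient descent. The two input features and the toy dataset are hypothetical stand-ins for clinical or imaging variables; this is not the model used in (5).

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train_logistic_regression(X, y, lr=0.5, epochs=2000):
    """Fit weights w and bias b by batch gradient descent on the log-loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            error = p - yi  # gradient of the log-loss w.r.t. the linear score
            for j in range(d):
                grad_w[j] += error * xi[j]
            grad_b += error
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_proba(w, b, x):
    """Predicted probability of the positive class (e.g., metastasis present)."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Hypothetical standardized features (e.g., two imaging scores) and 0/1 outcomes.
X = [[-2.0, -1.0], [-1.5, -0.5], [-1.0, -1.5], [1.0, 1.5], [1.5, 0.5], [2.0, 1.0]]
y = [0, 0, 0, 1, 1, 1]
w, b = train_logistic_regression(X, y)
print([round(predict_proba(w, b, x), 3) for x in X])
```

Logistic regression is chosen here because its output is directly interpretable as a probability; a support vector machine would instead return a signed distance from the decision boundary.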

DL

On large datasets, DL generally outperforms conventional ML because it learns features automatically from the data instead of relying on specific, hand-designed preprocessing procedures. In addition, the basic ideas and techniques of DL transfer readily between fields, which eases later application. However, with small volumes of data, traditional ML is often better able to achieve excellent performance. DL is based on neural networks whose architecture contains multiple hidden layers, each of which further refines the output of the previous layer (6). Neural networks are typically trained with supervised or unsupervised learning: a CNN, for example, is usually trained with the former, and a generative adversarial network with the latter.
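The layered feature extraction described above can be illustrated with the three operations that a typical CNN layer stacks: convolution, a ReLU nonlinearity, and max pooling. The pure-Python sketch below applies a hand-picked 2x2 vertical-edge kernel to a tiny hypothetical image; in a real CNN the kernel weights are learned from data, and many such layers are stacked so that later layers refine the features produced by earlier ones.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution as used in CNNs (technically cross-correlation:
    the kernel is not flipped)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            s = 0.0
            for di in range(kh):
                for dj in range(kw):
                    s += image[i + di][j + dj] * kernel[di][dj]
            row.append(s)
        out.append(row)
    return out


def relu(feature_map):
    # Keep positive responses, zero out negative ones.
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool_2x2(feature_map):
    # Keep the strongest response in each non-overlapping 2x2 window.
    return [
        [max(feature_map[i][j], feature_map[i][j + 1],
             feature_map[i + 1][j], feature_map[i + 1][j + 1])
         for j in range(0, len(feature_map[0]) - 1, 2)]
        for i in range(0, len(feature_map) - 1, 2)
    ]


# Hypothetical 5x5 grayscale patch: dark on the left, bright on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
vertical_edge = [[-1, 1], [-1, 1]]  # hand-picked; a CNN would learn this
features = max_pool_2x2(relu(conv2d(image, vertical_edge)))
print(features)  # [[2.0, 0.0], [2.0, 0.0]]
```

The pooled map responds only where the dark-to-bright edge lies, which is the kind of low-level feature that deeper layers combine into lesion-level patterns.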

Types of gastrointestinal endoscopy

AI-based analysis of endoscopic images is one of the most promising applications of AI in medicine. An endoscope is an illuminated optical instrument used to examine the inner structures of the human body through natural orifices or surgical incisions, and it can help determine whether local biopsy or treatment is needed. It mainly consists of a light source, a lens, and a tube. Because it is minimally invasive, endoscopy has become an important diagnostic tool for early gastrointestinal neoplasms. Six types of endoscopy are commonly used (Figure 1): WLE, NBI endoscopy, ME-NBI, chromoendoscopy, EC, and CE.

WLE

WLE is the preferred endoscopic technique for screening for gastrointestinal diseases because of its low cost and speed of examination. However, it has limited sensitivity for small precursor lesions.

Bossuyt et al. collected WLE images from 35 participants (patients with ulcerative colitis and healthy controls) to develop an AI system that uses a red density algorithm to reflect disease activity (8). The method automatically constructs a red density map of each endoscopic image from the red channel of its red-green-blue (RGB) pixel values. Disease activity is expressed as a final disease activity score, which was closely related to the histological remission score (8).
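The idea of turning pixel colors into a redness map can be sketched as follows. This is a hypothetical simplification, not the published red density algorithm of Bossuyt et al. (8): here each pixel's red density is simply assumed to be its share of red intensity, R / (R + G + B), and the image-level score is the mean over all pixels.

```python
def red_density_map(pixels):
    """Per-pixel red share R / (R + G + B) for a 2D grid of (R, G, B) tuples.

    Hypothetical simplification; not the published red density algorithm.
    """
    density = []
    for row in pixels:
        out_row = []
        for r, g, b in row:
            total = r + g + b
            out_row.append(r / total if total else 0.0)  # black pixel -> 0
        density.append(out_row)
    return density


def mean_red_density(pixels):
    """Image-level score: mean red share over all pixels."""
    values = [v for row in red_density_map(pixels) for v in row]
    return sum(values) / len(values)


# Hypothetical 1x2 RGB patches (0-255 values).
inflamed = [[(200, 50, 50), (180, 60, 60)]]
normal = [[(150, 100, 100), (140, 110, 100)]]
print(round(mean_red_density(inflamed), 3), round(mean_red_density(normal), 3))
```

A normalized ratio rather than the raw red value is used so that overall brightness differences between frames affect the score less.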

Invasion depth is an important risk factor for lymph node metastasis of gastrointestinal tumors and affects the choice of therapy. In a retrospective study, Cho et al. established a supervised CNN model (combining the Inception-ResNet-v2 and DenseNet161 models) to classify gastric neoplasms into two classes by invasion depth (mucosa-confined versus submucosa-invaded), achieving an area under the curve (AUC) of 0.887 in both internal and external tests (9).
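The AUC reported in these studies can be computed directly from model scores. The sketch below uses the rank (Mann-Whitney) interpretation of the AUC: the probability that a randomly chosen positive case (here, a hypothetical submucosa-invaded lesion) receives a higher score than a randomly chosen negative case, with ties counted as one half. The scores and labels are hypothetical.

```python
def auc(scores, labels):
    """AUC as the probability that a random positive case outscores a random
    negative case (ties count as 1/2). O(n_pos * n_neg), fine for illustration."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical model scores; label 1 = submucosa-invaded, 0 = mucosa-confined.
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3]
labels = [1, 1, 0, 1, 0, 0]
print(round(auc(scores, labels), 3))  # 0.889
```

The pairwise loop makes the definition explicit; for large test sets a sort-based rank formula gives the same value more efficiently.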

NBI endoscopy

Whereas WLE uses white light, NBI endoscopy narrows the visible light spectrum with a wavelength filter, retaining only blue (415 nm) and green (540 nm) light. Because these wavelengths match the absorption spectrum of hemoglobin, NBI endoscopy enhances the visibility of microvascular morphology and mucosal surface structures. This aids the diagnosis of mucosal surface lesions and better defines their extent and boundaries (10,11).

Mori et al. prospectively developed a CAD-NBI model using ML algorithms to detect diminutive polyps and predict their pathology (neoplastic or nonneoplastic) (4). The negative predictive values for diminutive rectosigmoid adenomas in the worst-case and best-case analyses were 95.20% and 96.50%, respectively. The CAD-NBI model also proved more time efficient than models based on chromoendoscopy. Its strong performance benefited from the ability of NBI to show the microstructures and capillaries of the mucosal epithelium, and it is a step towards automatic pathological prediction during endoscopy.

Adenomas are the precursors of most colorectal malignancies, and their endoscopic resection contributes to reducing the incidence and mortality of colorectal cancer (12). The detection and classification of polyps are therefore crucial for treatment and prognosis. A recent study reported satisfactory performance of a deep neural network CAD (DNN-CAD) model in diagnosing polyps on NBI images (13). The authors analyzed 2,441 images and achieved an accuracy of 90.10%, a sensitivity of 96.30%, and a specificity of 78.10% in distinguishing neoplastic from hyperplastic polyps smaller than 5 mm.
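Accuracy, sensitivity, specificity, and the predictive values quoted throughout this review all derive from the same 2x2 confusion matrix. The following sketch shows the standard definitions; the counts in the usage example are hypothetical and do not come from (4) or (13).

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Standard diagnostic accuracy measures from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),  # true positive rate
        "specificity": tn / (tn + fp),  # true negative rate
        "ppv": tp / (tp + fp),          # positive predictive value
        "npv": tn / (tn + fn),          # negative predictive value
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }


# Hypothetical counts for a neoplastic (positive) vs non-neoplastic polyp classifier.
m = diagnostic_metrics(tp=90, fp=20, tn=80, fn=10)
print({k: round(v, 3) for k, v in m.items()})
```

Because sensitivity and specificity trade off against each other as the decision threshold moves, a model can combine high sensitivity with modest specificity, as in the study above.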

ME-NBI

ME-NBI is a hybrid technique combining NBI and magnifying endoscopy that allows detailed observation of the mucosal capillaries. However, the rate of missed diagnoses remains appreciable.

One endoscopic characteristic of early squamous cell neoplasia is the morphology of intrapapillary capillary loops (IPCLs), which is related to invasion depth (14). A supervised CNN system trained on 7,046 ME-NBI images from 17 patients classified IPCLs into normal or abnormal patterns with an accuracy of 93.30% (15). Another CNN system, based on the GoogLeNet architecture and trained on 2,828 ME-NBI images, was used to differentiate early gastric cancer from gastritis (16).

Chromoendoscopy

Chromoendoscopy applies pigment dye to the mucosa during endoscopy to enhance the color contrast between lesions and normal mucosa. Its positive screening rate is significantly higher than that of conventional endoscopy, particularly for flat and depressed lesions that are easily missed by conventional endoscopy (17).

To detect gastric cancer automatically, Hirasawa et al. trained a CNN model on 13,584 images of gastric cancer and validated it on an independent test set of 2,296 stomach images, obtaining a sensitivity of 92.20% for diagnosing gastric cancer (18). Ikenoyama et al. compared the performance of a CNN model with that of endoscopists in detecting early gastric cancer (19); the CNN model proved faster and more sensitive than the endoscopists.

EC

EC is a type of optical microscopic endoscopy that can rapidly magnify objects 100 to 1,000 times. Combined with in vivo staining agents that increase the cellular contrast of the mucosa, it allows the cellular structure of the superficial digestive tract mucosa to be observed in real time. EC helps to characterize lesions, improves diagnostic accuracy, and can reduce the number of biopsies.

To distinguish between nonmalignant lesions and esophageal squamous cell carcinoma, Kumagai et al. developed an AI model based on the GoogLeNet architecture using 6,235 EC images (20), achieving 90.90% accuracy, 92.60% sensitivity, and 89.30% specificity. However, this study used EC images at two optical magnifications (×400 and ×500), which might have reduced the diagnostic performance of the AI model.

CE

CE uses a small capsule that mainly consists of a video camera, a flash lamp, a radio transmitter, and a battery. After the capsule is swallowed, it is propelled through the digestive tract by gastrointestinal motility and records images along the way. Provided that bowel preparation is effective, CE allows the inner surface of the bowel to be viewed directly.

An AI model based on the Single Shot MultiBox Detector algorithm was developed to detect small-bowel angioectasia using 12,725 CE images (21). The model had an AUC of 0.998, a sensitivity of 98.80%, a specificity of 98.40%, a positive predictive value of 75.40%, and a negative predictive value of 99.90%. CE images have also been used with CNN algorithms to identify colon cancers and polyps automatically (22,23).
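The gap between the very high sensitivity and specificity and the much lower positive predictive value is what one would expect when positive findings are rare among the images tested. The sketch below applies Bayes' rule to show this effect, reusing the sensitivity (0.988) and specificity (0.984) above; the prevalence values are hypothetical, since the proportion of angioectasia images in the test set of (21) is not given here.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """PPV and NPV from sensitivity, specificity, and prevalence (Bayes' rule)."""
    tp = sensitivity * prevalence
    fn = (1 - sensitivity) * prevalence
    tn = specificity * (1 - prevalence)
    fp = (1 - specificity) * (1 - prevalence)
    return tp / (tp + fp), tn / (tn + fn)


for prevalence in (0.05, 0.5):  # hypothetical proportions of positive images
    ppv, npv = predictive_values(0.988, 0.984, prevalence)
    print(f"prevalence={prevalence:.2f}  PPV={ppv:.3f}  NPV={npv:.4f}")
```

With an assumed prevalence of 5%, the PPV falls to roughly 0.76 while the NPV stays above 0.999; at 50% prevalence the PPV would be about 0.98.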

Application of AI in gastrointestinal diseases

Grouped by the common sites of gastrointestinal disease, AI applications in gastroenterological endoscopy fall into three categories: upper gastrointestinal diseases, small intestinal diseases, and large intestinal diseases.

Upper gastrointestinal diseases

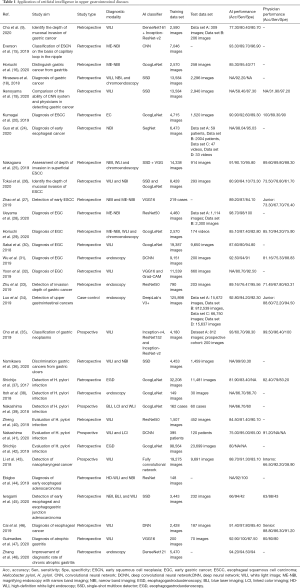

AI applications in endoscopy of upper digestive tract diseases are shown in Table 1 and include detection of esophageal and gastric cancer, prediction of the invasion depth of cancer, distinction of cancers from other diseases, and detection of Helicobacter pylori infection.

To enable early detection of esophageal squamous cell carcinoma, Guo et al. established a CAD system based on the SegNet architecture that was trained on 6,473 NBI images and validated with image and video datasets (24). The system achieved an AUC of 0.989, a sensitivity of 98%, and a specificity of 95%. By comparison, other CAD systems for detecting esophageal squamous cell carcinoma had slightly lower sensitivity owing to their smaller data volumes (15,20,25-27). Some of these studies compared the performance of physicians and intelligent systems and suggested that the detection capacity of CAD systems can reach the level of a junior physician (26,27). For gastric cancer detection, a CNN model trained on 7,874 ME-NBI images from a single center achieved an accuracy of 98.70%, a sensitivity of 98%, and a specificity of 100% (28). In a study comparing a CAD system with physicians in detecting early gastric cancer, the CAD system, based on the GoogLeNet architecture, obtained an AUC of 0.868, an accuracy of 85.10%, a sensitivity of 87.40%, and a specificity of 82.80% (29). Sakai et al. used 29,037 images to detect early gastric cancer with an accuracy of 87.60% (30), and Wu et al. collected 9,151 images to train a deep CNN model for detecting early gastric cancer, achieving an accuracy of 92.50% (31).

The invasion depth of cancer is crucial for selecting patients with gastric cancer for endoscopic resection. Many studies have used ML to determine the invasion depth of gastric and esophageal cancer (9,18,25,26,32). Zhu et al. published a CNN-CAD system based on the ResNet50 architecture to determine the invasion depth of gastric cancer; it achieved an AUC of 0.940 and an accuracy, sensitivity, and specificity of 89.16%, 76.47%, and 95.56%, respectively (33), and appeared capable of outperforming endoscopists. Yoon et al. constructed a novel CNN diagnostic system based on the VGG16 architecture, whose best performance in determining the invasion depth of gastric cancer reached an AUC of 0.851 (32). Hirasawa et al. used their CNN system to identify the invasion depth and tumor size of gastric cancer (18). In addition, Luo et al. created the Gastrointestinal Artificial Intelligence Diagnostic System (GRAIDS) based on the DeepLabv3+ algorithm, a binary classification model for real-time detection of upper gastrointestinal cancers that was trained on 1,036,496 endoscopy images from six centers (34). The diagnostic accuracy of GRAIDS was 97.70% in the five external validation sets. Cho et al. established a five-category CNN model to classify gastric lesions as advanced gastric cancer, early gastric cancer, high-grade dysplasia, low-grade dysplasia, or non-neoplasm (35). The model was developed and validated on 5,017 WLE images using five-fold cross-validation. Two other studies focused on distinguishing gastric cancer from gastritis (16) and from gastric ulcers (36).
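Five-fold cross-validation, as used by Cho et al. (35), partitions the data into five folds and trains five models, each validated on a different held-out fold. The following sketch generates the index splits; the function name `k_fold_indices` and the shuffling seed are illustrative assumptions, and the image count simply reuses the 5,017 figure above.

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs; each sample is validated exactly once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Spread the remainder so fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size


folds = list(k_fold_indices(5017, k=5))
print([len(val) for _, val in folds])  # [1004, 1004, 1003, 1003, 1003]
```

In practice, images from the same patient should be kept in the same fold (a grouped split); otherwise near-duplicate frames can leak between training and validation and inflate performance. This sketch splits individual images and does not do that.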

Helicobacter pylori infection is associated with the incidence of gastric cancer. Therefore, many studies have used ML algorithms to build models for diagnosing Helicobacter pylori infection, with the early models mostly performing binary classification (37-40). A retrospective study used 179 images to develop a model for detecting Helicobacter pylori infection, which yielded an AUC of 0.956, a sensitivity of 86.70%, and a specificity of 86.70% (38). Other studies examined three-category models that discriminate among uninfected, currently infected, and post-eradication status (41,42).
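The difference between binary and three-category models lies mainly in the output layer. A three-category classifier typically converts three raw scores (logits) into probabilities with a softmax function and reports the most probable class. The sketch below illustrates this step only; the logits and the function name `classify_hp_status` are hypothetical and are not taken from (41) or (42).

```python
import math

CLASSES = ("uninfected", "currently infected", "post-eradication")


def softmax(logits):
    """Convert raw scores to probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify_hp_status(logits):
    """Return the most probable class and all class probabilities."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return CLASSES[best], probs


label, probs = classify_hp_status([0.2, 2.5, 1.0])  # hypothetical logits
print(label, [round(p, 3) for p in probs])
```

A binary model is the special case with two classes, where the softmax reduces to the logistic (sigmoid) function of the score difference.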

Other diseases, including nasopharyngeal cancer (one study) (43), esophageal cancer (three studies) (44-46), and atrophic gastritis (two studies) (47,48) have been diagnosed using ML algorithms.

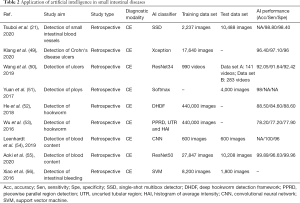

Small intestinal diseases

AI applications in small intestinal diseases are based on CE images or videos (Table 2). For ulcer detection, Klang et al. created a CNN model that detected small-bowel ulcers in patients with Crohn’s disease, based on 17,640 images (49); the model obtained an AUC of 0.990 on randomly split images. To develop a readily transferable diagnostic model for ulcers, a retrospective study used 1,416 videos to develop and validate a model with favorable performance (AUC = 0.973) (50). A CAD system based on a stacked sparse autoencoder with an image manifold constraint was proposed to recognize polyps and achieved an accuracy of 98% (51). He et al. developed an AI system to identify hookworm infection using 440K CE images (52,53), and another study using a CNN algorithm to detect angioectasia achieved a sensitivity of 100% and a specificity of 96% (54). AI has also been used to detect bleeding (55,56) and Crohn’s disease (49).

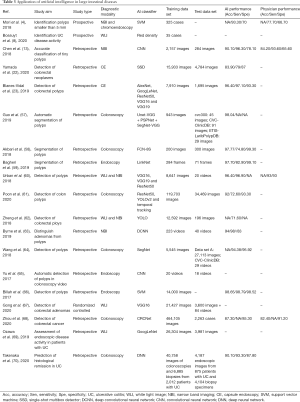

Large intestinal diseases

Table 3 summarizes the studies that have used AI to assist in the diagnosis of large intestinal diseases. Most focus on polyps, covering their identification, localization, and segmentation. Three studies of polyp segmentation showed high accuracy (57-59), whereas among the four studies of polyp localization (23,60-62), the data differed greatly between training and test sets, leading to variable model performance. Nevertheless, most models have achieved accuracies greater than 85% (13,63-66). In a randomized controlled study, a system was constructed to improve the adenoma detection rate (67). Furthermore, Zhou et al. developed a DL model for diagnosing colorectal cancer based on colonoscopy images from 14,442 patients (68), achieving image-level AUCs of 0.990, 0.991, and 0.997 in three test sets. Finally, AI has also been used to assess disease activity in ulcerative colitis (8,69,70).
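Polyp segmentation is often evaluated with overlap metrics in addition to pixel accuracy. The sketch below computes the Dice coefficient and intersection over union (IoU) for binary masks; whether the studies cited above used these particular metrics is not stated here, and the masks in the example are hypothetical.

```python
def dice_and_iou(pred, truth):
    """Dice coefficient and IoU for two equal-sized 2D masks of 0/1 labels."""
    inter = pred_sum = truth_sum = 0
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            inter += p * t      # pixel labeled polyp in both masks
            pred_sum += p
            truth_sum += t
    if pred_sum + truth_sum == 0:
        return 1.0, 1.0  # both masks empty: treat as perfect agreement
    union = pred_sum + truth_sum - inter
    return 2 * inter / (pred_sum + truth_sum), inter / union


# Hypothetical 3x3 masks (1 = polyp pixel).
truth = [[0, 1, 1], [0, 1, 1], [0, 0, 0]]
pred = [[0, 1, 1], [0, 1, 0], [0, 1, 0]]
dice, iou = dice_and_iou(pred, truth)
print(round(dice, 3), round(iou, 3))  # 0.75 0.6
```

Unlike pixel accuracy, these metrics ignore the (usually large) background, so a model that predicts no polyp at all cannot score well on them.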

Challenges and future directions

Several factors may limit the development of AI systems for diagnosing gastrointestinal diseases. Because current studies have small sample sizes, their models are prone to overfitting; increasing the number of training samples, for example through data augmentation, can alleviate this problem. It is also crucial to validate model accuracy on multiple external datasets. Specifically, multicenter diagnostic studies are needed, and video data are critical for accelerating model verification because they simulate clinical settings (34). Moreover, previous studies have covered a limited range of diseases, which weakens the generalizability of their findings. Training data should therefore better reflect real application scenarios so that AI models are better suited to clinical translation, because training on biased data considerably impairs a model’s generalization and applicability. In addition, prospective studies are needed to compare AI systems, physicians, and physicians aided by AI, which may clarify the clinical role of AI systems. Currently, model development relies largely on manual preprocessing and labeling, which is extremely time-consuming and hinders technical progress.
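One common way to enlarge a small image training set is data augmentation. The sketch below generates the eight rotations and mirror images of an image, assuming that lesion appearance does not depend on orientation; this is an illustrative assumption, the function names are hypothetical, and augmentation does not replace validation on independent external datasets.

```python
def rotate90(img):
    """Rotate a 2D grid 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def hflip(img):
    """Mirror a 2D grid left to right."""
    return [list(row[::-1]) for row in img]


def dihedral_augment(img):
    """Return the 8 rotated/mirrored versions of img (original included)."""
    variants = []
    current = [list(row) for row in img]
    for _ in range(4):
        variants.append(current)
        variants.append(hflip(current))
        current = rotate90(current)
    return variants


patch = [[1, 2, 3], [4, 5, 6]]  # hypothetical 2x3 image patch
augmented = dihedral_augment(patch)
print(len(augmented))  # 8
```

Augmented copies are strongly correlated with their originals, so they should be generated only within the training split, never before splitting off validation data.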

AI has been applied to most gastrointestinal diseases, but esophageal polyps, esophageal lipomas, gastric cysts, and a few other diseases remain conspicuous exceptions. In addition, because long-term follow-up is difficult, relatively few AI studies have focused on disease prognosis. Current AI models are usually based on a single type of image. With further technical improvement, however, it may become possible to create a cross-platform AI system that overcomes differences in image quality, manufacturer, and color, which would reduce the training burden and the cost of platform construction.
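As one hypothetical preprocessing step toward such a cross-platform system, color differences between devices could be reduced by normalizing each image's color balance. The sketch below implements the classic gray-world normalization, which rescales the red, green, and blue channels so that their means are equal; it is not drawn from the reviewed studies and would need validation on real multi-vendor data.

```python
def gray_world(pixels):
    """Gray-world color normalization for a 2D grid of (R, G, B) tuples.

    Each channel is scaled so that its mean equals the mean of all three
    channel means; values are clipped to 255.
    """
    flat = [px for row in pixels for px in row]
    n = len(flat)
    means = [sum(px[c] for px in flat) / n for c in range(3)]
    gray = sum(means) / 3
    gains = [gray / m if m else 1.0 for m in means]  # leave empty channels alone
    return [
        [tuple(min(255.0, px[c] * gains[c]) for c in range(3)) for px in row]
        for row in pixels
    ]


# Hypothetical image with a strong red cast from one device.
image = [[(200, 100, 50), (100, 50, 25)]]
balanced = gray_world(image)
print([[tuple(round(v, 1) for v in px) for px in row] for row in balanced])
```

Gray-world assumes the average scene color is neutral, which mucosal images (predominantly red) violate; a learned or reference-based normalization would likely be needed in practice.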

Conclusions

This brief overview of the status of AI applications in gastrointestinal diseases may help in solving clinical problems and in making further use of AI in the future. AI is widely used in endoscopy of the upper gastrointestinal tract, large intestine, and small bowel, and has helped to address several problems of missed and challenging diagnoses in clinical settings. Although AI may benefit patients during diagnosis and treatment, its use also increases operational complexity to a certain extent. Medical staff should therefore work patiently with AI during the early stages of its adoption.

Acknowledgments

Funding: This work was supported by the National Natural Science Foundation of China (No. 81501462 and 81902861); the 1·3·5 Project for Disciplines of Excellence-Clinical Research Incubation Project, West China Hospital, Sichuan University; the Sichuan Science and Technology Program (No. 2019YJ0116); the Chengdu International Science and Technology Cooperation Fund (No. 2019-GH02-00074-HZ); and the Functional and Molecular Imaging Key Laboratory of Sichuan Province (No. 2012JO0011).

Footnote

Reporting Checklist: The authors have completed the Narrative Review reporting checklist. Available at https://dx.doi.org/10.21037/atm-21-3001

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://dx.doi.org/10.21037/atm-21-3001). BS serves as an unpaid Associate Editor-in-Chief of Annals of Translational Medicine from Sept 2020 to Aug 2021. The other authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Turing AM I.. Computing machinery and intelligence. Mind 1950;LIX:433-60. [Crossref]

- Sutton RS, Barto AG. Reinforcement learning: An introduction. Cambridge: The MIT Press, 1998.

- Forrester JD, Nassar AK, Maggio PM, et al. Precautions for operating room team members during the COVID-19 pandemic. J Am Coll Surg 2020;230:1098-101. [Crossref] [PubMed]

- Mori Y, Kudo SE, Misawa M, et al. Real-time use of artificial intelligence in identification of diminutive polyps during colonoscopy: A prospective study. Ann Intern Med 2018;169:357-66. [Crossref] [PubMed]

- Huang YQ, Liang CH, He L, et al. Development and validation of a radiomics nomogram for preoperative prediction of lymph node metastasis in colorectal cancer. J Clin Oncol 2016;34:2157-64. [Crossref] [PubMed]

- LeCun Y, Bengio Y, Hinton G. Deep learning. Nature 2015;521:436-44. [Crossref] [PubMed]

- Nishiyama S, Oka S, Tanaka S, et al. Clinical usefulness of endocytoscopy in the remission stage of ulcerative colitis: A pilot study. J Gastroenterol 2015;50:1087-93. [Crossref] [PubMed]

- Bossuyt P, Nakase H, Vermeire S, et al. Automatic, computer-aided determination of endoscopic and histological inflammation in patients with mild to moderate ulcerative colitis based on red density. Gut 2020;69:1778-86. [Crossref] [PubMed]

- Cho BJ, Bang CS, Lee JJ, et al. Prediction of submucosal invasion for gastric neoplasms in endoscopic images using deep-learning. J Clin Med 2020;9:1858. [Crossref] [PubMed]

- Machida H, Sano Y, Hamamoto Y, et al. Narrow-band imaging in the diagnosis of colorectal mucosal lesions: A pilot study. Endoscopy 2004;36:1094-8. [Crossref] [PubMed]

- Gono K, Obi T, Yamaguchi M, et al. Appearance of enhanced tissue features in narrow-band endoscopic imaging. J Biomed Opt 2004;9:568-77. [Crossref] [PubMed]

- Winawer SJ, Zauber AG, Ho MN, et al. Prevention of colorectal cancer by colonoscopic polypectomy. The National Polyp Study Workgroup. N Engl J Med 1993;329:1977-81. [Crossref] [PubMed]

- Chen PJ, Lin MC, Lai MJ, et al. Accurate classification of diminutive colorectal polyps using computer-aided analysis. Gastroenterology 2018;154:568-75. [Crossref] [PubMed]

- Sato H, Inoue H, Ikeda H, et al. Utility of intrapapillary capillary loops seen on magnifying narrow-band imaging in estimating invasive depth of esophageal squamous cell carcinoma. Endoscopy 2015;47:122-8. [Crossref] [PubMed]

- Everson M, Herrera L, Li W, et al. Artificial intelligence for the real-time classification of intrapapillary capillary loop patterns in the endoscopic diagnosis of early oesophageal squamous cell carcinoma: A proof-of-concept study. United European Gastroenterol J 2019;7:297-306. [Crossref] [PubMed]

- Horiuchi Y, Aoyama K, Tokai Y, et al. Convolutional neural network for differentiating gastric cancer from gastritis using magnified endoscopy with narrow band imaging. Dig Dis Sci 2020;65:1355-63. [Crossref] [PubMed]

- Thorlacius H, Toth E. Role of chromoendoscopy in colon cancer surveillance in inflammatory bowel disease. Inflamm Bowel Dis 2007;13:911-7. [Crossref] [PubMed]

- Hirasawa T, Aoyama K, Tanimoto T, et al. Application of artificial intelligence using a convolutional neural network for detecting gastric cancer in endoscopic images. Gastric Cancer 2018;21:653-60. [Crossref] [PubMed]

- Ikenoyama Y, Hirasawa T, Ishioka M, et al. Detecting early gastric cancer: Comparison between the diagnostic ability of convolutional neural networks and endoscopists. Dig Endosc 2021;33:141-50. [Crossref] [PubMed]

- Kumagai Y, Takubo K, Kawada K, et al. Diagnosis using deep-learning artificial intelligence based on the endocytoscopic observation of the esophagus. Esophagus 2019;16:180-7. [Crossref] [PubMed]

- Tsuboi A, Oka S, Aoyama K, et al. Artificial intelligence using a convolutional neural network for automatic detection of small-bowel angioectasia in capsule endoscopy images. Dig Endosc 2020;32:382-90. [Crossref] [PubMed]

- Yamada A, Niikura R, Otani K, et al. Automatic detection of colorectal neoplasia in wireless colon capsule endoscopic images using a deep convolutional neural network. Endoscopy 2020; Epub ahead of print. [Crossref] [PubMed]

- Blanes-Vidal V, Baatrup G, Nadimi ES. Addressing priority challenges in the detection and assessment of colorectal polyps from capsule endoscopy and colonoscopy in colorectal cancer screening using machine learning. Acta Oncol 2019;58:S29-36. [Crossref] [PubMed]

- Guo L, Xiao X, Wu C, et al. Real-time automated diagnosis of precancerous lesions and early esophageal squamous cell carcinoma using a deep learning model (with videos). Gastrointest Endosc 2020;91:41-51. [Crossref] [PubMed]

- Nakagawa K, Ishihara R, Aoyama K, et al. Classification for invasion depth of esophageal squamous cell carcinoma using a deep neural network compared with experienced endoscopists. Gastrointest Endosc 2019;90:407-14. [Crossref] [PubMed]

- Tokai Y, Yoshio T, Aoyama K, et al. Application of artificial intelligence using convolutional neural networks in determining the invasion depth of esophageal squamous cell carcinoma. Esophagus 2020;17:250-6. [Crossref] [PubMed]

- Zhao YY, Xue DX, Wang YL, et al. Computer-assisted diagnosis of early esophageal squamous cell carcinoma using narrow-band imaging magnifying endoscopy. Endoscopy 2019;51:333-41. [Crossref] [PubMed]

- Ueyama H, Kato Y, Akazawa Y, et al. Application of artificial intelligence using a convolutional neural network for diagnosis of early gastric cancer based on magnifying endoscopy with narrow-band imaging. J Gastroenterol Hepatol 2021;36:482-9. [Crossref] [PubMed]

- Horiuchi Y, Hirasawa T, Ishizuka N, et al. Performance of a computer-aided diagnosis system in diagnosing early gastric cancer using magnifying endoscopy videos with narrow-band imaging (with videos). Gastrointest Endosc 2020;92:856-865.e1. [Crossref] [PubMed]

- Sakai Y, Takemoto S, Hori K, et al. Automatic detection of early gastric cancer in endoscopic images using a transferring convolutional neural network. Annu Int Conf IEEE Eng Med Biol Soc 2018;2018:4138-41. [Crossref] [PubMed]

- Wu L, Zhou W, Wan X, et al. A deep neural network improves endoscopic detection of early gastric cancer without blind spots. Endoscopy 2019;51:522-31. [Crossref] [PubMed]

- Yoon HJ, Kim S, Kim JH, et al. A lesion-based convolutional neural network improves endoscopic detection and depth prediction of early gastric cancer. J Clin Med 2019;8:1310. [Crossref] [PubMed]

- Zhu Y, Wang QC, Xu MD, et al. Application of convolutional neural network in the diagnosis of the invasion depth of gastric cancer based on conventional endoscopy. Gastrointest Endosc 2019;89:806-815.e1. [Crossref] [PubMed]

- Luo H, Xu G, Li C, et al. Real-time artificial intelligence for detection of upper gastrointestinal cancer by endoscopy: A multicentre, case-control, diagnostic study. Lancet Oncol 2019;20:1645-54. [Crossref] [PubMed]

- Cho BJ, Bang CS, Park SW, et al. Automated classification of gastric neoplasms in endoscopic images using a convolutional neural network. Endoscopy 2019;51:1121-9. [Crossref] [PubMed]

- Namikawa K, Hirasawa T, Nakano K, et al. Artificial intelligence-based diagnostic system classifying gastric cancers and ulcers: Comparison between the original and newly developed systems. Endoscopy 2020;52:1077-83. [Crossref] [PubMed]

- Shichijo S, Nomura S, Aoyama K, et al. Application of convolutional neural networks in the diagnosis of Helicobacter pylori infection based on endoscopic images. EBioMedicine 2017;25:106-11. [Crossref] [PubMed]

- Itoh T, Kawahira H, Nakashima H, et al. Deep learning analyzes Helicobacter pylori infection by upper gastrointestinal endoscopy images. Endosc Int Open 2018;6:E139-44. [Crossref] [PubMed]

- Nakashima H, Kawahira H, Kawachi H, et al. Artificial intelligence diagnosis of Helicobacter pylori infection using blue laser imaging-bright and linked color imaging: A single-center prospective study. Ann Gastroenterol 2018;31:462-8. [Crossref] [PubMed]

- Zheng W, Zhang X, Kim JJ, et al. High accuracy of convolutional neural network for evaluation of Helicobacter pylori infection based on endoscopic images: Preliminary experience. Clin Transl Gastroenterol 2019;10:e00109 [Crossref] [PubMed]

- Nakashima H, Kawahira H, Kawachi H, et al. Endoscopic three-categorical diagnosis of Helicobacter pylori infection using linked color imaging and deep learning: A single-center prospective study (with video). Gastric Cancer 2020;23:1033-40. [Crossref] [PubMed]

- Shichijo S, Endo Y, Aoyama K, et al. Application of convolutional neural networks for evaluating Helicobacter pylori infection status on the basis of endoscopic images. Scand J Gastroenterol 2019;54:158-63. [Crossref] [PubMed]

- Li C, Jing B, Ke L, et al. Development and validation of an endoscopic images-based deep learning model for detection with nasopharyngeal malignancies. Cancer Commun (Lond) 2018;38:59.

- Ebigbo A, Mendel R, Probst A, et al. Computer-aided diagnosis using deep learning in the evaluation of early oesophageal adenocarcinoma. Gut 2019;68:1143-5.

- Iwagami H, Ishihara R, Aoyama K, et al. Artificial intelligence for the detection of esophageal and esophagogastric junctional adenocarcinoma. J Gastroenterol Hepatol 2021;36:131-6.

- Cai SL, Li B, Tan WM, et al. Using a deep learning system in endoscopy for screening of early esophageal squamous cell carcinoma (with video). Gastrointest Endosc 2019;90:745-53.e2.

- Guimarães P, Keller A, Fehlmann T, et al. Deep-learning based detection of gastric precancerous conditions. Gut 2020;69:4-6.

- Zhang Y, Li F, Yuan F, et al. Diagnosing chronic atrophic gastritis by gastroscopy using artificial intelligence. Dig Liver Dis 2020;52:566-72.

- Klang E, Barash Y, Margalit RY, et al. Deep learning algorithms for automated detection of Crohn's disease ulcers by video capsule endoscopy. Gastrointest Endosc 2020;91:606-13.e2.

- Wang S, Xing Y, Zhang L, et al. Deep convolutional neural network for ulcer recognition in wireless capsule endoscopy: Experimental feasibility and optimization. Comput Math Methods Med 2019;2019:7546215.

- Yuan Y, Meng MQ. Deep learning for polyp recognition in wireless capsule endoscopy images. Med Phys 2017;44:1379-89.

- He JY, Wu X, Jiang YG, et al. Hookworm detection in wireless capsule endoscopy images with deep learning. IEEE Trans Image Process 2018;27:2379-92.

- Wu X, Chen H, Gan T, et al. Automatic hookworm detection in wireless capsule endoscopy images. IEEE Trans Med Imaging 2016;35:1741-52.

- Leenhardt R, Vasseur P, Li C, et al. A neural network algorithm for detection of GI angiectasia during small-bowel capsule endoscopy. Gastrointest Endosc 2019;89:189-94.

- Aoki T, Yamada A, Kato Y, et al. Automatic detection of blood content in capsule endoscopy images based on a deep convolutional neural network. J Gastroenterol Hepatol 2020;35:1196-200.

- Xiao J, Meng MQ. A deep convolutional neural network for bleeding detection in wireless capsule endoscopy images. Annu Int Conf IEEE Eng Med Biol Soc 2016;2016:639-42.

- Guo X, Zhang N, Guo J, et al. Automated polyp segmentation for colonoscopy images: A method based on convolutional neural networks and ensemble learning. Med Phys 2019;46:5666-76.

- Akbari M, Mohrekesh M, Nasr-Esfahani E, et al. Polyp segmentation in colonoscopy images using fully convolutional network. Annu Int Conf IEEE Eng Med Biol Soc 2018;2018:69-72.

- Bagheri M, Mohrekesh M, Tehrani M, et al. Deep neural network based polyp segmentation in colonoscopy images using a combination of color spaces. Annu Int Conf IEEE Eng Med Biol Soc 2019;2019:6742-5.

- Urban G, Tripathi P, Alkayali T, et al. Deep learning localizes and identifies polyps in real time with 96% accuracy in screening colonoscopy. Gastroenterology 2018;155:1069-78.e8.

- Poon CCY, Jiang Y, Zhang R, et al. AI-doscopist: a real-time deep-learning-based algorithm for localising polyps in colonoscopy videos with edge computing devices. NPJ Digit Med 2020;3:73.

- Zheng Y, Zhang R, Yu R, et al. Localisation of colorectal polyps by convolutional neural network features learnt from white light and narrow band endoscopic images of multiple databases. Annu Int Conf IEEE Eng Med Biol Soc 2018;2018:4142-5.

- Byrne MF, Chapados N, Soudan F, et al. Real-time differentiation of adenomatous and hyperplastic diminutive colorectal polyps during analysis of unaltered videos of standard colonoscopy using a deep learning model. Gut 2019;68:94-100.

- Wang P, Xiao X, Glissen Brown JR, et al. Development and validation of a deep-learning algorithm for the detection of polyps during colonoscopy. Nat Biomed Eng 2018;2:741-8.

- Yu L, Chen H, Dou Q, et al. Integrating online and offline three-dimensional deep learning for automated polyp detection in colonoscopy videos. IEEE J Biomed Health Inform 2017;21:65-75.

- Billah M, Waheed S, Rahman MM. An automatic gastrointestinal polyp detection system in video endoscopy using fusion of color wavelet and convolutional neural network features. Int J Biomed Imaging 2017;2017:9545920.

- Gong D, Wu L, Zhang J, et al. Detection of colorectal adenomas with a real-time computer-aided system (ENDOANGEL): A randomised controlled study. Lancet Gastroenterol Hepatol 2020;5:352-61.

- Zhou D, Tian F, Tian X, et al. Diagnostic evaluation of a deep learning model for optical diagnosis of colorectal cancer. Nat Commun 2020;11:2961.

- Ozawa T, Ishihara S, Fujishiro M, et al. Novel computer-assisted diagnosis system for endoscopic disease activity in patients with ulcerative colitis. Gastrointest Endosc 2019;89:416-21.e1.

- Takenaka K, Ohtsuka K, Fujii T, et al. Development and validation of a deep neural network for accurate evaluation of endoscopic images from patients with ulcerative colitis. Gastroenterology 2020;158:2150-7.