Automatic identification of myopia based on ocular appearance images using deep learning

Introduction

Refractive status plays a significant role in the development of photoreceptors and visual function (1,2). Myopia, an impairment of refractive ability, has become a public health concern affecting nearly 130 million people worldwide, and its prevalence is increasing at an alarming rate among school-age children globally, especially in China (3). All myopia should be corrected to avoid amblyopia and pathological myopia (4). However, most children do not notice their visual impairment, especially if only one eye is involved (5). Currently, the most widely used method for myopia screening is the uncorrected visual acuity test, which requires an acuity chart and trained nurses or technicians and has a sensitivity and specificity of 63.6% and 94.0%, respectively (6,7). Timely, annual manual optometric examinations of the entire at-risk population would help prevent the development of myopia. However, these screening examinations are expensive and time-consuming, making them an inefficient means of screening a large at-risk population (8). Therefore, a substantial number of patients, especially children with early-stage myopia and monocular myopia, may remain unnoticed and uncorrected, resulting in uncontrolled myopia progression in children and substantial global costs (9).

Refractive ability is associated with the axial length and the anatomical structures of the refractive media, such as the cornea and lens (10,11). Because of environmental influences, most eyeballs will experience axial elongation and changes in the structure or location of the cornea and lens, resulting in myopia (12). Structural changes in the eyeball are often accompanied by alterations in ocular appearance, such as strabismus, abnormal head position, a longer axial length, and steeper central corneal curvatures (13-17). However, the ocular appearance of children with myopia is not clinically distinguishable before high myopia occurs; therefore, it is clinically challenging to diagnose myopia by ocular appearance (17). Facial recognition with deep convolutional neural networks (DCNNs) has the potential to extract subtle features as well as hidden patterns, as shown in identifying facial phenotypes of genetic disorders and detecting sexual orientation (18,19). Therefore, DCNNs have great potential and may even be superior to ophthalmologists in diagnosing refractive function based on ocular appearance image features.

Recently, deep learning has been applied to many research areas in computer vision, the discipline that enables computers to understand images (20), including the diagnosis of structural ocular disorders, such as diabetic retinopathy, glaucoma, and cataract (21-23). However, the effectiveness of computer vision techniques in diagnosing diseases with detectable functional changes but insignificant morphological changes, such as intestinal functional diseases, mental disorders, and behavioral developmental disorders, remains to be studied. Deep learning models such as DCNNs are the primary candidates for most visual recognition tasks, including fine-grained recognition, which automatically discriminates categories with only subtle visual differences (24); thus, these models have great potential to be applied for the diagnosis of these kinds of diseases. Recently, Heather Cody Hazlett’s group applied a DCNN to predict autism based on brain MRI, with a sensitivity of 88% and a specificity of 95% (25). Here, we aimed to automatically detect myopia using a DCNN based on real-world 2-D ocular appearance images that can be readily obtained in daily life.

Methods

Data source

Our research data were derived from the Myopia Artificial Intelligence (AI) Program in China. After informed consent was obtained, the name, sex, and age of children aged 6–18 years were collected. Medical histories were obtained to exclude common diseases that can affect refractive status, such as diabetes and microphthalmia. Using a VX120 (Visionix Luneau, Jerusalem, Israel), noncycloplegic examinations were performed to measure eye refraction (26). Spherical lens values and cylindrical lens values were recorded. To extend the applicability of the deep learning system (DLS), different cameras, including EVA-AL00, Canon EOS 6D, iPhone 6, iPhone 7 Plus and Le X620, were used for ocular appearance image collection according to the following standards. Without glasses, children were directed to look straight ahead, and three pictures of each eye were taken from different angles at a distance of 1 m from the examiners; these included pictures taken from the side, at a 45-degree front angle, and from the front under room illuminance ranging from 300 to 500 lx.

Data preparation

We extracted data from the Myopia AI Program for deep learning. To focus on the school-aged population, we included only individuals aged 6 to 18 in the analysis. After excluding blurred images, 7,050 pictures from 2,350 different eyes were finally included in our study, which contained 1,057 myopic eyes and 1,293 nonmyopic (emmetropic and hyperopic) eyes.

The three-angle pictures of each eye were stitched into one ocular appearance image. From top to bottom, an ocular appearance image consists of the pictures taken from the side, at a 45-degree front angle, and from the front. Therefore, the 7,050 pictures were processed into 2,350 ocular appearance images. Spherical equivalent refraction (SER) [the algebraic sum in diopters (D), sphere + 1/2 cylinder] was used to determine the refractive status of each ocular appearance image. The presence of myopia was defined as an SER ≤ −0.5 D, according to the international definition of myopia (10).
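The labeling rule above can be written out as a minimal Python sketch. The class and function names (`Refraction`, `spherical_equivalent`, `is_myopic`) are hypothetical and chosen only for illustration; the formula and the −0.5 D threshold are taken from the text.

```python
from dataclasses import dataclass

# Threshold from the text: SER <= -0.5 D is labeled as myopia.
MYOPIA_THRESHOLD_D = -0.5


@dataclass
class Refraction:
    """Hypothetical container for one eye's autorefraction result."""
    sphere: float    # spherical lens value, in diopters
    cylinder: float  # cylindrical lens value, in diopters


def spherical_equivalent(r: Refraction) -> float:
    """SER = sphere + 1/2 * cylinder, in diopters."""
    return r.sphere + 0.5 * r.cylinder


def is_myopic(r: Refraction) -> bool:
    """Label an eye as myopic when its SER is at or below the threshold."""
    return spherical_equivalent(r) <= MYOPIA_THRESHOLD_D


# Illustrative values only, not taken from the study data.
borderline = Refraction(sphere=-0.25, cylinder=-0.50)  # SER = -0.5 D
emmetropic = Refraction(sphere=0.25, cylinder=-0.50)   # SER = 0.0 D
```

In this sketch the borderline eye is labeled myopic because the threshold is inclusive, whereas the second eye is labeled nonmyopic.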

Ethics statement

This study adhered to the tenets of the Declaration of Helsinki, and approval for the study protocol was obtained from the Institutional Review Board/Ethics Committee of Sun Yat-sen University (Guangzhou, China). Only partial faces (from the forehead to the nose) were contained in the ocular appearance images. All datasets used in the study were deidentified before transfer to the study investigators.

Model development

We resized the 2,350 ocular appearance images to 224×224 pixels and extracted facial features using a widely employed DCNN, VGG-Face, with transfer learning (27). VGG-Face was originally pretrained using 2.6 million images to detect appearance features (28). The dataset was randomly split into training (75%) and validation (25%) datasets, with each eye randomly assigned to one of the two. VGG-Face was then fine-tuned for the myopia classification task. Training was performed using the Keras deep learning library with a TensorFlow GPU backend (Keras version 2.2.4, TensorFlow version 1.11.0) (29). The convolutional layers and batch normalization layers were unchanged. Each image was randomly augmented by cropping and zooming. To ensure that the important image features were retained, only the outermost 5% of the image, the background area without the eyes, was cropped. The dropout rate was empirically set to 0.5, and the number of iterations was set to 250. We applied the adaptive moment estimation (Adam) optimizer with an initial learning rate of 0.000001, a beta 1 of 0.9, a beta 2 of 0.999, and a fuzz factor (epsilon) of 1e−7 with zero decay (30). The final loss was 0.3587. A diagram of the deep learning architecture is shown in Figure 1. The machine makes a diagnosis for one eye based on the ocular appearance image of that eye taken from three directions; thus, it makes only one diagnosis per image. However, the machine also makes use of the information from the front picture of the other eye for the diagnosis.
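As an illustration of the eye-level random assignment described above, the following Python sketch splits a list of eye identifiers into training and validation sets. It is not the study's code: the function name `split_by_eye`, the identifier format, and the fixed seed are assumptions made for the example; only the 75%/25% ratio comes from the text.

```python
import random


def split_by_eye(eye_ids, train_fraction=0.75, seed=0):
    """Randomly assign each unique eye to training or validation.

    Splitting on eye identifiers (rather than on individual pictures)
    keeps all views of one eye in the same subset.
    """
    unique_ids = sorted(set(eye_ids))   # deterministic starting order
    rng = random.Random(seed)           # hypothetical fixed seed
    rng.shuffle(unique_ids)
    n_train = round(train_fraction * len(unique_ids))
    return unique_ids[:n_train], unique_ids[n_train:]


# Hypothetical identifiers for 100 eyes.
example_ids = [f"eye_{i:03d}" for i in range(100)]
train_ids, val_ids = split_by_eye(example_ids)
```

With 100 hypothetical eyes, this yields 75 training and 25 validation identifiers with no eye appearing in both subsets.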

After a comparison with SER, we evaluated the performance of the DLS using the area under the curve (AUC), sensitivity (percentage of correctly predicted positive cohort cases from all positive cohort cases) and specificity (percentage of correctly predicted negative cohort cases from all negative cohort cases). The AUC and receiver operating characteristic (ROC) curve assessments were performed to evaluate the performance of our DLS.
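For readers less familiar with these metrics, the sketch below computes sensitivity, specificity, and AUC in plain Python. The function names and the toy labels and scores are illustrative assumptions, not study data; the AUC is computed through its rank interpretation (the probability that a randomly chosen positive case scores higher than a randomly chosen negative case, with ties counted as one half), and both classes are assumed to be present.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels (1 = myopia)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


def auc(y_true, scores):
    """Area under the ROC curve via pairwise comparison of scores."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))


# Toy example: three myopic and three nonmyopic eyes (made-up scores).
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.6, 0.3, 0.1]
predictions = [1 if s >= 0.5 else 0 for s in scores]  # assumed 0.5 cutoff

sens, spec = sensitivity_specificity(labels, predictions)
area = auc(labels, scores)
```

In this toy example, eight of the nine positive-negative pairs are ranked correctly, so the AUC is 8/9, and the 0.5 cutoff gives a sensitivity and specificity of 2/3 each.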

Diagnosis visualization

To visualize the areas that contributed to the diagnosis made by the DLS, saliency maps and gradient-weighted class activation maps (Grad-CAMs) were generated for each image in the dataset (31). For the saliency maps, the gradient of the model’s prediction with respect to each pixel in the input image was calculated, and the pixels that had a high impact on the prediction were highlighted. For Grad-CAM, instead of the model output, the output of the penultimate layer (the layer before the fully connected layers, usually the last convolutional layer) was used to preserve the spatial information that is lost in the fully connected layers. The warmest color represents the region of interest that contributed most to the DLS diagnosis.
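The idea behind a saliency map can be illustrated with a small, model-agnostic Python sketch. It differs from the method used here in two loudly stated ways: the gradient is approximated by central finite differences instead of backpropagation, and `toy_predict` is a hypothetical stand-in for the DLS (a logistic score over a 2×2 "image" with made-up weights).

```python
import math


def toy_predict(image):
    """Hypothetical stand-in model: logistic score of a weighted pixel sum."""
    weights = [[0.0, 0.1], [2.0, -0.5]]  # made-up weights for illustration
    z = sum(
        w * x
        for w_row, x_row in zip(weights, image)
        for w, x in zip(w_row, x_row)
    )
    return 1.0 / (1.0 + math.exp(-z))


def saliency_map(predict, image, eps=1e-4):
    """Approximate |d predict / d pixel| for every pixel.

    Uses central finite differences, so it needs two model evaluations
    per pixel; real implementations obtain the gradient in one backward pass.
    """
    rows, cols = len(image), len(image[0])
    saliency = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            plus = [row[:] for row in image]
            minus = [row[:] for row in image]
            plus[i][j] += eps
            minus[i][j] -= eps
            grad = (predict(plus) - predict(minus)) / (2 * eps)
            saliency[i][j] = abs(grad)
    return saliency


example_image = [[0.5, 0.5], [0.5, 0.5]]
example_saliency = saliency_map(toy_predict, example_image)
```

In this toy case the pixel with the largest weight (row 1, column 0) receives the highest saliency, and the pixel with zero weight receives none, which mirrors how high-impact pixels are highlighted in the maps.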

Clinical assessment

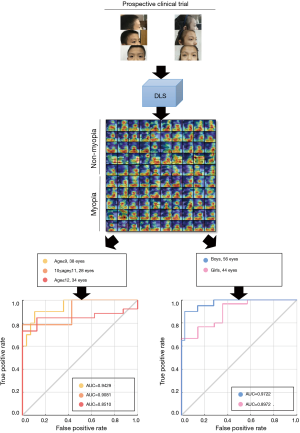

To assess the capability of the system in a real-world setting, we designed a prospective clinical trial test to compare the performance between our system and ophthalmologists (NCT04014725). The ophthalmologists were China Board-certified physicians who specialized in the clinical care of patients with ocular disorders. Fifty students (100 eyes) aged 6–18 years old were recruited for this study. The sample size of this study was calculated based on the sample sizes of previous studies (23,32). After ocular appearance images were obtained, all students were evaluated by the diagnostic system and three clinical ophthalmologists before optometric examinations. To minimize physician bias associated with prior knowledge of the children’s medical history, the physicians reviewed the same images of the children’s ocular appearance as the DLS. The status of each eye was judged as “myopia” or “nonmyopia”. Finally, the diagnoses of the system and ophthalmologists were recorded and compared.

To assess the parents’ ability to detect myopia in children, we asked three parents of myopic students, none of whom had a medical education background, to screen for myopia using the same 100 ocular appearance images. The diagnoses of these non-ophthalmologists were recorded and compared with those of the DLS and ophthalmologists.

Subgroup analysis

Since the prevalence of myopia was associated with age and sex (33), we performed subgroup analysis to investigate the influencing factors of DLS performance (34). Using the external validation dataset, AUCs were investigated in different age (≤10 vs. ≥11 years) and sex (male vs. female) subgroups.

Results

Internal validation and diagnosis visualization

In the validation test, our DLS achieved an AUC of 0.9270 (95% CI, 0.8580–0.9610), a sensitivity of 81.13% (95% CI, 76.86–85.39%), and a specificity of 86.42% (95% CI, 82.30–90.54%). The ROC curve is shown in Figure 2. The Grad-CAMs and saliency maps, which were used to examine the areas of the face on which the classifier relied, showed that the classifier focused on the eyes, especially the temporal sclera, and did not rely on the background (Figure 2).

Clinical performance

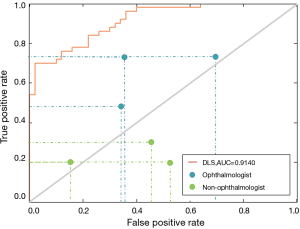

In the prospective clinical trial, 50 students (100 eyes, 56% male) with an average age of 10.06 years were finally included in our study. Figure 3 shows the prediction performance of the DLS compared to that of each ophthalmologist, both with reference to the optometric examination, using ocular appearance images from the students. The AUC of the DLS was 0.9140. For myopia detection, the DLS had a higher sensitivity (84.00%; 95% CI, 73.50–94.50%) and specificity (74.00%; 95% CI, 61.40–86.60%) than the ophthalmologists (sensitivity: 64.00%; 95% CI, 48.00–72.00%; specificity: 53.33%; 95% CI, 30.00–66.00%). In addition, the prediction performance of parents was worse than that of the DLS and ophthalmologists (Figure 3), with a sensitivity of 23.33% (95% CI, 20.00–30.00%) and a specificity of 62.00% (95% CI, 48.00–84.00%).

Subgroup analysis

The prevalence of myopia is associated with age and sex. It has been reported that girls and older children are more likely to have a worse SER and higher incidence of myopia (33,35). Therefore, we further performed subgroup analysis according to age and sex to evaluate the predictive performance of our DLS (Figure 4). For the subgroup analysis stratified by age, the AUC, sensitivity, and specificity of DLS were 0.8980, 68.75% and 86.84% for age ≤10 years; and 0.8995, 91.8% and 33.33% for age ≥11 years. For the subgroup analysis stratified by sex, the AUC, sensitivity, and specificity of DLS were 0.8976, 76.67% and 71.43% for females and 0.9722, 95.00% and 75.00% for males. The DLS achieved comparable AUCs for children of different sexes and ages.

Discussion

Our data suggest that the DLS can detect myopia through subtle morphological features. Myopia is a chronic disease that is generally diagnosed and classified by functional examinations, such as visual acuity, optometric examination, microperimetry, and electrophysiologic assessments (10). In suspected pathological myopia, the morphological changes in the retina and choroid can be further explored using optical coherence tomography (OCT), retinal autofluorescence (AF), fluorescein angiography (FA), and indocyanine green angiography (ICG) (10). In our study, a comparison of diagnostic accuracy between ophthalmologists and parents revealed that parents were less likely to detect myopia through ocular appearance. Ophthalmologists performed better in the detection of myopia by identifying ocular appearance features, such as slightly protruding eyes, which they can recognize on the basis of their clinical experience (15-17). However, the DLS showed the best performance by distinguishing the nonquantifiable morphological features of myopia. In addition, since the knowledge, experience, and impression of the signs and symptoms vary among individuals, human performance shows large variations, whereas the DLS outperforms humans in making inferences from images and extracting generic descriptors.

The Grad-CAMs and saliency maps reveal how the DLS makes its diagnostic decisions and show that the temporal sclera is of greatest importance in detecting myopia. The exposed sclera delineates the eyelid fissure, reflecting lateral protrusion of the eye. The sclera can also reflect information about strabismus. The temporal sclera contributes more than the nasal sclera does, likely because we used images from three angles for our analysis, and side pictures can only provide information pertaining to the temporal sclera. During the identification of the region of interest, the cornea area occasionally did not present a clear point of interest. This is likely because the cornea is transparent and therefore not recognizable in the images, so it does not contribute to the diagnosis of myopia.

Given the performance of this DLS and the high prevalence of uncorrected myopia worldwide (36-38), this technology, which requires only photos obtained with regular cameras such as those in smartphones, has significant implications as a widely accessible screening tool for the general population. Compared with the uncorrected visual acuity test (sensitivity, 63.6%), our DLS showed a higher sensitivity (84%) in screening for myopia in the clinical trial, providing a new myopia screening method. Optimizing sensitivity is of paramount importance for a screening tool, because sensitivity reflects the tool’s ability to correctly identify myopia cases, even if optimizing it negatively impacts specificity (39). Spot Vision Screener (Welch Allyn Inc., Skaneateles Falls, NY, USA), an automatic noncycloplegic photo screener, has both high specificity (98.55%) and sensitivity (84.61%) for myopia detection (40). However, Spot Vision Screener requires specialized equipment and facilities that are not affordable in underdeveloped regions. Our DLS requires only that children or their parents obtain an ocular appearance image at home and does not require trained nurses or technicians; therefore, the savings in regular healthcare costs could be significant.

Notably, our study has the following advantages. First, most medical AI systems use diagnoses obtained from senior doctors as the ground truth to grade a dataset. However, our system uses the result of optometry as a gold standard, making our results more objective and reliable. Second, the DLS achieved comparable AUCs for children of different sexes and ages, indicating that the system has a wide applicability among different age groups and sexes.

Several limitations should be considered when interpreting the findings of this study. First, although the AUC remained high across different sex and age groups, the sensitivity and specificity varied widely. Future research with a larger sample and more balanced sample sizes across ages and sexes is needed to enhance the performance in the subgroups. Second, the DLS has not been evaluated in multiethnic populations to validate its robustness as a diagnostic tool in different ethnic groups. Third, although the DLS was able to detect myopia, it could not provide quantitative measurements of diopters. Moreover, further improvement of the myopia classification performance will require a comprehensive future analysis of the students’ ages, their parental history and potential environmental factors.

Conclusions

In conclusion, we present a DLS that can be used for myopia detection based on ocular appearance images. This DLS learned the ocular appearance features of myopia from a dataset derived from the Myopia AI Program and had high diagnostic accuracy; hence, it could potentially be applied for routine myopia screening.

Acknowledgments

Funding: The principal investigator of this study (H Lin) is currently supported by the National Key R & D Project (2018YFC0116500), Fundamental Research Funds of Innovation and Development Project for Outstanding Graduate Students in Sun Yat-sen University (19ykyjs37), the Key Research Plan for the National Natural Science Foundation of China Cultivation Project (91846109), the National Natural Science Foundation of China (81770967), the Fundamental Research Funds for the Central Universities (2018B010109008), and the National Natural Science Fund for Distinguished Young Scholars (81822010).

Footnote

Provenance and Peer Review: This article was commissioned by the Guest Editors (Haotian Lin and Limin Yu) for the series “Medical Artificial Intelligent Research” published in Annals of Translational Medicine. The article was sent for external peer review organized by the Guest Editors and the editorial office.

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at http://dx.doi.org/10.21037/atm.2019.12.39). The series “Medical Artificial Intelligent Research” was commissioned by the editorial office without any funding or sponsorship. HL served as the unpaid Guest Editor of the series. The other authors have no other conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was approved by the Institutional Review Board/Ethics Committee of Sun Yat-sen University (Guangzhou, China).

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Holmes JM, Clarke MP. Amblyopia. Lancet 2006;367:1343-51. [Crossref] [PubMed]

- Morgan IG, Ohno-Matsui K, Saw SM. Myopia. Lancet 2012;379:1739-48. [Crossref] [PubMed]

- Holden BA, Fricke TR, Wilson DA, et al. Global Prevalence of Myopia and High Myopia and Temporal Trends from 2000 through 2050. Ophthalmology 2016;123:1036-42. [Crossref] [PubMed]

- Campos E. Amblyopia. Surv Ophthalmol 1995;40:23-39. [Crossref]

- Adashi EY, Geiger HJ, Fine MD. Health care reform and primary care--the growing importance of the community health center. N Engl J Med 2010;362:2047-50. [Crossref] [PubMed]

- Ma Y, He X, Zou H, et al. Myopia screening: combining visual acuity and noncycloplegic autorefraction. Optom Vis Sci 2013;90:1479-85. [Crossref] [PubMed]

- Bodack MI, Chung I, Krumholtz I. An analysis of vision screening data from New York City public schools. Optometry 2010;81:476-84. [Crossref] [PubMed]

- Gupta M, Gupta BP, Chauhan A, et al. Ocular morbidity prevalence among school children in Shimla, Himachal, North India. Indian J Ophthalmol 2009;57:133. [Crossref] [PubMed]

- Smith TS, Frick KD, Holden BA, et al. Potential lost productivity resulting from the global burden of uncorrected refractive error. Bull World Health Organ 2009;87:431-7. [Crossref] [PubMed]

- Flitcroft DI, He M, Jonas JB, et al. IMI–Defining and classifying myopia: a proposed set of standards for clinical and epidemiologic studies. Invest Ophthalmol Vis Sci 2019;60:M20-30. [Crossref] [PubMed]

- Mutti DO, Hayes JR, Mitchell GL, et al. Refractive error, axial length, and relative peripheral refractive error before and after the onset of myopia. Invest Ophthalmol Vis Sci 2007;48:2510-9. [Crossref] [PubMed]

- Wolffsohn JS, Flitcroft DI, Gifford KL, et al. IMI-Myopia Control Reports Overview and Introduction. Invest Ophthalmol Vis Sci 2019;60:M1-19. [Crossref] [PubMed]

- Rosenblatt RA, Andrilla CH, Curtin T, et al. Shortages of medical personnel at community health centers: implications for planned expansion. JAMA 2006;295:1042-9. [Crossref] [PubMed]

- Chiarcos C, Nordhoff S, Hellmann S. Linked Data in Linguistics: Springer, 2012.

- Atchison DA, Jones CE, Schmid KL, et al. Eye shape in emmetropia and myopia. Invest Ophthalmol Vis Sci 2004;45:3380-6. [Crossref] [PubMed]

- Carney LG, Mainstone JC, Henderson BA. Corneal topography and myopia. A cross-sectional study. Invest Ophthalmol Vis Sci 1997;38:311-20. [PubMed]

- Li F. Chinese Ophthalmology. Beijing: People's Medical Publishing House, 2005;2742-51.

- Gurovich Y, Hanani Y, Bar O, et al. Identifying facial phenotypes of genetic disorders using deep learning. Nat Med 2019;25:60. [Crossref] [PubMed]

- Wang Y, Kosinski M. Deep neural networks are more accurate than humans at detecting sexual orientation from facial images. J Pers Soc Psychol 2018;114:246. [Crossref] [PubMed]

- Forsyth DA, Ponce J. Computer vision: a modern approach: Prentice Hall Professional Technical Reference, 2002.

- Gulshan V, Peng L, Coram M, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 2016;316:2402-10. [Crossref] [PubMed]

- Li Z, He Y, Keel S, et al. Efficacy of a deep learning system for detecting glaucomatous optic neuropathy based on color fundus photographs. Ophthalmology 2018;125:1199-206. [Crossref] [PubMed]

- Long E, Lin H, Liu Z, et al. An artificial intelligence platform for the multihospital collaborative management of congenital cataracts. Nat Biomedical Engineering 2017;1:0024.

- Sharif Razavian A, Azizpour H, Sullivan J, et al. CNN features off-the-shelf: an astounding baseline for recognition. Proceedings of the IEEE conference on computer vision and pattern recognition workshops; 2014.

- Hazlett HC, Gu H, Munsell BC, et al. Early brain development in infants at high risk for autism spectrum disorder. Nature 2017;542:348. [Crossref] [PubMed]

- Sanchez I, Ortiz-Toquero S, Martin R. Intrasession Repeatability and Intersession Reproducibility Measurements Using VX120 Multidiagnostic Unit. Eye Contact Lens 2018;44 Suppl 2:S266-72. [Crossref] [PubMed]

- Pan SJ, Yang Q. A survey on transfer learning. IEEE Trans Knowl Data Eng 2009;22:1345-59. [Crossref]

- Parkhi OM, Vedaldi A, Zisserman A. Deep face recognition. BMVC, 2015.

- Chollet F. Keras. GitHub. Available online: https://github.com/fchollet/keras. 2015.

- Kingma DP, Ba J. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 2014.

- Simonyan K, Vedaldi A, Zisserman A. Deep inside convolutional networks: Visualising image classification models and saliency maps. arXiv preprint arXiv:1312.6034 2013.

- Mannino RG, Myers DR, Tyburski EA, et al. Smartphone app for non-invasive detection of anemia using only patient-sourced photos. Nat Commun 2018;9:4924. [Crossref] [PubMed]

- Zhao J, Mao J, Luo R, et al. The progression of refractive error in school-age children: Shunyi district, China. Am J Ophthalmol 2002;134:735-43. [Crossref] [PubMed]

- You QS, Wu LJ, Duan JL, et al. Factors associated with myopia in school children in China: the Beijing childhood eye study. PLoS One 2012;7:e52668. [Crossref] [PubMed]

- Wang SK, Guo Y, Liao C, et al. Incidence of and factors associated with myopia and high myopia in Chinese children, based on refraction without cycloplegia. JAMA Ophthalmol 2018;136:1017-24. [Crossref] [PubMed]

- Bourne RR, Stevens GA, White RA, et al. Causes of vision loss worldwide, 1990-2010: a systematic analysis. Lancet Glob Health 2013;1:e339-49. [Crossref] [PubMed]

- Schneider J, Leeder SR, Gopinath B, et al. Frequency, course, and impact of correctable visual impairment (uncorrected refractive error). Surv Ophthalmol 2010;55:539-60. [Crossref] [PubMed]

- Ma Y, Qu X, Zhu X, et al. Age-Specific Prevalence of Visual Impairment and Refractive Error in Children Aged 3-10 Years in Shanghai, China. Invest Ophthalmol Vis Sci 2016;57:6188-96. [Crossref] [PubMed]

- Trevethan R. Sensitivity, specificity, and predictive values: foundations, pliabilities, and pitfalls in research and practice. Front Public Health 2017;5:307. [Crossref] [PubMed]

- Barugel R, Touhami S, Samama S, et al. Evaluation of the Spot Vision Screener for children with limited access to ocular health care. J AAPOS 2019;23:153.e1-153.e5. [Crossref] [PubMed]