The use of explainable artificial intelligence to explore types of fenestral otosclerosis misdiagnosed when using temporal bone high-resolution computed tomography

Introduction

According to the World Health Organization (WHO), over 5% of the world’s population (about 466 million people) have disabling hearing loss, and it is estimated that by 2050 over 900 million will have disabling hearing loss (1). Hearing loss has a variety of causes, including genetic causes, complications at birth, infectious disease, chronic ear infections, the use of particular drugs, exposure to excessive noise, and aging. Any disease that affects sound transmission may lead to hearing loss; otosclerosis (OS) is one such disease that is both easily overlooked and causes progressive hearing loss.

Known also as otospongiosis, OS is an inner ear disease characterized by primary cavernous degeneration of the labyrinthine cysts. Clinical OS is not uncommon and occurs most commonly among Caucasians, with an incidence of 0.3–1.2%, followed by Asians at around 0.006–0.5% (2-4). Histologically, the incidence of OS is about 2.5%, which is much higher than the clinical incidence (5-10). The most frequent type of otosclerosis is fenestral otosclerosis, accounting for 91.8% of cases. It can be inferred that the clinical incidence of fenestral otosclerosis is about 0.005% to 0.5%.

OS can be divided into stapedial OS, cochlear OS, and mixed OS based on the location and scope of the lesions. Cochlear OS is the terminal form of OS; it is not difficult to diagnose because of its typical clinical and CT manifestations, and its treatment is limited to hearing aids or cochlear implantation (11). OS first affects, and is most commonly found in, the anterior area of the vestibular window, which causes stapedial OS and results in conductive hearing loss due to fixation of the stapes footplate. In addition to diagnosis based on clinical symptoms, signs, and audiological examination, the diagnostic value of high-resolution computed tomography (HRCT) has been widely recognized. With a positive diagnostic rate of 74% to 95.1% (10,12,13), HRCT is considered the first choice for the diagnosis of OS.

However, stapedial OS is often misdiagnosed, and most likely to be misdiagnosed as sensorineural deafness, congenital stapes fixation and tympanosclerosis. Missed diagnosis can also occur when combined with other ear diseases such as chronic otitis media (COM). According to Huang’s retrospective study of 37 cases, the incidence of misdiagnosis of fenestral otosclerosis is around 27% (14). Several reasons may account for this. Firstly, the clinical manifestation of stapedial OS is not typical, and most patients see a doctor with simple hearing loss. Secondly, compared with common ear diseases such as chronic suppurative otitis media and middle ear cholesteatoma, the incidence of OS is relatively low. Thirdly, and most importantly, although temporal bone CT is a highly specific examination in otology, stapedial OS lesions are not obvious using this method because the lesions are very small. A misdiagnosis may carry serious implications as untreated hearing loss may affect the ability of patients to communicate with others, hinder their daily activities and ability to work, and cause loneliness and depression. Wrongly diagnosed conditions may also subject patients to potentially unnecessary or harmful medical treatment.

Multiple etiologies have been postulated, including genetic and hereditary factors, sex, ethnicity, pregnancy, and viral infections. Sodium fluoride is prescribed to slow the disease; however, its efficacy is still controversial. Surgical correction of the sound transmission disorder caused by stapes fixation is an effective method to improve hearing. Hearing aids are also used in many patients to improve their hearing. The timely and accurate diagnosis of stapedial OS makes successful treatment with stapes implantation more likely and often results in the complete recovery of normal hearing function. Therefore, it is necessary to design an intelligent diagnosis system based on temporal bone CT scans to improve the diagnostic efficiency of stapedial OS.

In recent years, artificial intelligence (AI) technology represented by deep learning has achieved great success in various fields. Deep learning is a machine learning technique that automatically learns the most informative and representative features from input images given a large data set of labeled examples, which avoids man-induced factors such as extensive preprocessing and extraction of handcrafted visual features. This technique uses an optimization algorithm called back propagation to indicate how a machine should change its internal parameters to best predict the desired output of an image. Recently, introducing deep learning technique into computer-aided detection or diagnosis (CAD) has attracted great attention and is considered to be one of the revolutionary directions for future medical development.

Deep learning based CAD of CT abnormalities has achieved great success in various fields (15,16) such as integrating chest CT with other medical information for detecting patients with COVID-19 (17), lung nodule detection based on CT images (18), monitoring organs at risk delineation in CT images (19), and low-dose CT image reconstruction for improving lesion detectability (20). However few studies have been devoted to diagnosing ear diseases using CT volume. Mei et al. developed an approach for rapidly diagnosing disease by using chest CT and the clinical history (17) and achieved an area under the curve (AUC) of 0.92 and a sensitivity of 84.3% when tested on 279 patients. These results showed chest CT is an important method for screening early suspected COVID-19 infection patients. Hwang et al. used a deep learning model to classify 54,221 chest radiographs into normal and abnormal, and achieved an AUC of 0.979 (0.973–1.000) for image classification and 0.972 (0.923–0.985) for lesion detection (21). Shan et al. proposed an iterative CT enhancement deep neural network for improving low-dose CT image quality (20) and demonstrated a superior visual quality compared with three commercial algorithms. Collectively, these studies demonstrate the feasibility and effectiveness of utilizing CT images for disease diagnosis.

The CT value of the fissula ante fenestram of patients with stapedial OS is significantly lower than that of normal people, and developing a diagnostic system of stapedial OS based on AI is a promising choice. However, there are few studies on AI in the diagnosis and treatment of otological disease using CT images. To date, only one retrospective study based on temporal bone CT has described the application of AI in the distinction between COM and middle ear cholesteatoma (22). This is because the imaging manifestations of ear diseases are much smaller in size than those of other diseases. Therefore, it is a great challenge to train an AI model to detect stapedial OS lesions because lesions are extremely “indistinct” in CT.

We conducted a study to assess the clinical performance of otolaryngologists to diagnose OS and made an in-depth analysis of the results. We found that otolaryngologists have a clear concentration tendency to diagnose some ear samples and based on this finding, we then subdivided the types of OS and newly defined them as focal, transitional, and typical fenestral OS. We contend this new fine-grained classification can provide a guide for screening OS in clinical settings. In addition, the unsatisfactory results of otolaryngologists to diagnose OS prompted us to develop a novel explainable OS localization deep neural network [otosclerosis-Logical Neural Network (LNN)] for the automatic diagnosis of fenestral OS in temporal bone high-resolution CT images. The otosclerosis-LNN model demonstrates a promising diagnosis result that outperforms the diagnostic level of otolaryngologists. Interestingly, it also shows a similar distribution in the diagnosis of our newly defined fenestral OS types on the test set. Finally, we used the otosclerosis-LNN model to assist all otolaryngologists and found this improved their diagnosis level, especially for focal and transitional fenestral OS which had an initially high misdiagnosis rate.

Our study is the first to apply deep learning techniques to extract the region of interest from whole-volume HRCT scans of temporal bones for diagnosing fenestral otosclerosis. We validated the proposed model to be an effective computer-aided diagnosis model of fenestral otosclerosis in a large-scale study containing 31,774 CT slices from 144 patients. The model achieved an AUC of 99.5% (per-ear-sensitivity of 96.4%, per-ear-specificity of 98.9%) on the collected test set, indicating great diagnostic performance. Furthermore, we used this model to assist otologists and observed a consistent and significant improvement in their diagnostic performance. We present the following article in accordance with the STARD reporting checklist (available at http://dx.doi.org/10.21037/atm-21-1171).

Methods

Dataset collection

The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). The study was approved by the institutional committee of Eye and ENT Hospital, Fudan University (No. 2020005), and informed consent was obtained from all the patients. All authors had access to the study data and reviewed and approved the final manuscript. All training CT scans were collected from the central CT room of Eye and ENT Hospital, Fudan University and were acquired with a 128-channel multidetector SOMATOM Definition Edge CT scanner (Siemens Inc., Munich, Germany). Each CT scan contained the complete structure of the temporal bone. Axial sections 0.6 mm thick were obtained with the following CT scanner settings: collimation of 128 mm × 0.6 mm, field of view of 220 mm × 220 mm, pitch of 0.8 mm, matrix size of 512×512, voltage of 120 kV, and current of 240 mAs. Each scan contained around 100 axial CT slices, and all images were downloaded from the Cloud server and saved at a size of 512×512 in DCM format for training.

A total of 994 cases in the training set who received artificial stapes implantation in the central area of Eye and ENT Hospital, Fudan University from July 16, 2014, to November 13, 2019, were identified (see Figure 1 and Table S1). Among them, 712 cases that underwent one or more CT scans were selected, and a total of 794 scans were available for analysis. After removing the normal/cochlear OS/mixed OS ears and operated ears, 990 ears of stapedial OS CT were obtained. Finally, we sought cases with external auditory meatus tumor/new organism resection in the central area of Eye and ENT Hospital, Fudan University from July 16, 2014, to November 13, 2019. We obtained 775 cases, determined 300 cases, removed the suspected middle ear/inner ear disease ear CT scans by checking the medical history and examination results, and finally pretreated 341 ears of CT scans as a normal control study.

All testing CT scans were collected from the new CT room of Eye and ENT Hospital, Fudan University from December 1, 2019, to April 30, 2020. These scans were acquired with a 128-channel multidetector SOMATOM Definition Flash CT scanner (Siemens Inc., Munich, Germany), and axial sections 0.6 mm thick were obtained with the same CT scanner settings. All images were downloaded from the doctors’ workstation and saved at a size of 512×512 in JPG format for testing.

Manual labelling

To train the otosclerosis-LNN model, we manually annotated the axial ear CT images in the training set and developed a Bounding-Box Marker annotation software to facilitate the annotation process for otologists. Each CT was then preprocessed, split into left and right ears, and cropped to a rectangular clipping box determined by the preprocessing algorithm. This ensured that the structure of the inner ear, middle ear, and outer ear could be displayed on each level, thus removing irrelevant areas and enlarging related areas to facilitate further labelling. For stapedial OS region labelling, otologists consulted the case information (mainly surgical records) and image reports to ensure the accuracy of labelling and annotated each CT slice with special attention to the vestibular window area. For the reduced bone mineral density shadow (the location of stapedial OS), experienced doctors used a rectangular red box to mark as close as possible to the focus, and after each annotation, a new folder was generated for the AI model to learn from. The non-OS CT samples were then annotated. We first ensured there was no OS by carefully checking the medical history and imaging reports, then marked a rectangular area around the stapes footplate with a green box because the location of stapedial OS is relatively fixed. This box is relatively large, covering the possible location of stapedial OS lesions, and spans more labelling layers, covering the possible layers of fenestral OS. Similarly, the annotation result of each ear CT image was placed in the newly generated folder for AI model training.

Model architecture

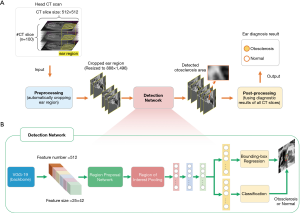

The main purpose of this study was to develop an explainable OS localization deep neural network (otosclerosis-LNN) for the detection and triage of ears based on whole-volume temporal bone HRCT scans. The average number of CT slices per patient was 103, and only about 3% contained lesions, so CT slices containing fenestral OS accounted for a very small proportion of the collected dataset. The stapedial OS region is also small within a CT slice, accounting for less than 0.2% of the whole slice, which results in a severe class imbalance problem. To deal with this, we decomposed the diagnosis of fenestral OS from an ear CT volume into three stages: a pre-processing stage, an OS detection network, and a post-processing stage (Figure 2A,B). The first stage is a conventional image processing algorithm that filters out non-ear CT slices and automatically crops out areas that may be ears; in the cropped ear slices, normal and fenestral OS were relatively balanced. The cropped ear regions were then fed into the deep detection network to localize the OS area. For an input 3D head CT scan, detections of OS lesions and normal structures usually occur in several runs of consecutive slices as well as in isolated slices. Therefore, the post-processing stage heuristically fused all CT slice results to produce the final ear diagnosis, which was either normal or fenestral OS.

Pre-processing stage

A conventional image processing algorithm was developed to automatically crop out ear regions from the temporal bone HRCT slices. In the first step, the algorithm took the first temporal bone CT image and binarized it with a brightness threshold of T1=50. Regions with brightness greater than T1 were retained and resized to 122×364 using the bicubic algorithm. The right and left ear areas were then cropped out of those regions using boxes of the form [x1, y1, x2, y2], where [1, 181, 184, 295] was used for the right ear and [184, 185, 364, 295] for the left ear. Finally, the cropped ear areas were resized to 888×1,496 and normalized to 0–1 to facilitate detection by the subsequent detection network.
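The thresholding, cropping, and normalization steps described above can be sketched as follows. This is a minimal pure-Python illustration and not the authors' implementation: the function names and the nested-list image representation are hypothetical, the box coordinates are assumed to be 1-based and inclusive, min-max scaling is assumed for the 0–1 normalization, and both resizing steps are omitted.

```python
from typing import List, Sequence, Tuple

Image = List[List[float]]
Box = Tuple[int, int, int, int]  # [x1, y1, x2, y2]

T1 = 50  # brightness threshold given in the text
RIGHT_EAR_BOX: Box = (1, 181, 184, 295)   # from the text
LEFT_EAR_BOX: Box = (184, 185, 364, 295)  # from the text


def binarize(image: Sequence[Sequence[float]], threshold: float = T1) -> List[List[int]]:
    """Mark pixels brighter than the threshold with 1 and all others with 0."""
    return [[1 if px > threshold else 0 for px in row] for row in image]


def crop(image: Sequence[Sequence[float]], box: Box) -> Image:
    """Crop a box, assuming 1-based inclusive coordinates (an assumption)."""
    x1, y1, x2, y2 = box
    return [list(row[x1 - 1:x2]) for row in image[y1 - 1:y2]]


def normalize(image: Sequence[Sequence[float]]) -> Image:
    """Min-max scale pixel values to the range 0-1 (assumed scaling rule)."""
    flat = [px for row in image for px in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # constant image: avoid division by zero
        return [[0.0 for _ in row] for row in image]
    return [[(px - lo) / (hi - lo) for px in row] for row in image]
```

In a full pipeline, the bicubic resizing described in the text would sit before `crop` and again before `normalize`; an image library would normally handle that step.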

Detection network

The detection network was used to detect fissula ante fenestram areas in the cropped ear CT slices produced by the pre-processing stage. The overall network structure of the detection network was mainly based on the Faster-RCNN model (23), which is a classic deep network model for detecting natural objects. We modified this by replacing its backbone using the pre-trained VGG-19 model (24), which helped to extract more general and discriminative visual features (512×25×42). These visual features were used to generate the features of each bounding box region in the input CT slice by the region proposal network (RPN) and region of interest pooling (RoI Pooling) (23,25). By performing bounding box regression and classification, we obtained the OS detection results for each CT slice. In general, there were multiple continued slices and discrete slices of the input 3D head CT scan detected including OS lesions. Therefore, we needed a post-processing stage to heuristically fuse all CT detection results, producing the final ear diagnosis result, which was normal or OS.

Post-processing stage

This stage output the final ear diagnosis result (normal or fenestral OS) by fusing the detection results of all CT slices. Firstly, CT slices whose normal or OS bounding box had a low probability (less than the threshold P1=0.5) were filtered out. The purpose of this step was to make a preliminary screening and directly exclude results with a markedly low confidence level so that they did not affect the subsequent selection of the longest subsequence; the threshold could therefore be relatively relaxed. The subsequence with the longest run of consecutive CT layers was then selected from the remaining slice results. If there were multiple subsequences of the same length, the one with the higher maximum confidence was selected. Since the fissula ante fenestram area is continuous, the purpose of this step was to determine the CT layers containing the area of the stapes footplate. Next, the results in the subsequence were filtered by confidence, and all results whose confidence probability was less than the threshold P2=0.99 were eliminated. The purpose of this step was to select the most valuable results in the subsequence for subsequent diagnosis, so the threshold was set relatively high. Finally, we calculated the ratio between the number of slices in the selected subsequence detected as lesions and the total number of remaining slices. We considered stapedial OS to be present when the ratio was greater than 0.25; otherwise, the ear was considered normal. The purpose of this step was to synthesize all CT slice results to obtain the final diagnosis result.
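The fusion heuristic above can be sketched as follows. This is a minimal illustration under stated assumptions rather than the authors' code: the `SliceResult` record and function names are hypothetical, each slice is assumed to carry a single detection, the "remaining slices" in the final ratio are taken to be those that survive the P2 filter, and an ear with no surviving slices is assumed to be reported as normal.

```python
from dataclasses import dataclass
from typing import List

P1 = 0.5      # relaxed pre-screening threshold (from the text)
P2 = 0.99     # strict in-sequence threshold (from the text)
RATIO = 0.25  # lesion-slice ratio above which the ear is called OS


@dataclass(frozen=True)
class SliceResult:
    index: int         # position of the slice in the CT volume
    label: str         # "os" or "normal" (hypothetical encoding)
    confidence: float  # detection probability for that label


def longest_run(results: List[SliceResult]) -> List[SliceResult]:
    """Longest run of consecutive slice indices; ties go to higher max confidence."""
    runs: List[List[SliceResult]] = []
    current: List[SliceResult] = []
    for r in sorted(results, key=lambda r: r.index):
        if current and r.index != current[-1].index + 1:
            runs.append(current)
            current = []
        current.append(r)
    if current:
        runs.append(current)
    if not runs:
        return []
    return max(runs, key=lambda run: (len(run), max(r.confidence for r in run)))


def diagnose_ear(results: List[SliceResult]) -> str:
    """Fuse per-slice detections into one ear-level diagnosis."""
    kept = [r for r in results if r.confidence >= P1]   # step 1: pre-screen
    run = longest_run(kept)                             # step 2: longest run
    confident = [r for r in run if r.confidence >= P2]  # step 3: strict filter
    if not confident:
        return "normal"  # assumption: nothing confident means no OS call
    os_ratio = sum(r.label == "os" for r in confident) / len(confident)
    return "fenestral OS" if os_ratio > RATIO else "normal"  # step 4
```

Keeping the two thresholds as separate constants mirrors the two distinct roles the text gives them: P1 only removes obvious noise before the run is selected, while P2 decides which slices count toward the final ratio.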

Training details

The otosclerosis-LNN model was trained on the collected training set. Data augmentations such as random horizontal flipping and adding noise were employed during training to enhance the generalization ability of the model. We used the Adam optimizer (26) with a small initial learning rate of 1e-5 to minimize the bounding box regression and classification losses. The output of the otosclerosis-LNN model represented the probability that a CT slice was OS or normal; if the probability was greater than 0.5, the slice was classified as fenestral OS, and otherwise as normal. We trained the otosclerosis-LNN model on an Nvidia RTX 2080Ti graphics processing unit with 8 GB of memory and an Intel i5-8400 @ 2.80 GHz processor with 24 GB of RAM, with the training batch size set to 4. Training ran for 269 epochs, which took about three days (68 hours). The model was implemented with the PyTorch library (27) in Python 3.7.

Statistical analysis

We evaluated the performance of our otosclerosis-LNN model and assessed its potential clinical utility on a prospectively collected external test set. Given the temporal bone HRCT volume of an ear, the model localized the position of OS lesions in the input CT volume and output the probability that the ear was fenestral OS or normal. The receiver operating characteristic (ROC) curve was obtained by varying the confidence setting for CT slices. The sensitivity, specificity, accuracy, positive predictive value (PPV), and negative predictive value (NPV) of this approach on the external test set were 96.43%, 98.86%, 98.07%, 97.59%, and 98.30%, respectively. In addition, we compared the otosclerosis-LNN approach with seven otolaryngologists (two chief physicians, three associate chief physicians, one attending doctor, and one resident doctor) on the clinical test set. Each otolaryngologist independently classified each case as fenestral OS or normal; there was no limit on the reading time of each doctor, and only the diagnostic accuracy was assessed. Finally, we evaluated the ability of the otosclerosis-LNN model to assist otolaryngologists in diagnosis. All otolaryngologists were blinded to the performance of the model, and after three months, all participants were invited to classify all cases in the clinical test set again with reference to the diagnostic results of the model.
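For reference, the five per-ear metrics reported above follow directly from the counts of a 2×2 confusion matrix. The sketch below uses the standard definitions; the function name is hypothetical and the counts in the usage example are illustrative values, not the study's data.

```python
from typing import Dict


def _ratio(numerator: int, denominator: int) -> float:
    """Safe division that returns NaN when the denominator is zero."""
    return numerator / denominator if denominator else float("nan")


def diagnostic_metrics(tp: int, fn: int, tn: int, fp: int) -> Dict[str, float]:
    """Standard per-ear metrics, with fenestral OS as the positive class."""
    return {
        "sensitivity": _ratio(tp, tp + fn),            # OS ears correctly flagged
        "specificity": _ratio(tn, tn + fp),            # normal ears correctly cleared
        "accuracy": _ratio(tp + tn, tp + fn + tn + fp),
        "ppv": _ratio(tp, tp + fp),                    # positive predictive value
        "npv": _ratio(tn, tn + fn),                    # negative predictive value
    }


# Illustrative counts only (not taken from the study).
example = diagnostic_metrics(tp=45, fn=5, tn=90, fp=10)
```

Unlike sensitivity and specificity, PPV and NPV depend on the ratio of OS to normal ears in the test set, so they should be read alongside the composition of that set.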

Results

Study of otolaryngologists’ performance in clinically diagnosing OS

To explore the clinical performance of otolaryngologists in diagnosing OS, we invited seven otolaryngologists and assessed their diagnostic level for stapedial OS on the collected test set (see Figure 3 for the collection process). Participants included two chief physicians with over 20 years of experience, two associate chief physicians with about 15 years of experience, one associate chief physician with about 10 years of experience, one attending physician with about 10 years of clinical experience, and one resident with about 2 years of experience. Each doctor was required to independently distinguish stapedial OS from normal temporal bone by reading CT scans only, in the absence of other clinical information. It is important to note that before we invited the seven doctors to participate in the test, we also invited other doctors who had just entered the hospital or had worked for 5 years to take the test. As most of the latter group did not know the CT manifestations of fenestral OS, their results amounted to guesswork, which reflects both the challenges in the clinical diagnosis of fenestral OS and the high diagnostic level of the seven invited otolaryngologists.

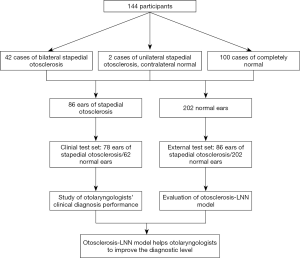

The external test set was prospectively collected as shown in Figure 3. By April 30, 2020, a total of 42 cases of bilateral stapedial OS and two of unilateral stapedial OS were collected (see Table S2). The operative ear diagnosis was based on what was seen during the operation, which was considered as the gold standard. The contralateral ear diagnosis was based on the comprehensive analysis of imaging experts reports and medical history, forming 86 ears with stapedial OS in the external test set. In addition, the information of outpatients in new area of Eye and ENT Hospital, Fudan University in April 2020 was collected, and 100 cases of completely normal CT images were included in the test set according to the diagnosis report of imaging experts combined with clinical manifestation and examinations.

In summary, the external test set consisted of 86 ears of stapedial OS and 202 normal ears. To reduce the diagnostic deviation caused by fatigue in reading the images, we randomly selected 30 cases from 100 cases with normal CT and 40 cases from 44 cases with stapedial OS, finally forming the clinical test set for doctors to read as 78 ears of stapedial OS mixed with 62 normal ears. The prospective and complete collection of OS cases admitted in the new area of Eye and ENT Hospital, Fudan University began on December 1, 2019 and was part of our project Artificial intelligence in diagnosis and treatment decision-making of middle ear diseases based on temporal bone CT scans (Registration number: ChiCTR1900027535) and strictly carried out in accordance with the plan. This trial was verified on November 17, 2019, and detailed information can be seen on the website, Chinese Clinical Trial Registry.

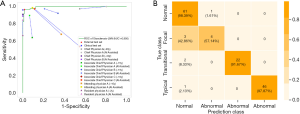

This clinical study demonstrated that the performance of otolaryngologists in diagnosing stapedial OS was unexpectedly low (see Figure 4). The two chief physicians achieved the best average diagnostic results in terms of sensitivity and accuracy (Figure S1 and Table S3), and the two associate chief physicians achieved an average sensitivity and specificity of 70.07% and 67.70%, respectively. The attending physician (~10 years) achieved a sensitivity and specificity of 67.90% and 62.90%, respectively, and the resident (~2 years) achieved a sensitivity and specificity of 66.70% and 100%, respectively. The results show that although the chief physicians demonstrated a higher diagnostic level on the test set than the other doctors, there is still much room for improvement, and misdiagnoses occur frequently.

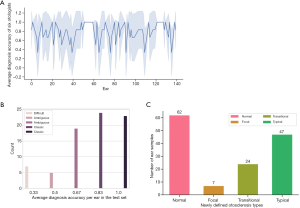

Defining new lesion types of fenestral OS based on the analysis of otolaryngologists’ diagnosis results

The unsatisfactory performance of otolaryngologists prompted us to further analyze which types of fenestral OS led to misdiagnoses. We tallied the diagnosis results of each ear by each doctor in the test set and obtained the average diagnostic accuracy and variance for each ear (Figure 5A). It should be noted that we did not include the diagnosis of the attending physician in the definition process. The attending physician paid more attention than the others to the facial nerve, as he believed that fenestral OS blurs the horizontal segment of the facial nerve in images. Although this has a certain diagnostic efficacy, we believe that only typical fenestral OS has this manifestation. As a precaution, we removed his diagnosis during the definition process, and the diagnoses of the other six otologists contributed to this new subdivision. According to their average diagnostic accuracy, we further classified the fenestral OS lesions (see Figure 5B): lesions with an average diagnostic accuracy of 0.33 were defined as “focal”, those with 0.5 or 0.67 as “transitional”, and those with 0.83 or 1.0 as “typical” fenestral OS. These three types were highly related to the misdiagnosis rate of otologists, and we counted the number of each type in the test set (Figure 5C and Figure S2).
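The subdivision rule can be expressed as a small lookup on the number of correct reads out of six. The sketch below is illustrative only: the function names are hypothetical, the variance is assumed to be the population variance of the per-reader correctness values, and ears with fewer than two correct reads return `None` because the text does not assign them a type.

```python
import statistics
from typing import List, Optional, Tuple

N_READERS = 6  # the six otologists retained for the definition


def accuracy_stats(reads: List[bool]) -> Tuple[float, float]:
    """Mean accuracy and (assumed population) variance across readers for one ear."""
    values = [1.0 if correct else 0.0 for correct in reads]
    return statistics.fmean(values), statistics.pvariance(values)


def lesion_type(reads: List[bool]) -> Optional[str]:
    """Map the six readers' correctness on one OS ear to a lesion type."""
    if len(reads) != N_READERS:
        raise ValueError(f"expected {N_READERS} reads, got {len(reads)}")
    correct = sum(reads)
    if correct == 2:        # average accuracy 0.33
        return "focal"
    if correct in (3, 4):   # average accuracy 0.5 or 0.67
        return "transitional"
    if correct in (5, 6):   # average accuracy 0.83 or 1.0
        return "typical"
    return None             # 0 or 1 correct: not covered by the stated rule
```

Working with the integer count of correct reads rather than the rounded averages avoids floating-point comparisons against values such as 0.33 and 0.67.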

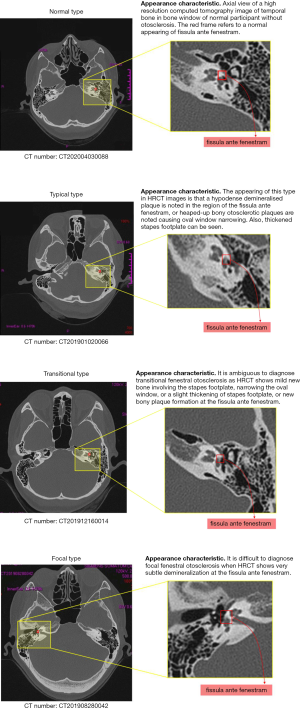

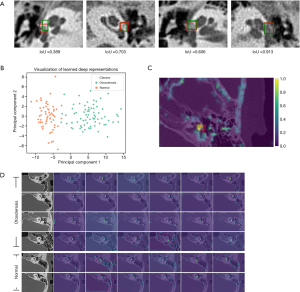

We found that there was an obvious concentration trend in the diagnosis results of doctors for some ear samples. For example, some ears were misdiagnosed by most otolaryngologists, and some were correctly diagnosed with high confidence. The diagnosis results of some ears showed large variance, indicating that the otolaryngologists have differed considerably in the diagnosis of these ears. Based on this finding, we brought these CT images to experienced otologists for further discussion and study. The otologists found that these images with different diagnostic accuracy demonstrated a relatively slight appearance difference (see Figure 6).

The appearance of typical fenestral OS in high-resolution CT (HRCT) images is of a hypodense demineralised plaque in the region of the fissula ante fenestram, or as heaped-up bony otosclerotic plaques causing oval window narrowing. Thickened stapes footplate can also be seen. Transitional fenestral OS is ambiguous to diagnose as HRCT shows mild new bone involving the stapes footplate, narrowing of the oval window, a slight thickening of the stapes footplate, or new bony plaque formation at the fissula ante fenestram. Focal fenestral OS is also difficult to diagnose because the HRCT shows very subtle demineralization at the fissula ante fenestram and there is no thickening of the stapes plate.

Development and validation of an OS localization deep neural network based on temporal bone high-resolution CT images

The unsatisfactory results of the study evaluating the performance of otolaryngologists in clinical diagnosis prompted us to develop a CAD system to help otologists achieve better diagnostic performance. For this purpose, we developed an explainable OS localization deep neural network (otosclerosis-LNN) for the detection and triage of ears based on whole-volume temporal bone HRCT scans (see Figure 7A,B for detailed model architecture). To develop the model, we collected 134,574 CT slices from 1,294 patients who underwent a CT examination at Eye and ENT Hospital, Fudan University from July 16, 2014, to November 13, 2019 (Table S3). All scans were performed by radiologists using a standard temporal bone CT protocol, and the dataset included 994 OS patients and 300 normal patients (see Figure 1 for the collection process). All CT scans were preprocessed and split into left and right ears, and each CT image was cropped to a rectangular region (containing the ear structure) to facilitate further labelling of the lesions.

In the labelling process, we carefully checked the case information (mainly surgical records and imaging reports), eliminated normal/cochlear OS/mixed OS temporal bone CTs, and finally obtained 990 ears of stapedial OS. The label (diagnosis) of the surgical ear was marked according to the intraoperative diagnosis while for the unoperated ear, the label was a comprehensive diagnosis based on imaging reports of an imaging expert and medical history. The average number of CT slices per patient was 103, and only about 3% contained visible lesions. Additionally, the proportion of fenestral OS in the total number of CT slices of temporal bone was 1.83% (see the rightmost figure in Figure S3). Such a small proportion indicates that CT slices containing fenestral OS account for a very small proportion of the total number of CT slices in the collected dataset and shows the number of normal and non-ear CT slices is large and represents a serious class imbalance problem. Class imbalance typically poses a great challenge to designing model structures (25,28), updating model parameters for convergence, and maintaining the balance of test performance for each category.

To deal with this extreme class imbalance problem, we employed an end-to-process strategy that could effectively turn this into a class balance problem by fully exploiting the advantages of conventional image processing algorithms and deep learning technology. Specifically, we used ear CT slices instead of whole temporal bone CT slices to train the deep learning-based OS detection network because the proportion of fenestral OS in the total number of ear CT slices of temporal bone was 71.21% (see the rightmost figure in Figure S3). Therefore, the class imbalance problem was largely alleviated.

To evaluate the effectiveness of our otosclerosis-LNN, we conducted the fenestral OS localization experiment on the collected test set containing 31,774 CT slices from 288 ears (see Figure 3 and Table S1). The whole-volume temporal bone HRCT scans were fed into the otosclerosis-LNN model. The model then output the positions of fenestral OS in the input ear CT scans and the classification result of normal or fenestral OS for each ear. The AUC, sensitivity, and specificity of our otosclerosis-LNN approach were 99.5%, 96.4%, and 98.9%, respectively. Figure 7A and Figures S4,S5 show the comparison with all otolaryngologists; the model had a higher screening performance than both the two chief physicians (~20 years) and the other doctors.

It is also interesting to understand the diagnostic performance of the otosclerosis-LNN model for our newly defined three types of fenestral OS mentioned in the above section. We demonstrated another type of “confusion matrix” (see Figure 7B), where the vertical axis denotes the true class containing four types and the horizontal axis denotes the predicted class containing only two types (normal or abnormal) because the output of the otosclerosis-LNN model is two types, i.e., normal or fenestral OS. This new type of confusion matrix clearly demonstrated the detailed prediction results for the newly defined fine-grained OS types. The matrix demonstrated that the otosclerosis-LNN model achieved good diagnostic performance for transitional and typical types, while showing ambiguous judgment when dealing with focal ear CT scans. This result indicates that compared with doctors, the improvement of diagnostic performance of the model is mainly due to the improvement of diagnostic accuracy on the transitional ears. Simultaneously, we observed a gradual decline trend of the diagnostic accuracy of the model for typical, transitional, and focal types, which indirectly indicated the further classification of fenestral OS discussed in the above section was reasonable. In addition, we found that the serious class imbalance problem did not lead to significant class bias in the test results of our otosclerosis-LNN model.
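A 4×2 table of this kind can be built by counting (true type, predicted class) pairs. The sketch below is a minimal illustration: the function name and label strings are hypothetical, and the four true classes are assumed to be normal plus the three newly defined OS types.

```python
from collections import Counter
from typing import List, Sequence

# Assumed row and column labels for the 4x2 table.
TRUE_CLASSES = ("normal", "focal", "transitional", "typical")
PREDICTED_CLASSES = ("normal", "fenestral OS")


def fine_grained_confusion(true_types: Sequence[str],
                           predictions: Sequence[str]) -> List[List[int]]:
    """Rows follow TRUE_CLASSES, columns follow PREDICTED_CLASSES."""
    if len(true_types) != len(predictions):
        raise ValueError("true_types and predictions must have equal length")
    counts = Counter(zip(true_types, predictions))
    return [[counts[(t, p)] for p in PREDICTED_CLASSES] for t in TRUE_CLASSES]


def per_type_accuracy(matrix: List[List[int]]) -> List[float]:
    """Fraction of each true class that was predicted correctly."""
    accuracies = []
    for row_index, row in enumerate(matrix):
        correct = row[0] if row_index == 0 else row[1]  # normal row vs OS rows
        total = sum(row)
        accuracies.append(correct / total if total else float("nan"))
    return accuracies
```

Reading the per-row accuracy from top to bottom is one way to check for the gradual change across typical, transitional, and focal types described above.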

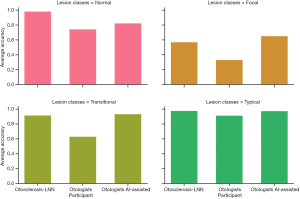

Improving the ability of otolaryngologists to screen fenestral OS with the assistance of the otosclerosis-LNN model

We further conducted an experiment using the otosclerosis-LNN model to assist otolaryngologists in screening fenestral OS in temporal bone CT scans. Firstly, we set up a week for memory washout; then, after three months, we invited the seven otolaryngologists to screen the temporal bone CT scans of the test set for fenestral OS again, this time with reference to the results of the otosclerosis-LNN model, while remaining blinded to the model’s diagnostic performance. The experimental results (see Figure 8, Figure S6 and Table S4) demonstrated that the diagnostic performance of all otolaryngologists was significantly improved with the assistance of the otosclerosis-LNN model. The improvement was most significant in the focal and transitional ear samples, probably because these two types are the main sources of misdiagnosis. Overall, the average diagnostic ability of the seven otolaryngologists improved in all lesion types.

Explanation of the detection network in the otosclerosis-LNN model

Interpretable artificial intelligence in our study means that the proposed diagnostic model provides not only diagnostic results but also the diagnostic basis for doctors. The diagnostic basis we provide includes three aspects. First, the neural responses learned by the detection network are visualized, showing that the areas highlighted by the detection network coincide with the fissula ante fenestram areas carefully labelled by experienced otologists. Second, the learned lesion representation is visualized on a 2D plane by using PCA to reduce its dimension; the visualization shows that the normal and fenestral otosclerosis ear samples are well separated by the diagnostic model. Third, and most importantly, compared to previous deep learning-based diagnosis works that only output a diagnostic result with a probability, our diagnostic model not only outputs the diagnostic result of normal or fenestral OS but also outlines the possible lesion regions in CT slices. This greatly increases its applicability in clinical practice and helps doctors understand the diagnostic basis of the model.

To understand why the otosclerosis-LNN model works, we visualized the neural responses learned by the detection network in the model. To do this, we performed a forward inference of the model on input HRCT slices and then randomly selected six feature maps output by the convolutional layers before the final fully connected layer. This experiment was conducted on an independent test set, and the results (see Figure 9) demonstrated that the areas on which the detection network focused were the fissula ante fenestram areas carefully marked by experienced otologists (Figure 9C,D). This explains to a certain extent why the model could accurately diagnose lesions around the stapes footplate. In addition, after using PCA to reduce the feature dimension of the classification layer and visualizing it on a 2D plane, we observed that normal and fenestral OS ear samples were well separated (see Figure 9B).
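The PCA projection step can be sketched as below. This is a dependency-free illustration only: the 8-dimensional features and the two Gaussian clusters are synthetic assumptions, the function names are hypothetical, and the study does not state which PCA implementation was used. In practice a numerical library would normally replace the hand-written power iteration.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def _dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def _top_eigenpair(mat: Matrix, rng: random.Random, iters: int = 500) -> Tuple[Vector, float]:
    """Leading eigenvector and eigenvalue of a symmetric positive
    semi-definite matrix, estimated by power iteration."""
    vec = [rng.gauss(0.0, 1.0) for _ in mat]
    norm = math.sqrt(_dot(vec, vec))
    vec = [x / norm for x in vec]
    for _ in range(iters):
        nxt = [_dot(row, vec) for row in mat]
        norm = math.sqrt(_dot(nxt, nxt))
        if norm == 0.0:
            break
        vec = [x / norm for x in nxt]
    return vec, _dot(vec, [_dot(row, vec) for row in mat])


def pca_2d(features: Matrix, seed: int = 0) -> List[Tuple[float, float]]:
    """Project row vectors onto their first two principal components."""
    n, d = len(features), len(features[0])
    mean = [sum(row[j] for row in features) / n for j in range(d)]
    centered = [[row[j] - mean[j] for j in range(d)] for row in features]
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    rng = random.Random(seed)
    v1, lam1 = _top_eigenpair(cov, rng)
    # Deflation removes the first component so power iteration finds the second.
    deflated = [[cov[i][j] - lam1 * v1[i] * v1[j] for j in range(d)] for i in range(d)]
    v2, _ = _top_eigenpair(deflated, rng)
    return [(_dot(r, v1), _dot(r, v2)) for r in centered]


# Synthetic stand-ins for classification-layer features (not study data).
data_rng = random.Random(42)
normal_ears = [[data_rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(50)]
os_ears = [[data_rng.gauss(3.0, 1.0) for _ in range(8)] for _ in range(50)]

points = pca_2d(normal_ears + os_ears)
pc1_normal = [p[0] for p in points[:50]]
pc1_os = [p[0] for p in points[50:]]
# The sign of a principal component is arbitrary, so check both orderings.
separated = max(pc1_normal) < min(pc1_os) or max(pc1_os) < min(pc1_normal)
print("classes separated along PC1:", separated)
```

Plotting the returned pairs with one color per class would give a scatter plot of the same kind as the 2D visualization described above.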

Discussion

Several AI-based CAD approaches have been developed to assist doctors in recent years, such as predicting the prognosis of lung cancer patients (18,29), detecting abnormality in lower extremity radiographs (21,30), delineating all organs at risk (19), and predicting drug-protein interaction (41). Compared with these rapidly developing medical directions, research on AI-based otology diagnosis is still in its infancy. We took a substantial step forward by proposing a novel explainable OS localization deep neural network for the detection and triage of ears based on temporal bone HRCT. The proposed otosclerosis-LNN model, trained in a supervised manner on a collected dataset containing 134,574 CT slices from 1,331 patients, demonstrated a favorable performance for automated OS localization in three-dimensional temporal bone CT scans. The model achieved an AUC of 99.5% (per-ear sensitivity of 96.4%, per-ear specificity of 98.9%) on the collected test set containing 31,774 CT slices from 144 patients, which is an encouraging result in view of the high variability in the input CT slices, such as the diversity of patients and the presence of various other unlabeled abnormalities.

This study is the first to apply a deep learning technique to extract the region of interest from whole-volume high-resolution CT scans of temporal bones for diagnosing fenestral OS. Previous diagnostic works based on deep learning paid less attention to otological diseases and more to the skin, breast, lung, liver, retina, esophagus, and colon (18,19,21,29,31-38). Some diagnostic works for ear disease have achieved promising results, such as the diagnosis of COM based on temporal bone CT scans using deep learning (22), the diagnosis of secretory otitis media with a machine learning algorithm (22), the diagnosis of ear diseases based on otoscope images using ensemble deep models (39), and the prediction of hearing and speech perception in children with cochlear implants using AI technology (40). However, no studies have been devoted to diagnosing fenestral OS lesions, even though fenestral OS is the ear disease most likely to be misdiagnosed and the one with the most obvious therapeutic effect. In addition, because the model can automatically extract all stapes footplate regions in CT scans, the otosclerosis-LNN model could potentially be used not only for the diagnosis of fenestral OS but also for the diagnosis of other otological diseases in the region of the stapes footplate.

We conducted a detailed analysis of the otolaryngologists’ diagnostic results in the clinical diagnostic study and observed, through statistical analysis, a significant tendency for the diagnoses of some ear samples to be concentrated. Based on the statistical results, we further subdivided stapedial OS into focal, transitional, and typical types and found that the otosclerosis-LNN model showed similar diagnostic results for these three OS types, as evidenced by the diagnostic accuracy gradually decreasing from the typical type to the focal type. This result indirectly demonstrated the rationality of the fine-grained classification for fenestral OS. Our newly defined OS types can provide a guide for fenestral OS diagnosis in clinical practice.

In addition, our otosclerosis-LNN model employs an end-to-process strategy instead of the end-to-end deep neural networks widely used in existing works. End-to-end diagnostic approaches based on CT scans (20,23,29,36,41-43) output the desired results directly from the original CT slices. Researchers only need to focus on how to design an appropriate deep network structure and define a corresponding objective loss to optimize the network parameters. The advantage of the end-to-end model is that all processing can be delegated to the model, and the disease diagnosis model can be trained given a large amount of training data and time. Its disadvantage is that little human prior knowledge is incorporated into the diagnosis process. When the categories are seriously imbalanced, the end-to-end model requires a more complex network design to deal with the categories with fewer samples, and the model is often difficult to converge. In contrast, the end-to-process strategy employed in our model combines a heuristic image processing algorithm with a deep learning technique, which allows a deep learning-based detection network to make full use of its advantages in dealing with the category imbalance problem. The superior performance of the otosclerosis-LNN model, including high sensitivity, high specificity, and low computational time, demonstrates that the end-to-process strategy is a reasonable choice for the OS localization task. It is hoped that this strategy can provide a good reference for model design in other diagnostic work, especially when dealing with extremely imbalanced categories.

Compared to previous deep learning-based diagnosis works (13,17,18,30,36,39,40,44,45), which only output a diagnostic result with a probability, our otosclerosis-LNN model not only outputs the diagnostic result of normal or fenestral OS but also outlines the possible lesion regions in CT slices. This greatly increases its applicability in clinical practice and helps doctors understand the diagnostic basis of the model. For example, for abnormal examinations, the model can provide valuable diagnostic evidence for otologists, which encourages them to quickly check the abnormal areas on the CT slices suggested by the model. The model can also quickly identify normal examinations at a high confidence-score threshold, allowing otologists to allocate more time and energy to abnormal and complex cases.

The proposed otosclerosis-LNN model, evaluated on the collected test set, performs comparably to or better than chief physicians (~20 years) and other otologists. The test results of the seven otologists invited into the comparison study showed that, apart from the two chief physicians, the diagnostic sensitivity and specificity of the other doctors have much room for improvement. This result also reveals an important message: the diagnostic conclusions of doctors may not be as accurate as they think, especially for junior and middle-level doctors. Therefore, it is of great significance to build clinically applicable CAD systems for assisting doctors by using deep neural networks that embody the rich clinical experience and knowledge of senior doctors. Such a diagnostic system is particularly important for areas with scarce medical resources. To demonstrate the effectiveness of the CAD system, we conducted an experiment using it to assist doctors in diagnosis. The experimental results demonstrated that most otologists, including chief physicians, achieved significant improvement in terms of sensitivity, specificity, and accuracy.

Our study has some limitations. Firstly, we evaluated the otosclerosis-LNN model on a test set collected from two different institutions, with encouraging results. However, the performance of the model may vary across hospitals, operating procedures, patient populations, and imaging equipment. In addition, the model outputs a binary classification of normal or fenestral OS based on the automatically detected fissula ante fenestram space in three-dimensional HRCT images, and further studies are needed to diagnose other diseases in the stapes footplate region. Finally, otologists often combine various patient information such as CT scans, clinical symptoms, medical records, and audiological examination in clinical diagnosis. Therefore, a CAD model based on deep learning could further consider combining multi-modal information and learn how doctors synthesize it to make a final diagnosis. Despite these limitations, the diagnostic performance achieved by our otosclerosis-LNN model is encouraging in that it is comparable to or better than that of senior otologists. Furthermore, otologists can significantly improve their diagnostic level when assisted by the otosclerosis-LNN model. The diagnosis of ear diseases based on deep learning techniques is rare and lags far behind other fields. It is now time to advance this field and design AI diagnosis models to help otologists perform routine clinical diagnosis, thus effectively reducing their diagnostic burden and avoiding “harmful” misdiagnosis.

In conclusion, we have presented a deep learning-based OS diagnostic model that can automatically localize OS lesion regions in three-dimensional HRCT scans. Although our artificial intelligence technology has not yet been used in the clinic, it showed superiority over the doctors in our tests and played an auxiliary role in existing cases. With further verification by randomized controlled trials, the model may eventually enter clinical application and be used to assist otologists in automatically and quickly diagnosing fenestral OS.

Acknowledgments

Funding: This work was supported by the National Natural Science Foundation of China (No. 61772137, 61902076, 81670281, 81570919), the big data and artificial intelligence project (2020DSJ07), the Shanghai Municipal Commission of Science and Technology Research Project (18140900304, 19140900902), and the clinical research and transformation incubation project (SZA2020004).

Footnote

Reporting Checklist: The authors have completed the STARD reporting checklist. Available at http://dx.doi.org/10.21037/atm-21-1171

Data Sharing Statement: Available at http://dx.doi.org/10.21037/atm-21-1171

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at http://dx.doi.org/10.21037/atm-21-1171). Dr. BY reports funding from the big data and artificial intelligence project (2020DSJ07), the Shanghai Municipal Commission of Science and Technology Research Project (18140900304, 19140900902), and the clinical research and transformation incubation project (SZA2020004). The other authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). The study was approved by the institutional committee of Eye and ENT Hospital, Fudan University (No. 2020005) and informed consent was obtained from all the patients.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Deafness and hearing loss: World Health Organization; 2020, updated March 1. Available online: https://www.who.int/zh/news-room/fact-sheets/detail/deafness-and-hearing-loss

- Altmann F, Glasgold A, Macduff JP. The Incidence of Otosclerosis as Related to Race and Sex. Ann Otol Rhinol Laryngol 1967;76:377-92. [Crossref] [PubMed]

- Morrison AW. Genetic factors in otosclerosis. Ann R Coll Surg Engl 1967;41:202. [PubMed]

- Declau F, Van Spaendonck M, Timmermans J, et al. Prevalence of histologic otosclerosis: an unbiased temporal bone study in Caucasians. Otosclerosis and Stapes Surgery. 65: Karger Publishers; 2007:6-16.

- Ealy M, Smith RJH. Otosclerosis. Adv Otorhinolaryngol 2011;70:122-9. [Crossref] [PubMed]

- Ealy M, Smith RJ. The genetics of otosclerosis. Hear Res 2010;266:70-4. [Crossref] [PubMed]

- Lee TC, Aviv R, Chen J, et al. CT grading of otosclerosis. Am J Neuroradiol 2009;30:1435-9. [Crossref] [PubMed]

- Stankovic KM, McKenna MJ. Current research in otosclerosis. Curr Opin Otolaryngol Head Neck Surg 2006;14:347-51. [Crossref] [PubMed]

- Chole RA, McKenna M. Pathophysiology of otosclerosis. Otol Neurotol 2001;22:249-57. [Crossref] [PubMed]

- Vicente AO, Yamashita HK, Albernaz PL, et al. Computed tomography in the diagnosis of otosclerosis. Otolaryngol Head Neck Surg 2006;134:685-92. [Crossref] [PubMed]

- Cureoglu S, Baylan MY, Paparella MM. Cochlear otosclerosis. Curr Opin Otolaryngol Head Neck Surg 2010;18:357. [Crossref] [PubMed]

- Lagleyre S, Sorrentino T, Calmels MN, et al. Reliability of high-resolution CT scan in diagnosis of otosclerosis. Otol Neurotol 2009;30:1152-9. [Crossref] [PubMed]

- Naumann IC, Porcellini B, Fisch U. Otosclerosis: incidence of positive findings on high-resolution computed tomography and their correlation to audiological test data. Ann Otol Rhinol Laryngol 2005;114:709-16. [Crossref] [PubMed]

- Huang TS. editor. A retrospective study of 37 cases. The Function and Mechanics of Normal, Diseased and Reconstructed Middle Ears: Proceedings of the Second International Symposium on Middle-Ear Mechanics in Research and Otosurgery, Held in Boston, MA, USA, October 21st-24th, 1999; 2000: Kugler Publications.

- Rusk N. Deep learning. Nat Methods 2016;13:35. [Crossref]

- LeCun Y, Bengio Y, Hinton G. Deep learning. Nature 2015;521:436-44. [Crossref] [PubMed]

- Mei X, Lee HC, Diao KY, et al. Artificial intelligence-enabled rapid diagnosis of patients with COVID-19. Nat Med 2020;26:1224-8. [Crossref] [PubMed]

- Mukherjee P, Zhou M, Lee E, et al. A Shallow Convolutional Neural Network Predicts Prognosis of Lung Cancer Patients in Multi-Institutional CT-Image Data. Nat Mach Intell 2020;2:274-82. [Crossref] [PubMed]

- Tang H, Chen X, Liu Y, et al. Clinically applicable deep learning framework for organs at risk delineation in CT images. Nat Mach Intell 2019;1:480-91. [Crossref]

- Shan H, Padole A, Homayounieh F, et al. Competitive performance of a modularized deep neural network compared to commercial algorithms for low-dose CT image reconstruction. Nat Mach Intell 2019;1:269-76. [Crossref] [PubMed]

- Hwang EJ, Park S, Jin KN, et al. Development and validation of a deep learning-based automated detection algorithm for major thoracic diseases on chest radiographs. JAMA Netw Open 2019;2:e191095. [Crossref] [PubMed]

- Wang YM, Li Y, Cheng YS, et al. Deep learning in automated region proposal and diagnosis of chronic otitis media based on computed tomography. Ear Hear 2020;41:669-77. [Crossref] [PubMed]

- Ren S, He K, Girshick R, et al. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans Pattern Anal Mach Intell 2017;39:1137-49. [Crossref] [PubMed]

- Kim J, Kwon Lee J, Mu Lee K. editors. Accurate image super-resolution using very deep convolutional networks. Proceedings of the IEEE conference on computer vision and pattern recognition; 2016.

- Lin TY, Goyal P, Girshick R, et al. editors. Focal loss for dense object detection. Proceedings of the IEEE international conference on computer vision; 2017.

- Kingma DP, Ba J. Adam: A method for stochastic optimization. International Conference on Learning Representations, 2015.

- Chaudhary A, Chouhan KS, Gajrani J, et al. Deep Learning With PyTorch. Machine Learning and Deep Learning in Real-Time Applications: IGI Global; 2020:61-95.

- Buda M, Maki A, Mazurowski MA. A systematic study of the class imbalance problem in convolutional neural networks. Neural Netw 2018;106:249-59. [Crossref] [PubMed]

- Ardila D, Kiraly AP, Bharadwaj S, et al. End-to-end lung cancer screening with three-dimensional deep learning on low-dose chest computed tomography. Nat Med 2019;25:954-61. [Crossref] [PubMed]

- Varma M, Lu M, Gardner R, et al. Automated abnormality detection in lower extremity radiographs using deep learning. Nat Mach Intell 2019;1:578-83. [Crossref]

- Chen PC, Gadepalli K, MacDonald R, et al. An augmented reality microscope with real-time artificial intelligence integration for cancer diagnosis. Nat Med 2019;25:1453-7. [Crossref] [PubMed]

- De Fauw J, Ledsam JR, Romera-Paredes B, et al. Clinically applicable deep learning for diagnosis and referral in retinal disease. Nat Med 2018;24:1342-50. [Crossref] [PubMed]

- Kather JN, Pearson AT, Halama N, et al. Deep learning can predict microsatellite instability directly from histology in gastrointestinal cancer. Nat Med 2019;25:1054-6. [Crossref] [PubMed]

- Skrede OJ, De Raedt S, Kleppe A, et al. Deep learning for prediction of colorectal cancer outcome: a discovery and validation study. Lancet 2020;395:350-60. [Crossref] [PubMed]

- Wu N, Phang J, Park J, et al. Deep neural networks improve radiologists’ performance in breast cancer screening. IEEE Trans Med Imaging 2020;39:1184-94. [Crossref] [PubMed]

- Esteva A, Kuprel B, Novoa RA, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017;542:115-8. [Crossref] [PubMed]

- Gulshan V, Peng L, Coram M, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 2016;316:2402-10. [Crossref] [PubMed]

- Shen L, Zhao W, Xing L. Patient-specific reconstruction of volumetric computed tomography images from a single projection view via deep learning. Nat Biomed Eng 2019;3:880-8. [Crossref] [PubMed]

- Cha D, Pae C, Seong SB, et al. Automated diagnosis of ear disease using ensemble deep learning with a big otoendoscopy image database. EBioMedicine 2019;45:606-14. [Crossref] [PubMed]

- Papsin BC, Gordon KA. Cochlear implants for children with severe-to-profound hearing loss. N Engl J Med 2007;357:2380-7. [Crossref] [PubMed]

- Zheng S, Li Y, Chen S, et al. Predicting drug–protein interaction using quasi-visual question answering system. Nat Mach Intell 2020;2:134-40. [Crossref]

- Zhao Y, Chen Y, Bindel D. Towards unbiased end-to-end network diagnosis. IEEE/ACM Transactions on Networking 2009;17:1724-37. [Crossref]

- Silver D, Hasselt H, Hessel M, et al. editors. The predictron: End-to-end learning and planning. International Conference on Machine Learning; 2017: PMLR.

- Zheng X, Yao Z, Huang Y, et al. Deep learning radiomics can predict axillary lymph node status in early-stage breast cancer. Nat Commun 2020;11:1236. [Crossref] [PubMed]

- Quesnel AM, Moonis G, Appel J, et al. Correlation of computed tomography with histopathology in otosclerosis. Otol Neurotol 2013;34:22. [Crossref] [PubMed]

(English Language Editor: B. Draper)